Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we’ve got a smattering of AI-related news (on genAI training controversies, release conflicts, and why generative AI might not automatically be a great tutor). We also look at Bangladesh’s Internet blackout, and then, finally, CrowdStrike and the danger of choke points.

This edition of the Ethical Reckoner is brought to you by… the IT workers responsible for manually rebooting 8.5 million Windows devices.

The Reckonnaisance

Meta refuses to release generative AI models in EU, Brazil

Nutshell: Meta is withholding its new multimodal AI model in the EU and pulling models from Brazil over claims of regulatory uncertainty.

More: This isn’t about the EU’s new AI Act but its existing flagship privacy legislation, the GDPR, and Meta’s belief that the EU hasn’t been clear enough about how it can use EU data to train AI models. Brazil ruled that Meta’s privacy policy was invalid, causing it to pull the models until it clarifies how it can use personal data.

Why you should care: Meta claims that the EU regulatory environment is “unpredictable.” Regulations exist and are supposed to provide stability and promote good innovation, but they need to be interpreted, and Meta doesn’t seem to like the speed or outcomes of the EU’s interpretations. It’s looking like AI systems may end up as bargaining chips that Meta (and Apple) withhold until they get favorable rulings.

AI companies controversially use YouTube subtitles to train AI models

Nutshell: A publicly available dataset called “The Pile,” used by companies including Apple and Anthropic, contains subtitles from YouTube videos, even though scraping them appears to violate YouTube’s terms of service.

More: The dataset—which is for training textual generative AI, not video—contains subtitles from 173,536 YouTube videos from 48,000 channels, including educational channels like Khan Academy and Crash Course; news channels like the WSJ, NPR, and the BBC; late night shows; and popular creators like MrBeast and PewDiePie.

Why you should care: YouTube’s terms of service prohibit access “using any automated means (such as robots, botnets or scrapers),” but YouTube also provides an API for accessing its services programmatically, so it’s not immediately clear where the line is. Still, this sort of automated subtitle scraping would seem to run afoul of the terms. Also, no one asked the creators whether their content could be used, which is disrespectful at best. And yet, this dataset has helped train models for some of the biggest companies in the world. Also concerning is the presence of flat-earth conspiracy videos and racial and gender slurs in the dataset, which can help embed bias in AI models.

Generative AI might harm learning

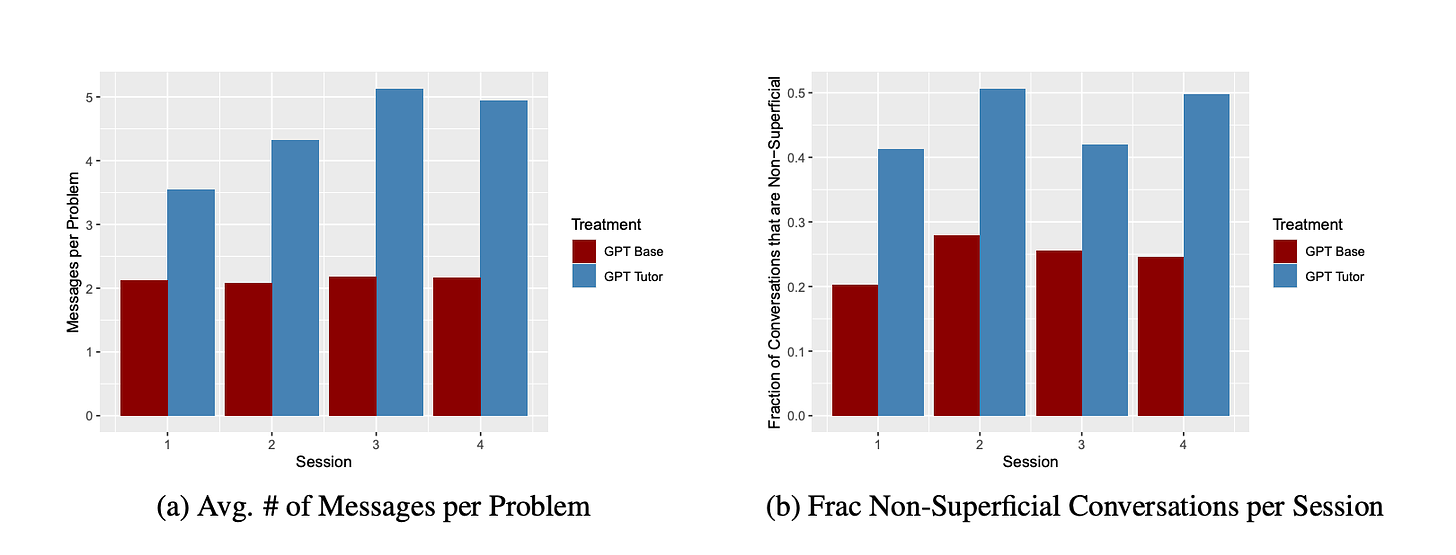

Nutshell: A new study suggests that generative AI can serve as a “crutch” that harms learning, absent safeguards.

More: A pilot study on math students compared exam results across three groups: students with access to ChatGPT, students with access to a specially tuned “GPT Tutor” designed to nudge them towards the answer, and students with no AI. The researchers found that when students had access to AI, their performance on practice problems improved, but on an unassisted exam, students who had been using ChatGPT performed 17% worse than the control group and the GPT Tutor group.

Why you should care: “A tutor in every student’s pocket” has been a major selling point of generative AI, but this study seems to show that careful design is crucial when using AI for education: handing every student a ChatGPT account won’t automatically improve learning, especially if it just gives them the answers (or wrong answers, since generative AI is not great at math). The GPT Tutor didn’t harm performance, but nor did it improve it, indicating a need for more research and better design.

Bangladesh government-imposed Internet blackout continues

Nutshell: Amidst massive protests, the government has imposed a blackout on mobile data and Internet services.

More: The student-driven protests were initially demanding an end to a quota system that reserves 30% of government jobs for relatives of veterans of Bangladesh’s 1971 war of independence, but have since spread and turned deadly. The Internet blackout that began on July 18 is impacting protestor coordination, making information about the protests difficult to come by, and is a devastating blow to Bangladesh’s $1.4 billion tech sector. Despite this, the protestors secured a victory when the high court decreased the quotas to 5%, but some students have said they will keep protesting until there’s systemic change.

Why you should care: The Internet is only as free as your government wants it to be.

Extra Reckoning

Ok, let’s talk about CrowdStrike. There’s been a lot written about it, but I haven’t been totally satisfied with what I’ve seen—breakdowns are either too simplistic or too technical—and so I figured I’d write something myself about what happened and why it matters.

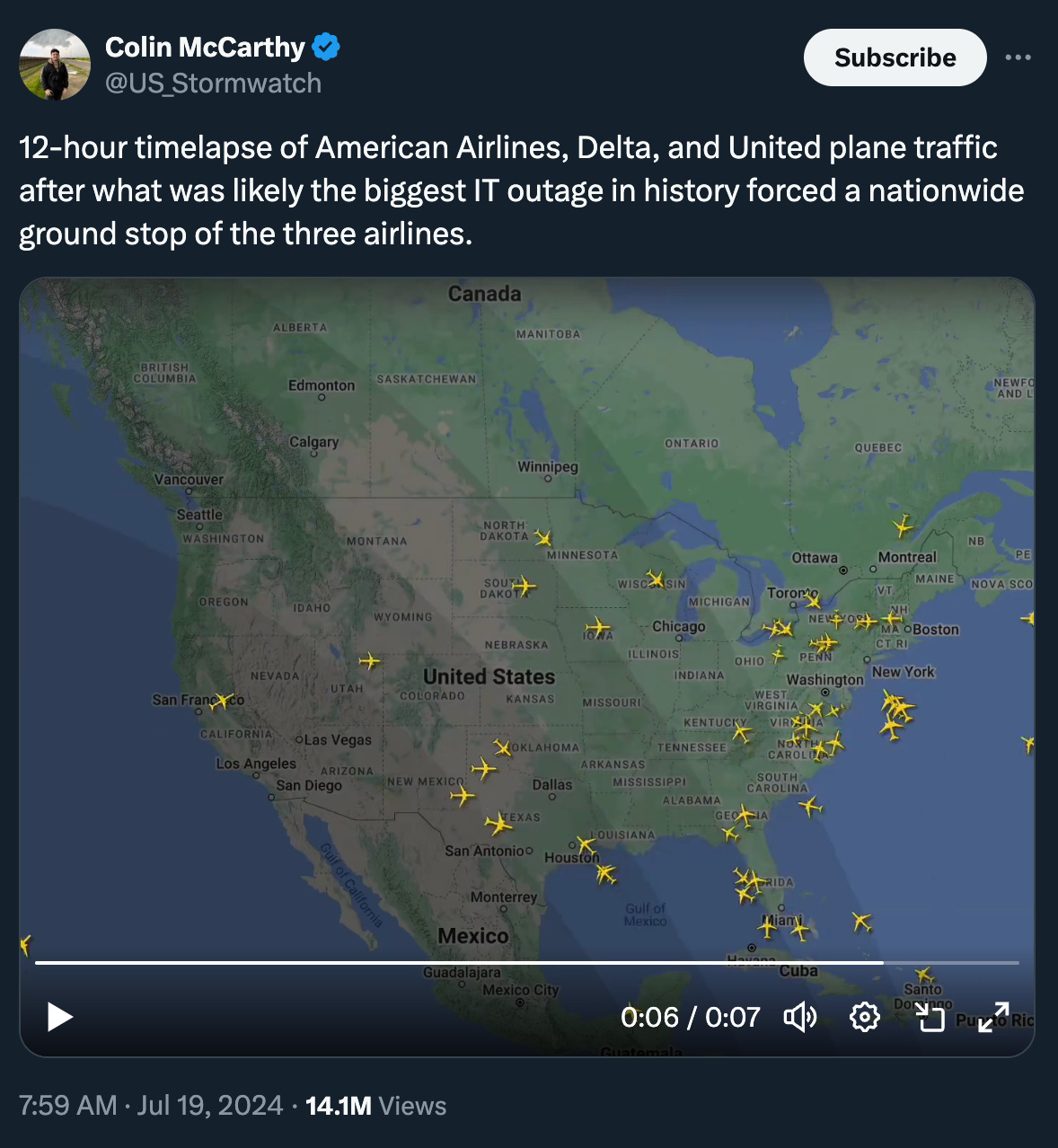

Despite being a multi-billion dollar company, chances are you hadn’t heard of CrowdStrike until Friday, and then you heard about it a lot. CrowdStrike is a cybersecurity company that makes software used by over half of the Fortune 500; they’re an enterprise software company (meaning they sell to other businesses), so you probably don’t have their software on your personal devices, but you might have it on your work computer. Security companies update their software a lot to deal with new threats, and late Thursday night/early Friday morning (ET) they pushed out an update that got 8.5 million Windows devices, including computers and servers, stuck in a boot loop displaying the “blue screen of death.” Because so many big companies use this software, banks, hospitals, 911 services, retailers, and airlines were all impacted. The last was perhaps the most noticeable, with airline meltdowns stranding people across the world.

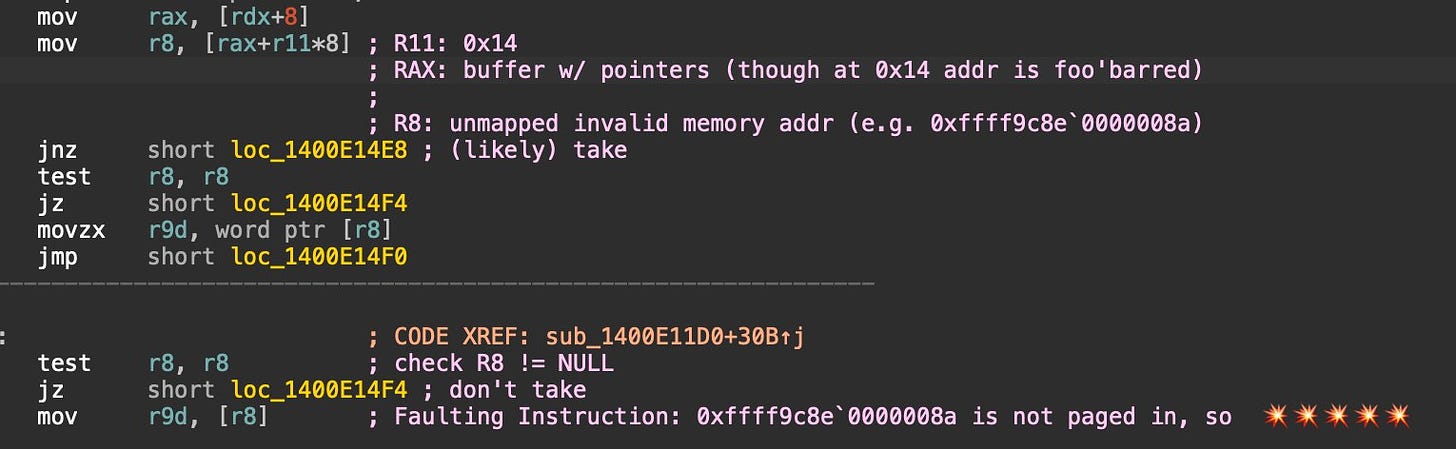

So what happened? On a technical level, the fault lay in a tiny “channel file” that supported the “Falcon Sensors” that monitor the system for threats. They work extremely closely with the operating system and the files themselves are pretty inscrutable (unless you like assembly code, which… I strangely do). People have been poking around at these files and the leading theory is that the faulty code tries to move some data to a memory address that wasn’t paged in. This means nothing to 99% of you, so imagine I’m trying to get you to put some apples in the fridge. It goes something like this:

Me: Hey, here’s a bag of apples. Put them in the crisper drawer in the fridge.

You: You haven’t told me where the crisper drawer is!

Me: Whoops, sorry. Is everything ok?

You: No.

*house explodes*

This is the basic interaction between this piece of the CrowdStrike software and Windows. Because the operating system didn’t know where to put this data, it panicked and restarted (hence the blue screen of death), but because this process runs every time a computer boots up, it turned into a vicious cycle. The first and primary remedy was to manually reboot each affected device in recovery mode and delete the bad channel file (which is why recovery has been so slow), but Microsoft (after suggesting “reboot up to 15 times” as a solution) released a tool that makes it slightly less manual. I kept seeing the debacle called the “Windows outage” or something to that effect, which I’m sure Microsoft PR loved. But it was fundamentally a CrowdStrike issue that manifested on Windows machines because macOS and Linux work differently and don’t use these channel files.
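For the programmers in the audience, here is a toy Python sketch of that vicious cycle. To be clear, this is not CrowdStrike's or Microsoft's code: `Machine`, `SystemCrash`, and the file names are all made up for illustration, and the "crash" is just an exception standing in for the bad memory access. The toy model is also fully deterministic, so a plain reboot never helps in it, which may not hold for every real machine.

```python
from dataclasses import dataclass, field


class SystemCrash(Exception):
    """Stands in for the blue screen of death (hypothetical, for illustration)."""


@dataclass
class Machine:
    # Files a hypothetical security driver reads at every normal boot.
    channel_files: dict = field(default_factory=dict)

    def boot(self):
        """Normal boot: the driver loads every channel file before you get a desktop."""
        for name, contents in self.channel_files.items():
            if contents == "BAD":
                # Toy stand-in for "put the apples in a drawer nobody told me about".
                raise SystemCrash(f"crashed while loading {name}")
        return "desktop"


def reboot_until_up(machine, max_attempts):
    """Reboot repeatedly; return the attempt number that succeeded, or None."""
    for attempt in range(1, max_attempts + 1):
        try:
            machine.boot()
            return attempt
        except SystemCrash:
            continue  # the same file is loaded on the next boot, so it crashes again
    return None


machine = Machine({"channel_a": "OK", "channel_b": "BAD"})
print(reboot_until_up(machine, 15))  # None: the bad file is still there every time

# The manual fix: get in without loading the driver and delete the bad file.
del machine.channel_files["channel_b"]
print(reboot_until_up(machine, 15))  # 1
```

The point of the sketch is that the crash happens before anything that could fix it gets a chance to run, which is why someone had to step in by hand on each device.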

There are many theories as to how this happened, but it’s clear that CrowdStrike’s quality testing fell down. It’s possible that changes to these files (which can happen multiple times per day) were seen as not important enough to warrant thorough testing, and if that’s the case… whoops. When I was a software engineer, we had layers of testing to make sure that something like this didn’t happen. And sure, things slip through the cracks, but if your quality assurance testing doesn’t detect a bug that blue screen of deaths everything, it’s clearly broken. So don’t blame whatever poor engineer wrote the code, blame the system that let it ship.

There’s also a more macro-level point here, which is that when an industry (like cybersecurity) becomes super consolidated, things like this can and will happen because you create single points of failure. Across the world, there’s a new wave of antitrust sentiment trying to spur innovation by restricting or even breaking up tech companies. People opposed to antitrust regulation usually claim that it would hinder economic efficiency. And on a day-to-day basis, maybe that’s true. But if you zoom out and include catastrophic events like this one (or the 2020 Cloudflare outage, or the 2021 Meta breakage, etc.), is that still true? If industries become more fragmented (if, instead of half of the Fortune 500 using the same cybersecurity service, they used 5-10 different ones), it may cause marginally more economic inefficiency on a day-to-day basis, but if it averts major incidents like this by shifting from single points of failure to a more distributed network, it may be worth the trade-off.
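To put rough numbers on that trade-off, here is a back-of-the-envelope sketch in Python. Everything in it is an assumption for illustration: 250 customers as a round stand-in for "half of the Fortune 500", customers split evenly across vendors, and every vendor having the same independent chance of shipping a bad update in some period (the 10% figure is invented, not measured).

```python
def worst_case_outage(customers, vendors):
    """Customers knocked out by a single bad update, assuming an even split."""
    return customers // vendors


def expected_outage(customers, vendors, failure_prob):
    """Average customers knocked out per period, assuming each vendor
    independently ships a bad update with probability failure_prob."""
    return vendors * failure_prob * (customers / vendors)


CUSTOMERS = 250      # assumed round number
FAILURE_PROB = 0.1   # invented for illustration

for vendors in (1, 5, 10):
    print(
        vendors,
        worst_case_outage(CUSTOMERS, vendors),
        expected_outage(CUSTOMERS, vendors, FAILURE_PROB),
    )
```

Under these assumptions the average harm comes out about the same however many vendors there are, but the damage from any single bad update shrinks from 250 customers to 50 or 25. That is the distributed-network argument in miniature: fragmentation does not make bugs rarer, it makes each one smaller.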

I Reckon…

that you can wake me up when September (and October and part of November) ends.