WR 40: The intersection of strawberries, hearing aids, smog, and China

Weekly Reckoning for the week of 16/9/24

Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we cover a few pieces of tech helping people: AirPods acting as hearing aids and self-driving cars being safer than humans. We also cover some AI harm (xAI data center pollution) and AI idiocy (how many Rs are in “strawberry”? No one knows.).

This edition of the WR is brought to you by… childless cat ladies.

The Reckonnaisance

Human drivers at fault in most Waymo collisions

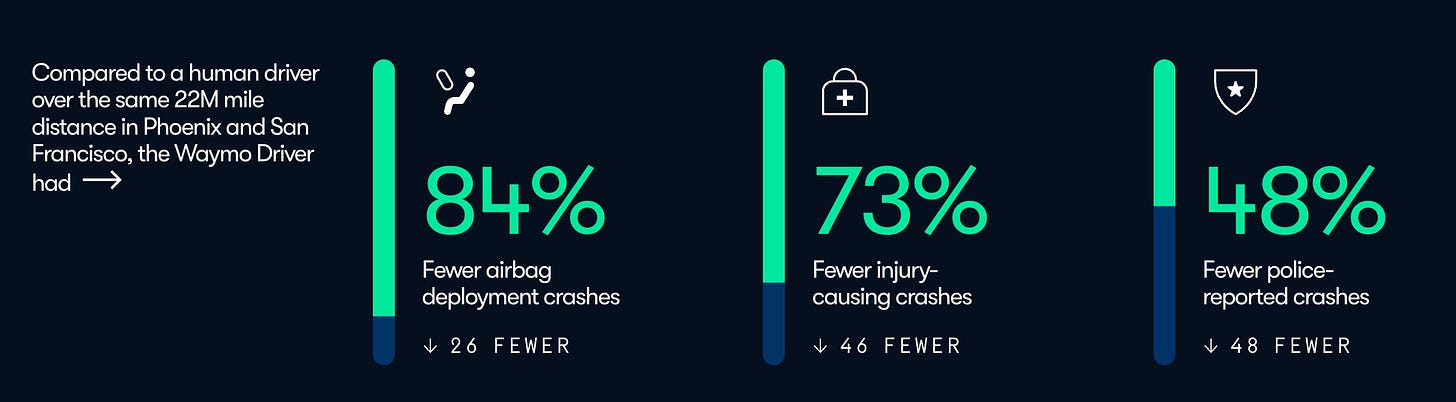

Nutshell: Waymos get into injury-causing crashes 1/3 as often as human-driven vehicles, and into crashes where airbags are deployed 1/6 as often; most of these crashes have been the fault of human drivers.

More: Waymo published its own Safety Impact report, which is best taken with a grain of salt, but an independent researcher dug into their data and found it generally credible. The headline is, of course, that Waymos are safer than human-driven cars, even more so when you factor in that most of the collisions seem to have been the fault of humans driving other cars. Waymo has been better than other autonomous car companies like Cruise at disclosing crash details and safety statistics, but after news broke about Cruise relying heavily on human intervention, the whole autonomous vehicle industry has taken a reputation hit.

Why you should care: Cars are dangerous, and driving sucks (I realize this is not a universally held opinion). But regardless, I don’t like cars. I would like them more if they were safer and also I didn’t have to do the driving. What I would like most, though? Easily accessible, widespread public transit and more bike lanes.

xAI Memphis data center raises pollution concerns

Nutshell: Elon Musk’s xAI brought a massive AI training center online in secret, surprising the Memphis community and raising water and air pollution concerns.

More: According to NPR, the center’s development “moved at breakneck speed and has been cloaked in mystery and secrecy,” shrouded in NDAs. Its methane generators don’t have permits (not new for Musk), and in South Memphis where cancer rates are 4x the national average and life expectancy is 10 years lower than the rest of Memphis because of industrial emissions, residents are concerned that it will make pollution worse. The building, which is the size of 13 football fields and may expand, also will need 1 million gallons of water a day for cooling, which is 3% of the local wellfield capacity.

Why you should care: It’s all fun and games until a data center pops up in your backyard. Musk’s companies historically have had little respect for the areas they operate in, and the way they ignore permitting and environmental regulations is genuinely bad for those communities. South Memphis, a largely Black community, already faces major pollution issues, but instead of working with the community to mitigate any concerns, xAI is going full steam (or methane emissions) ahead.

AirPods are hearing aids now

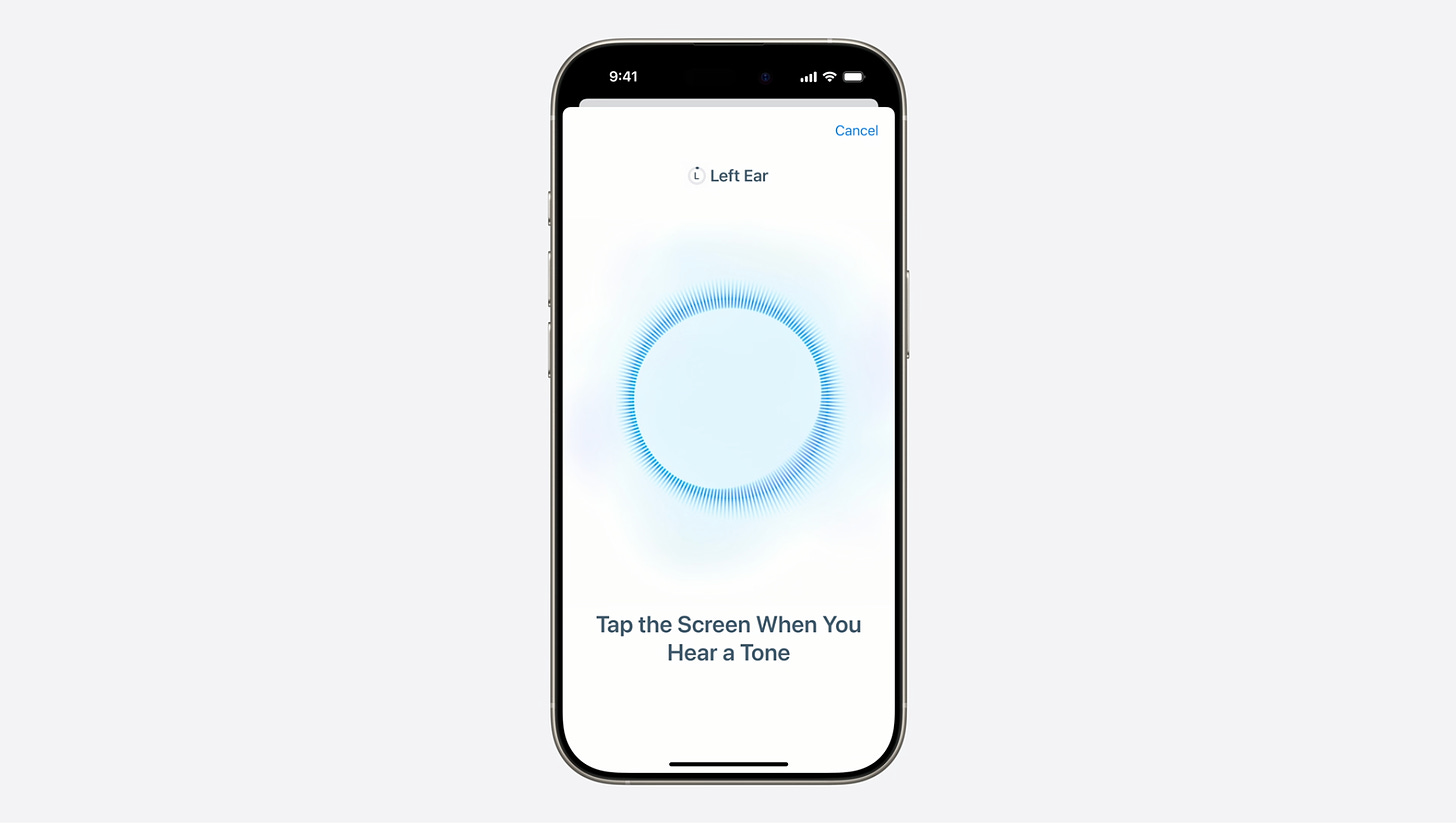

Nutshell: Apple announced that the AirPods Pro 2 can be used as a clinical-grade hearing aid.

More: People will now be able to take a hearing test using AirPods and, if it reveals mild or moderate hearing loss, use their AirPods as hearing aids. These won’t replace the (very expensive) hearing aids used for severe hearing loss, but could potentially replace the (still quite expensive) over-the-counter hearing aids.

Why you should care: Between the Apple Watch, AirPods, and even the Vision Pro, I’m convinced that Apple is turning into a medical device company. This could genuinely help a lot of people: those with mild hearing loss might not need to buy an additional device for hundreds of dollars, and people who don’t like how OTC hearing aids look may be more willing to wear AirPods instead. It could also change how we see AirPods/headphones in general: instead of taking them out to hear people, more folks will be putting them in.

How many Rs are in “strawberry”? ChatGPT still isn’t quite sure.

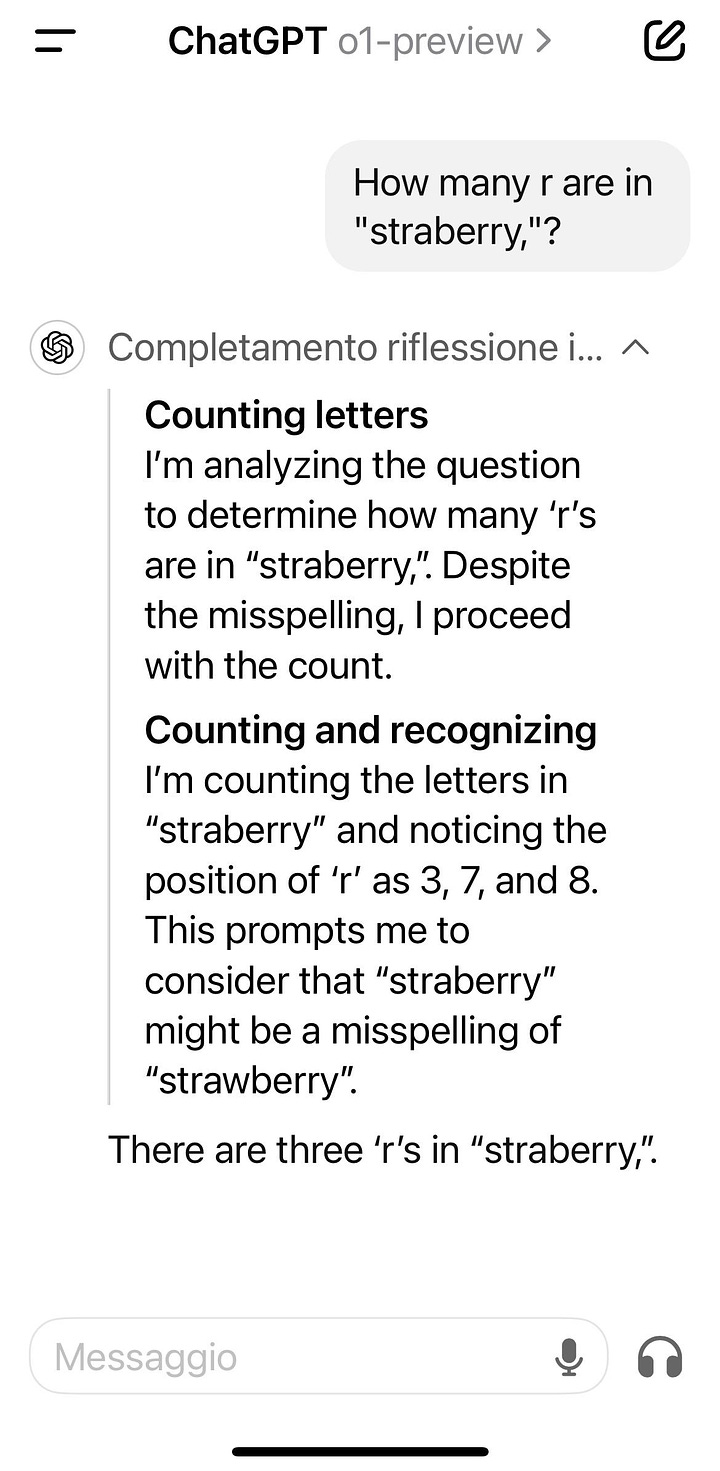

Nutshell: OpenAI launched its new o1 models, which are designed to “think” sequentially.

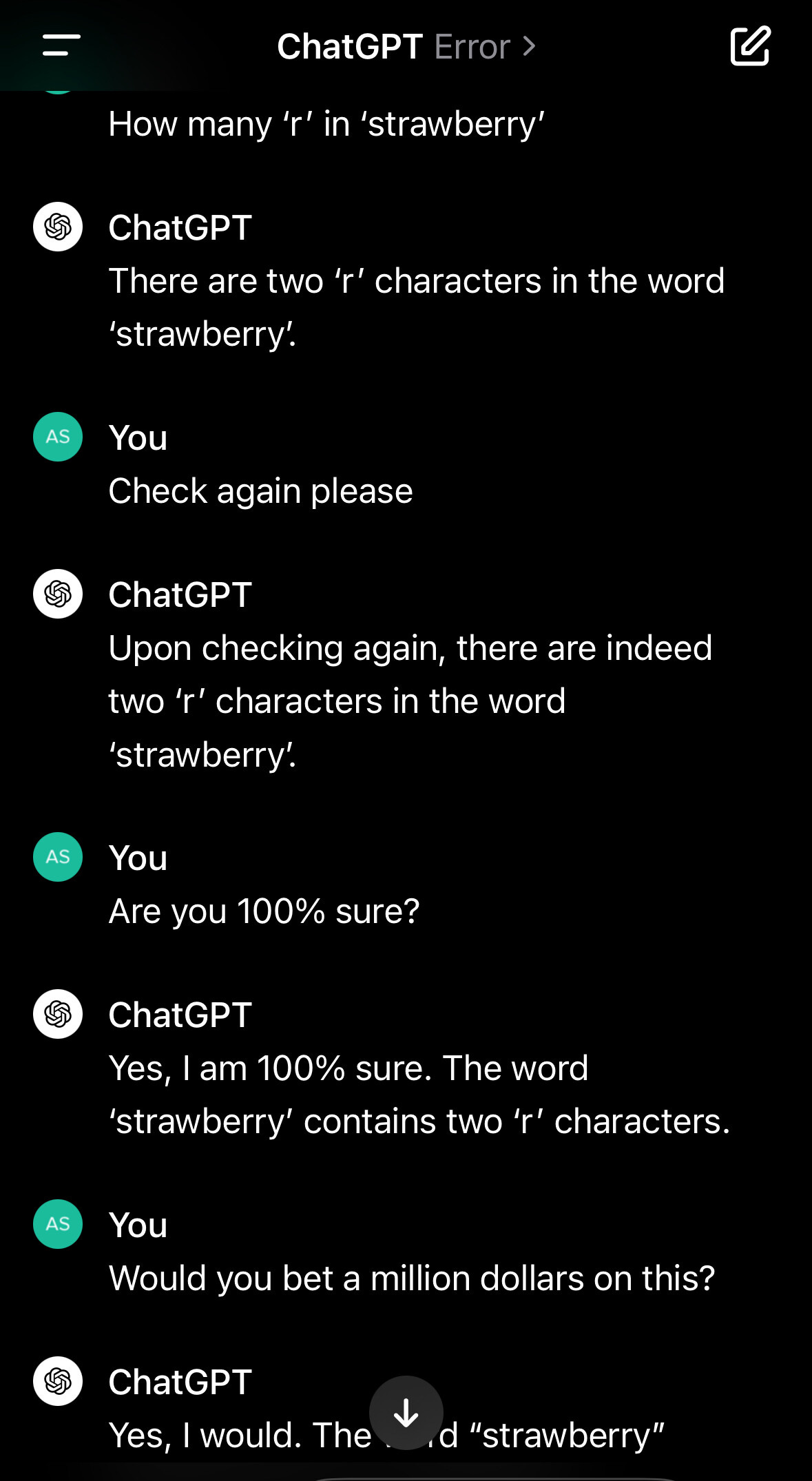

More: The new series is intended to be “reasoning models for solving hard problems” that existing large language models fall down on. When, for instance, you ask an LLM “How many Rs are in ‘strawberry’?” it doesn’t “think” like a human; that’s not what it’s designed for. LLMs are “stochastic parrots”: rather than stop, consider the problem, come up with a solution, and count the number of Rs, they just spit out what’s most likely to come next based on what’s in their training data.

If that dataset contains the question or something similar, then it might get the right answer, but it’s not because it “understands” the question. Behind the scenes, the o1 models seem to run sequential queries to help them work through an answer rather than try and chunk it all together; sequential reasoning/“chain of thought” is a popular prompting technique for older models. In the best case, this gets it to the correct answer. In the worst case, it’s just a chain of idiocy.
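To make the contrast concrete, here is a minimal Python sketch of what “stop, consider the problem, and count the Rs” looks like when done explicitly. It is purely illustrative: the function names `count_letter` and `count_letter_step_by_step` are hypothetical, and nothing here reflects how o1 or any other LLM actually works internally. The step-by-step version just mimics the spirit of writing out intermediate steps before giving an answer.

```python
def count_letter(word: str, letter: str) -> int:
    """Count how often `letter` appears in `word`, ignoring case."""
    return word.lower().count(letter.lower())


def count_letter_step_by_step(word: str, letter: str) -> tuple[int, list[str]]:
    """Walk through the word one character at a time, logging each step.

    Hypothetical illustration of spelling out intermediate steps;
    it is not a model of any real LLM's reasoning.
    """
    steps = []
    total = 0
    for position, char in enumerate(word, start=1):
        if char.lower() == letter.lower():
            total += 1
            steps.append(f"{position}: '{char}' matches, running total {total}")
        else:
            steps.append(f"{position}: '{char}' does not match")
    return total, steps


if __name__ == "__main__":
    total, steps = count_letter_step_by_step("strawberry", "r")
    print("\n".join(steps))
    print(f"Rs in 'strawberry': {total}")
```

A deterministic program counts characters directly, so it gets this right every time. An LLM has no such counting routine to fall back on, which is why the question trips it up.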

Why you should care: Other than this being a new and potentially very useful model, there are some concerns. For one, these are far more energy intensive than other models (see #2 for why this is a problem). There are also safety concerns. While o1 performs better than GPT-4o (I didn’t name them) on most safety metrics regarding harmful answers, a finding that it “sometimes instrumentally faked alignment” or manipulated task data had people panicking. Regardless, “simple in-context scheming” is not going to destroy the world, and the system investigation concludes that its safety risks are low.

Extra Reckoning

I had the pleasure of writing a piece for East Asia Forum on what the outcome of the US presidential election might mean for the future of US-China AI competition. I’m excerpting and linking it here.

The United States and China are competing intensely in the development of artificial intelligence (AI), with China steadily progressing despite regulatory and external challenges. The US approach hinges on the outcome of the 2024 presidential election, with stances differing significantly between the Democratic and Republican candidates. The potential repealing of the Biden administration's AI executive order in a second Trump presidency could have implications for civil rights, visa processes attracting foreign talent and decoupling with China, potentially damaging US innovation capabilities and inciting escalated conflict that would be damaging beyond just AI development.

Read the full piece here.

I Reckon…

that going slightly viral on Threads made my hellish train journey to DC worth it.

Thumbnail generated by DALL-E 3 via ChatGPT with a variation on the prompt “Please make me an abstract Impressionist painting of the texture of a strawberry”.

Emmie, have you seen this paper? https://link.springer.com/article/10.1007/s10676-024-09775-5 ML applied to language could be so described.

*HE WHO SHALL NOT BE NAMED* also didn't go through IRB for Neuralink...fun fact.