Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we cover new developments in agentic AI (hint: your AI may be dating other AIs soon) and how South Korea, facing a wave of abusive AI deepfake porn, is trying to outlaw it. Then, Harvard students raise awareness of facial recognition in public, and underwater drones raise awareness of sunken ships.

This edition of the WR is brought to you by… shock that somehow it’s already October??

The Reckonnaisance

Grindr testing AI “wingman”

Nutshell: Gay dating app Grindr is planning to add AI agents to help plan dates, recommend people to talk to, and potentially “date” other people’s wingmen.

More: The test group is currently fewer than 1,000 users and will expand to 10,000 in 2025, so at least they aren’t rushing this out. Initially, the AI seems likely to act more like an assistant, but over time, Grindr wants to have it interact with other users’ AI agents. It’s unclear whether the agents would converse as bots or try to imitate their owners, but if the latter, it’s getting real close to Black Mirror’s “Hang the DJ.” The model builds on an Ex-human model that was trained to have romantic conversations; Grindr fine-tuned it to be “more gay.” This process will probably include training on Grindr user conversations, but the company, which has had questionable data practices in the past, promises it will ask users for permission.

Why you should care: This speaks to a larger trend in “agentic AI,” AI designed to act on your behalf. Tl;dr: it’s rising. Microsoft sees it as the future, although there’s a big difference between having AI make your restaurant reservations and having it actually chat someone up for you. If it’s just two AI agents explaining information about their people to each other, that doesn’t seem too scary; it’s basically like someone talking through their friend’s profile with a prospective date. But if they’re pretending to be their person, that gets sketchy fast, especially if it’s not disclosed that you’re talking to an AI agent.

Abusive deepfakes rattle South Korea

Nutshell: The country “most targeted by deepfake pornography” made watching or possessing it illegal.

More: Making sexually explicit deepfakes with the intent to distribute them was already illegal, but now anyone who purchases, saves, or watches them could face up to three years in jail or a fine of up to 30 million won (~$22,600). This adds to South Korea’s laws against other “obscene materials,” which include most consensual pornography. Deepfake investigations have exploded this year: there have already been 800 investigations of deepfake sex crimes, compared to 156 in all of 2021. The data shows that many of the perpetrators are teenage boys, but few actually end up in prison.

Why you should care: Non-consensual intimate images are ruining women’s lives, and South Korea has an “epidemic of online sex crimes,” not just deepfakes. South Korea also has some of the biggest gaps between men and women in terms of conservatism and, more broadly, is reckoning with a nationwide “gender conflict.” Whether this law helps remains to be seen; South Korea’s laws against pornography aren’t really enforced, although the fact that police are investigating is promising. Countries like the US, where states are creating a patchwork of anti-deepfake laws that don’t actually help victims, should take note.

College students raise awareness of facial recognition

Nutshell: Harvard students used smart glasses, face searches, and public databases to dox strangers in public.

More: The students took a pair of Meta smart glasses, livestreamed their video to Instagram, then used a facial recognition algorithm to detect faces. They put the images into existing face search tools (these are very scary) to find people’s names, then plugged those names into online databases to find their phone numbers and addresses, all sent to a phone app in real time. The demo video shows them going up to random people on the subway and pretending to know them, as well as identifying classmates’ parents. The students said they did this to raise awareness of how easy it is to get people’s information with existing technologies.

Why you should care: Much has been made of how this is the fault of smart glasses or augmented reality (AR). The thing is, they could’ve done all of this with just a phone; the glasses just make it less obtrusive. (They aren’t AR glasses, but if they were, they could theoretically project all the info onto your field of vision. Police forces and militaries in multiple countries are investing in AR facial recognition glasses.) We live in an era where basically anyone can be found online, so the only way to protect yourself is to think about what you share and to remove your data from these databases, if you live somewhere that lets you.

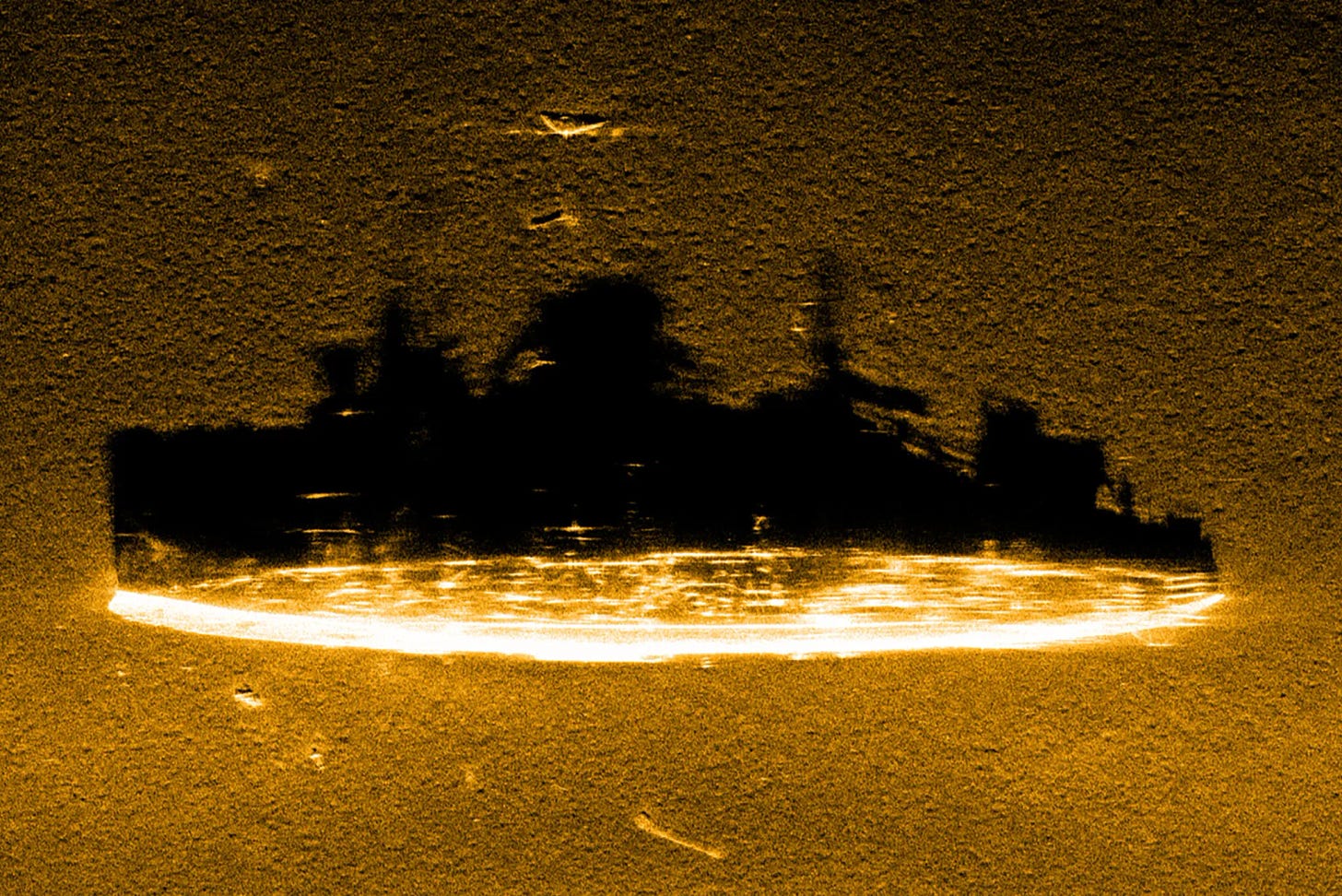

Underwater drones find “ghost ship”

Nutshell: A fleet of underwater drones found the lost USS Stewart, the only US Navy destroyer captured by Japan during WWII.

More: This was the result of a convergence of highly specific interests. The Air/Sea Heritage Foundation had been trying to track down the ship, which was sunk in 1942, raised and used by Japanese forces, and then sunk by the US as target practice after the war; no one had been able to pin down exactly where it ended up. Ocean Infinity makes underwater drones and wanted to test using them as a fleet, so it ran the test in the ship’s search area. The drones were able to map the seafloor much faster than traditional methods and found the ship within hours.

Why you should care: Technology isn’t all scary. Sometimes the future looks like a dystopia, but sometimes it looks like underwater drones helping us solve mysteries (of course, they may also end up having scary military applications, in which case I will find something else to be optimistic about). Ocean Infinity is also going to start a new search for the missing MH370 plane in November, so watch this space.

Extra Reckoning

Today, I want to share a couple of papers I’ve had published in the last few weeks. The first is a piece in Nature Index on how the “Big Tech Effect” is outweighing the Brussels Effect in US state AI regulation. In other words, when considering regulation, states are looking less to the EU’s AI Act than to tech companies, which are lobbying for and against specific provisions and even distributing draft bills that legislators are pulling from.

Read more here!

The second is a paper published in Digital Society called “Governing Silicon Valley and Shenzhen: Assessing a New Era of AI Governance in the US and China.” What’s it about? I’ll let the Google AI overview tell you (truth be told, I was shocked when I googled it to get the link and this popped up).

This paper is the follow-up to my earlier paper “AI with American Values and Chinese Characteristics” (yes, I’m pleased with the titles) and looks at new developments in AI governance in the US and China.

If you read either of them, drop your thoughts in the comments!

I Reckon…

that Apple Intelligence is a comedian.

Thumbnail image generated by DALL-E 3 via ChatGPT with the shipwreck image and iterations on the prompt “Please generate a brushy, abstract Impressionist version of this image.”

If they find MH370, I'll kiss the underwater drones team.