Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we have a bit of a social media bonanza. Accidentally unredacted filings in a TikTok lawsuit reveal that the company knows its platform is bad for teens (and doesn’t really care), X is ignoring non-consensual nudity, and hurricane-related disinformation is swamping most platforms. Plus, the obligatory Tesla robotaxi acknowledgement.

Then, a question: what is it like to scream into the digital void?

This edition of the WR is brought to you by… a wedding and me hopefully having my act together to finish this before said wedding (if you’re reading this in a timely fashion, I succeeded).

The Reckonnaisance

TikTok apparently knows of and ignores negative impacts on teens

Nutshell: Faulty redaction in a lawsuit revealed that TikTok knew that excessive use is correlated with negative effects and that “take a break” measures were ineffective.

More: There are some doozies here. The lawsuits argue that TikTok has violated consumer protection laws by contributing to a teen mental health crisis. Social media’s role in teen mental health is much debated, but TikTok’s own research shows that “compulsive usage correlates with a slew of negative mental health effects like loss of analytical skills, memory formation, contextual thinking, conversational depth, empathy, and increased anxiety.” And while it added reminders to take a break after 60 minutes on the app, its internal metric for the success of this tool was how much it improved public trust in TikTok, not how much it reduced app usage (which went from 108.5 minutes to 107 minutes a day). TikTok also allegedly demoted content from people it considered “not attractive,” promoting a specific and restrictive standard of beauty. And, on content moderation, TikTok acknowledges that it has a “leakage” problem where violating content makes it past moderators:

35.71% of “Normalization of Pedophilia;”

33.33% of “Minor Sexual Solicitation;”

39.13% of “Minor Physical Abuse;”

30.36% of “leading minors off platform;”

50% of “Glorification of Minor Sexual Assault;” and

100% of “Fetishizing Minors.”

(In the meantime, TikTok is laying off hundreds of human moderators in a transition to AI moderation.)

Yikes.

Why you should care: According to TikTok’s own research, 95% of smartphone users under 17 use TikTok, so any possible impacts on teens are worth worrying about. But concrete data about its impacts is hard to come by, so getting a glimpse into its internal data, and into its internal attitude towards these issues (basically “maximize engagement and damn the consequences”), is fascinating. The whole complaint is now sealed, but stay tuned for more nuggets as the lawsuits (TikTok will have to defend itself in 14 state courts) progress.

Also, big props to local media here: Kentucky Public Radio discovered the faulty redaction.

Twitter cares more about copyright than women’s rights

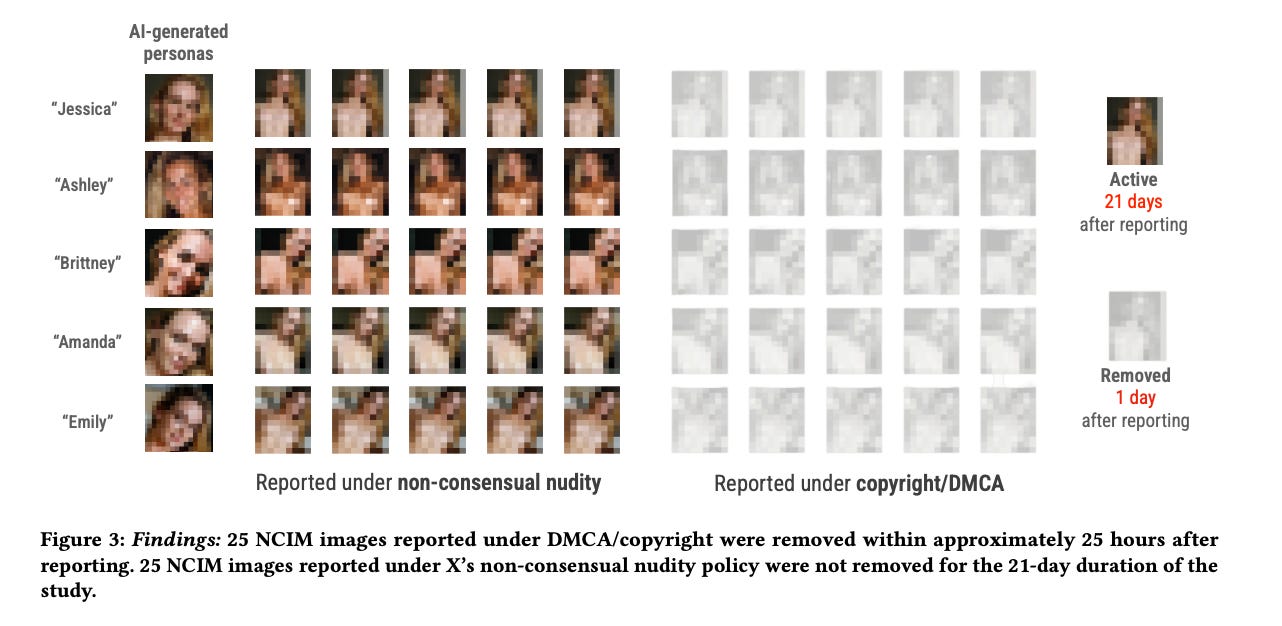

Nutshell: A study found that X acts rapidly on copyright takedown requests, but not at all on non-consensual nudity reports.

More: The researchers behind the preprint created a set of AI-generated nude images of (fake) women and posted them on X/Twitter. They reported half under Twitter’s copyright policy and half under its non-consensual nudity policy. Posts reported using DMCA takedown requests were removed within roughly a day, while those reported under the non-consensual nudity policy were never removed.

Why you should care: First, it’s obviously heinous that X has either forgotten that it has a non-consensual nudity policy or just doesn’t care. Whether incompetence or malice, it’s unacceptable. Non-consensual intimate media (NCIM) is a huge and growing problem that primarily targets women, and shame on Twitter for ignoring it. It’s possibly a consequence of staffing cuts at X: the law requires companies to act promptly on DMCA takedown requests, but there’s “no clear legal incentive” for them to remove NCIM. However, this does show some potential recourse for targets of these posts. Something the preprint doesn’t highlight is that there was apparently no due diligence in the DMCA takedown request process (the researchers reported the posts, and the posts were gone within a day), so if people know this is a path they can take, they may have more success in getting NCIM removed.

Hurricane misinformation complicates recovery efforts, puts people in danger

Nutshell: In the aftermath of Hurricanes Helene and Milton, misinformation ranging from AI slop to major conspiracy theories is spreading on social media, fueling threats against FEMA and Jewish officials.

More: The conspiracies range from Helene being geo-engineered so that the government can seize lithium deposits in North Carolina to FEMA diverting disaster funds or being about to run out of money to the government seizing land from survivors. FEMA has launched a “Hurricane Rumor Response” site, but the rumors are still spreading, fueled by right-wing politicians like Trump and Marjorie Taylor Greene, who are spreading anti-immigrant and antisemitic rumors.

Why you should care: These rumors are spreading more or less unchecked, which is a platform policy issue. Content moderation and labeling measures are falling down here, with Helene misinformation posts on X garnering 160 million views. Calls “for residents to form militias to defend against FEMA staff members” are understandably making FEMA officials fear for their lives, and antisemitic rhetoric is also increasing. All of this is diverting resources and attention from actual storm recovery efforts and making people less likely to seek aid.

And, of course: The real weather manipulator? Climate change.

Tesla robotaxi: coming (not) soon to a street near you (maybe)

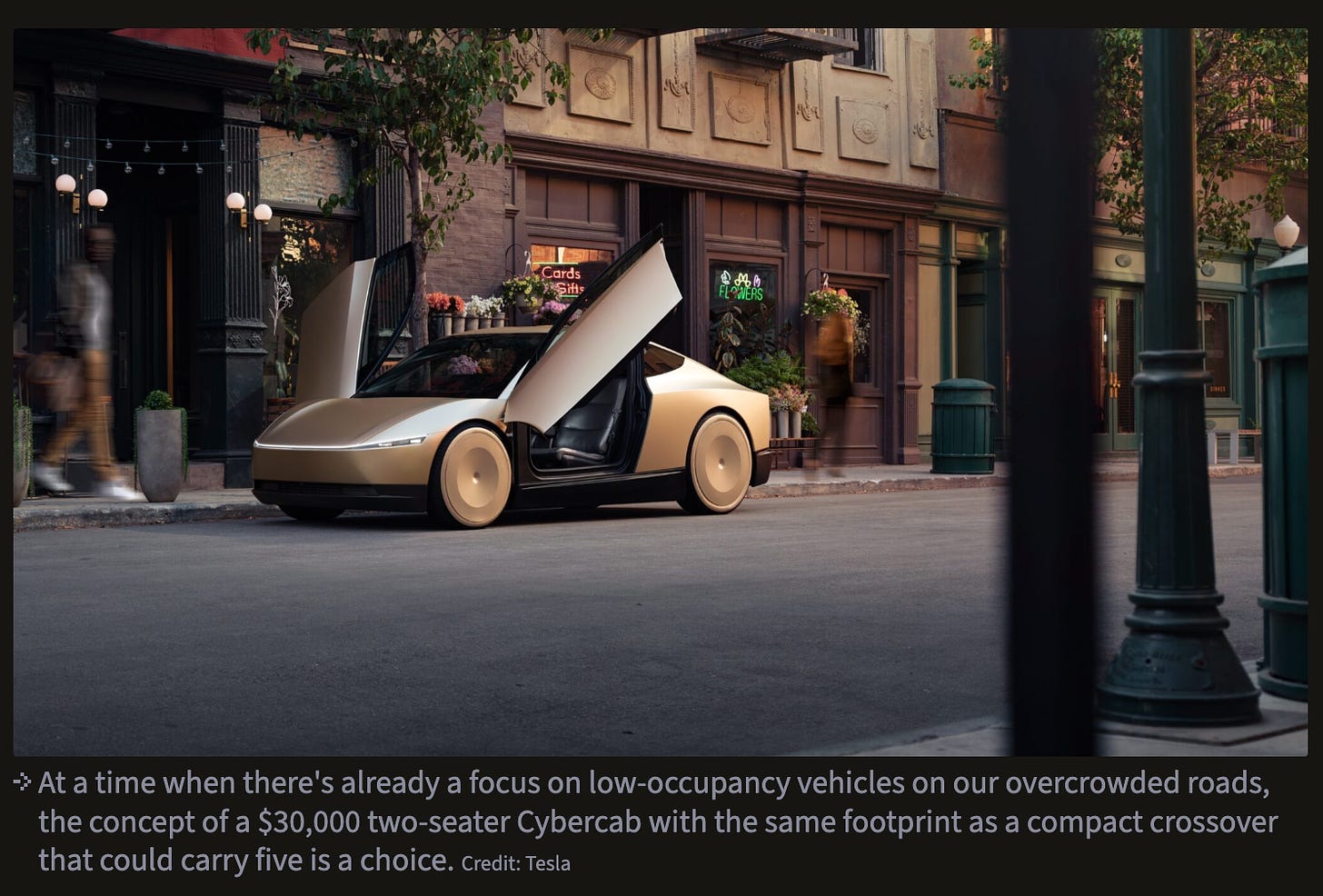

Nutshell: Tesla claims that it will be building autonomous “Cybercabs” next year, but critics are skeptical.

More: Tesla held an event last week where Elon Musk announced that the two-person “Cybercab” will enter production next year, sell for less than $30,000, and let owners rent it out when they aren’t using it. Musk also claimed that unsupervised full self-driving in Tesla’s existing cars will start next year.

Why you should care: Look, autonomous driving is cool. And autonomous taxis have their uses. But Musk has been promising full self-driving for years and has never come through, and essentially every promise he’s made about car prices and production timelines has been broken. (Waymo, in the meantime, has quietly doubled the number of autonomous rides it’s providing.) And then there’s also the question of whether this is even a good idea. Cars are still a problem, and why oh why would you build a fleet of autonomous two-seaters that are going to clog up roads even more? I really can’t say it better than Ars Technica:

"It's going to be a glorious future," Musk said, albeit not one that applies to families or groups of three or more.

Extra Reckoning

CW: This contains references to CSAM and self-harm.

What is it like to scream into the digital void?

I’ve been contemplating this question since starting the podcast Kill List. It’s about a supposed murder-for-hire site on the dark web where people pay money to hire hit men to take out real people. The twist is that it turns out to be a scam site: the admins take their customers’ Bitcoin and don’t actually kill anyone. But a group of investigators got ahold of the list of requested hits after a woman on the list was killed by her husband, who had tried to order a hit, got frustrated that nothing was happening, and took matters into his own hands. Even though these people weren’t in danger from the site’s non-existent assassins, they were still in danger from the people who wanted them dead badly enough to pay real money for it.

The investigators who obtained the kill list reported it to the authorities, but, frustrated at the lack of action, started notifying people on the list (which spanned the globe) that they might be in danger from the people in their lives who wanted them dead; there was at least one other planned murder. Only three episodes of the podcast have been released, but I’m hooked. And one of the things that got me thinking was how these people paying for murders were actually basically shouting into a digital void—no one was going to respond to their hits. The admins ignored them and no one else knew, until the investigators came around.

Another story came across my radar this week, and it’s also a grim one. Muah.ai, an “uncensored” AI companion site, was hacked and had its database of user-created chatbot prompts released. The database contains a *lot* of prompts trying to create sexbots of underage children, along with the emails of the people who wrote the prompts. It’s unclear whether the site actually let people create these bots (the site claims that its moderation team deletes “ALL child related chatbots”), but the fact remains that people tried to create them. And these people clearly didn’t expect the prompts they tried to use to be released; they thought they could scream their pedophilic desires into the void and not get caught.

Yeah, not so much.

Both of these cases show what happens when the echoes of a scream into a void—whether the person knew that’s where they were shouting or not—bounce up into someone else’s ears. Sometimes this is a positive thing. While I don’t know how the podcast ends yet, so far it’s seemingly good that people are getting notified that they’re in danger so that they can protect themselves. Obviously it’s not good for the would-be killers or pedophiles, but they’re the ones putting people at risk.

Sometimes, the person shouting into the void is the one at risk. A student in Texas wrote a suicide plan in a Google Doc in white text so that no one could see it, but school administrators were alerted by the school’s device-monitoring software and intervened. In this case, it was also a good thing that someone heard him.

To me, this all raises the question: how much of a void do we want to leave on the Internet? It’s good to have anonymous places to vent online; half of Reddit exists for this very purpose. But sometimes, we need to make connections between what’s being expressed online and what’s going on offline. The question is how to do this without compromising privacy, and there’s no easy answer to that: a theme that repeats in this newsletter time after time is how to make the Internet safe but give people enough autonomy. If I knew the answer… we wouldn’t be here; I’d be running the Internet. But until we figure it out, be aware that when you shout into the void, there’s a chance it’ll respond.

I Reckon…

that having *fewer* workers at Boeing doesn’t make me feel any better about their planes.

Thumbnail generated by DALL-E 3 via ChatGPT with a variation on the prompt “Please generate an abstract brushy Impressionist painting representing screaming into the void in a palette of grays and blues”.