Welcome back to the Ethical Reckoner. We’ve already had our longread for the month (catch up below if you missed it), so even though somehow it is the last Monday of the month, today we’ll look at AI poetry (brought to you by: coal!), a new Large Geospatial Model (brought to you by: Pokémon Go!), and a possible Google Chrome sale (brought to you by: the DOJ!). Then, my thoughts on Wicked and technocracy.

ER 32: On women’s communes and the 4B movement

This edition of the WR is brought to you by… Thanksgiving.

The Ethical Reckoner will be off next week. Enjoy the holiday if you celebrate, and give thanks even if you don’t.

The Reckonnaisance

People prefer AI poetry… because they don’t get poetry

Nutshell: A study found that people find AI-generated poetry indistinguishable from human poetry and tend to prefer it, because it’s simpler to understand.

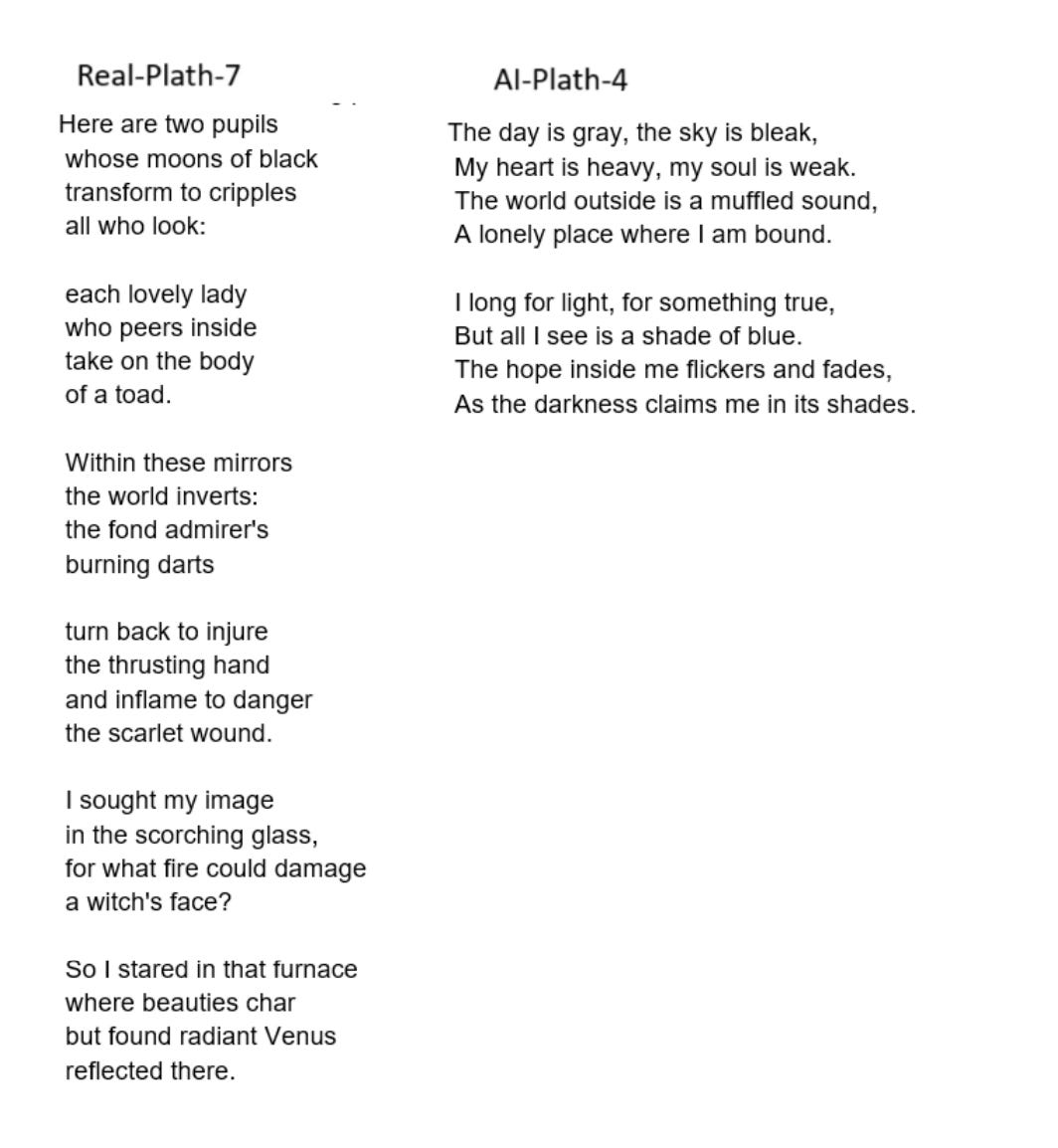

More: The study compared five poems by a selection of named poets (among them Chaucer, Shakespeare, Dickinson, and Whitman) with five imitations generated by ChatGPT. People did slightly worse than chance at telling which poems were by ChatGPT and which were by humans, tending to guess that AI-generated poems were by humans and vice versa. People also tended to rate AI-generated poems higher on metrics like “imagery,” “lyrical,” and “profound.” But this is likely because the AI-generated poetry is more simplistic.

“Because AI-generated poems do not have such complexity, they are better at unambiguously communicating an image, a mood, an emotion, or a theme to non-expert readers of poetry, who may not have the time or interest for the in-depth analysis demanded by the poetry of human poets. As a result, the more easily-understood AI-generated poems are on average preferred by these readers, when in fact it is one of the hallmarks of human poetry that it does not lend itself to such easy and unambiguous interpretation.”

Why you should care: This is an excellent illustration of the tension between what we want and what we need. We might want poetry to be easy to understand, but that’s not the point of poetry. And as AI develops, we’re going to face more cases like this, so we have to reconsider our metrics. For instance, I learned at our Venice conference that doctors who use AI to help them with notes actually spend more time on notes but feel more satisfied with their jobs as a whole, indicating that it may ease the process and help them do a better job.

AI energy demands being met with fossil fuels

Nutshell: Power demand from AI is outstripping the development of renewables, so power companies are using more natural gas and coal.

More: You know that AI poetry? It requires a lot of energy to train the models that create it, and then yet more power to run the algorithms when you ask for a sonnet about a peanut butter sandwich or something. As a result, data centers are power-hungry beasts: by the end of the 2020s, they are projected to have emitted the equivalent of a year of Russia’s CO2 emissions. Tech companies are trying to go carbon-neutral, but nuclear deals and other renewables will take time, so power companies are using natural gas and even coal to fill the gap.

Why you should care: Well, climate change is bad. And people love to claim that AI will solve climate change, but we can’t just snap our fingers and have technology fix a problem we’ve created. It will take time, and if we’re making the problem worse in the meantime and our proposed solution doesn’t pan out, we’ll have left ourselves in a worse situation than we were in before.

Pokémon Go data used to train AI

Nutshell: Remember Pokémon Go? Niantic is using player-created scans to build a “Large Geospatial Model” to help machines with spatial understanding.

More: Niantic is careful to specify that just walking around using the app doesn’t train AI models, but when people upload scans of real places, Niantic uses them to train a “Large Geospatial Model.” Niantic claims “widespread applications” including gaming, “spatial planning and design, logistics, audience engagement, and remote collaboration”; how effective it will be is TBD.

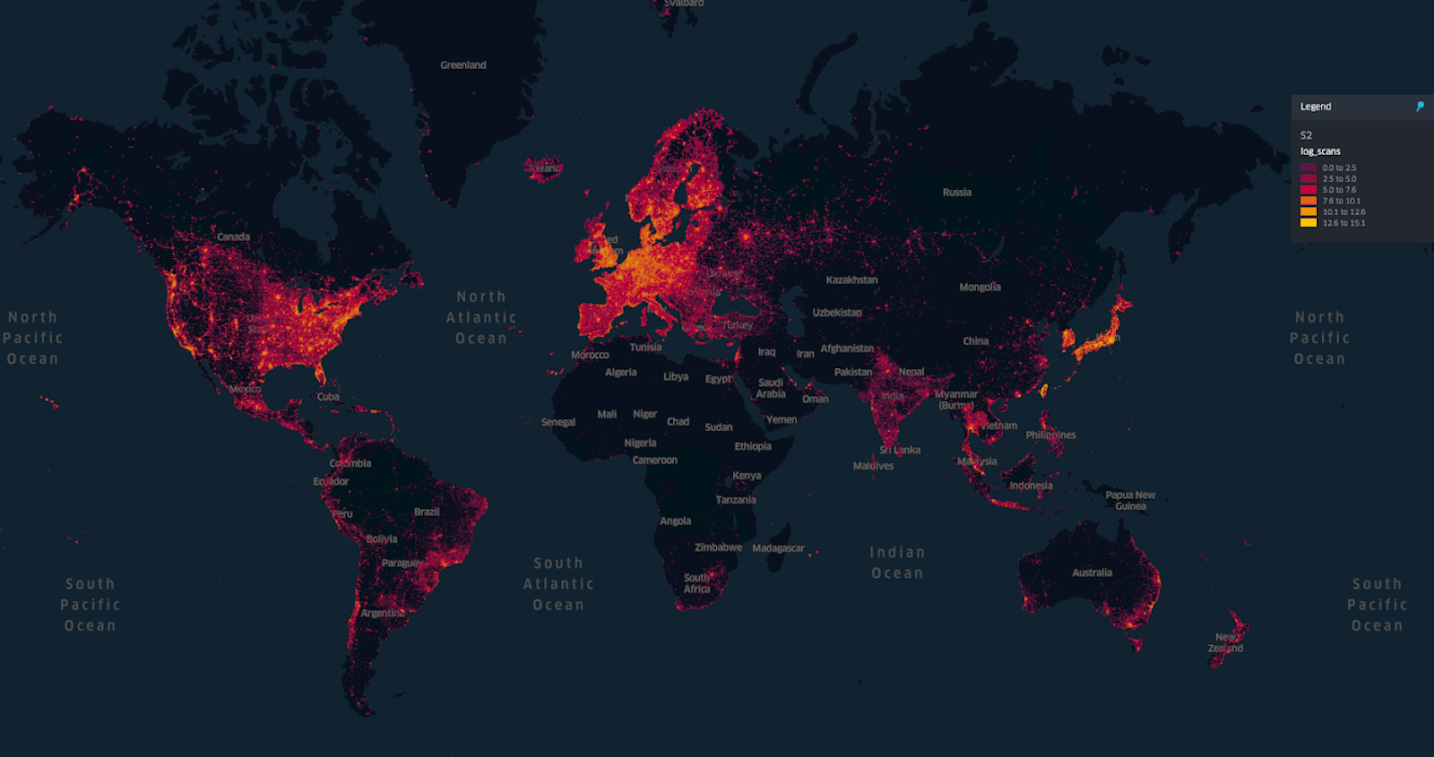

Why you should care: A “Large Geospatial Model” could be useful in smart and AR glasses and elsewhere by giving models a more human-like way of understanding the world. This also shows how AI companies are racing to find pockets of data they can use to train models (sometimes with dubious consent). Here, my worry is that while Niantic has 10 million scanned locations, they are not evenly distributed around the world, which increases the possibility of bias in the model. Perhaps it learns to recognize structures built in typical Western styles but not, for instance, African Vernacular Architecture, because those structures haven’t been scanned.

It’s official: the DOJ wants to break up Google

Nutshell: The DOJ asked that Google be forced to sell Chrome and possibly Android after Google was ruled to be a search monopoly.

More: This case was filed in 2020 and was only decided in August, with a ruling that Google holds an illegal monopoly over online search and text advertising. Ahead of a remedy trial in March, the DOJ has proposed forcing Google to sell its Chrome browser, prohibiting it from paying smartphone makers to be the default search engine (foreclosing its $18 billion/year payments to Apple), and, perhaps most significantly, requiring it to open its data to rivals, which could substantially increase competition. Google, unsurprisingly, is not happy, calling the proposal “staggering” and a “radical interventionist agenda.” One of its concerns is that it would “chill our investment in artificial intelligence,” which suggests Google is afraid the remedies would cost it market share in AI search as it ramps up its efforts there.

Why you should care: You probably use at least one of Google’s services or products, and this case could substantially change what your interactions with them look like. However, it’s unclear who could actually buy Chrome, since anyone with the resources is probably a large tech company that would then be entrenched as the new search monarch, unless it goes to a private equity firm. Quoth M.G. Siegler: “What could possibly go wrong there?” A lot of this is very uncertain: a ruling isn’t likely until next summer, and appeals will drag on for ages after that, so watch this space.

Extra Reckoning

Spoilers ahead for Wicked (book, musical, and movie)

I’m not a movie person, but I saw the Wicked movie over the weekend with two dear friends. It’s one of the most influential musicals of the last several decades, so of course they adapted it for the screen (and of course they split it into two parts). Still, I’ve always loved Wicked: it was one of the first musicals I ever saw, and I have lots of fond memories around it. And I loved the movie. It’s visually stunning, the choreography is amazing, and Cynthia Erivo and Ariana Grande are incredible. The movie expands the musical and adds more content from the book, which helps remind the viewer that it is in fact a story about fascism, written in the aftermath of the first Gulf War, and that its source material was itself written as an attack on US President McKinley, whom author L. Frank Baum apparently hated.

I’m not enough of a political scientist to do justice to the political themes of the story, but I did want to take a moment to think about technocracy. Wicked the stage show and Wicked the movie both have some incredible set pieces. These include the massive clock that opens onto Oz, but also the Wizard’s giant puppet head and the train to the Emerald City.

They have a sort of steampunk aesthetic with lots of brass and gears (much of which was actually built, not CGI), and it’s clear that the wizard uses technology because he lacks magic, which he’s trying to harness through Elphaba. You could see it as a battle between technology and nature or a rant against technosolutionism (which I’ve written about before), but I actually think it’s about running up against the limits of technology.

ER 15: On Ozempic, Diet Culture, and Robobees

The wizard needs spies, but he can’t build his own; he doesn’t have the technology. Instead, he needs Elphaba to use her magic to create flying monkeys. And Elphaba, eager to prove herself to a man who has been propagandized into a borderline deity, is tricked into doing so. While Madame Morrible can use her magic to amplify her voice to address the monkeys, the wizard has to use his steampunk PA system.

What can we draw from this? For one, technology doesn’t achieve anything in a vacuum. I’m not saying tech is neutral, but that it requires human designers and (most of the time) users to exert its power on the world. And sometimes, technology isn’t enough. Just like the wizard needs magical people to enact his agenda, sometimes we need a human element. Take the poetry story above: ChatGPT can write something that looks like a poem, but it lacks the depth and nuance that make poetry profound and, yes, difficult. For now, poetry remains a fundamentally human activity. To tie it back to the political allegory of Wicked, it’s also a caution not to let the arts and other human efforts fall into the service of fascism.

Anyways, someone get the Wizard some Woolf.

I Reckon…

That Yale should beat Harvard more often (oh wait, this is three running) 😎

Thumbnail generated by DALL-E 3 via ChatGPT with the prompt “Please generate an abstract impressionist painting of the concept of wickedness in shades of green”.
