Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we cover LA fire misinformation and the latest in the TikTok ban saga. We’ve also got two pieces of good news (the UK is outlawing explicit deepfakes and some researchers dug up 1.2 million-year-old ice) to shore us up for the Extra Reckoning, where I finally talk about Meta’s big pivot on fact checking, hate speech, and DEI.

This edition of the WR is brought to you by… the MetroNorth

The Reckonnaisance

LA fire misinformation spreads online

Nutshell: As 130,000 people evacuate and thousands of buildings burn, conservative voices are spreading conspiracies about the disaster.

More: According to these commentators (including Elon Musk and Donald Trump), these fires were so bad because of:

A nonexistent “water restoration declaration” that Governor Newsom allegedly refused to sign

DEI

Smelt protection

A “Larger Globalist Plot To Wage Economic Warfare & Deindustrialize The United States Before Triggering Total Collapse” (that one was Alex Jones)

Fund misappropriation

Diddy, somehow?

Rolling Stone did a great job debunking most of these, but it’s alarming that “they burned down the Palisades to destroy evidence of Diddy’s secret tunnels and then the fire chief couldn’t handle it because she’s a lesbian” is a more appealing theory than “climate change exacerbated the fire risk in LA, extreme Santa Ana winds wouldn’t allow for aerial firefighting at first, and there was just too much freaking fire for any fire department to contain.”

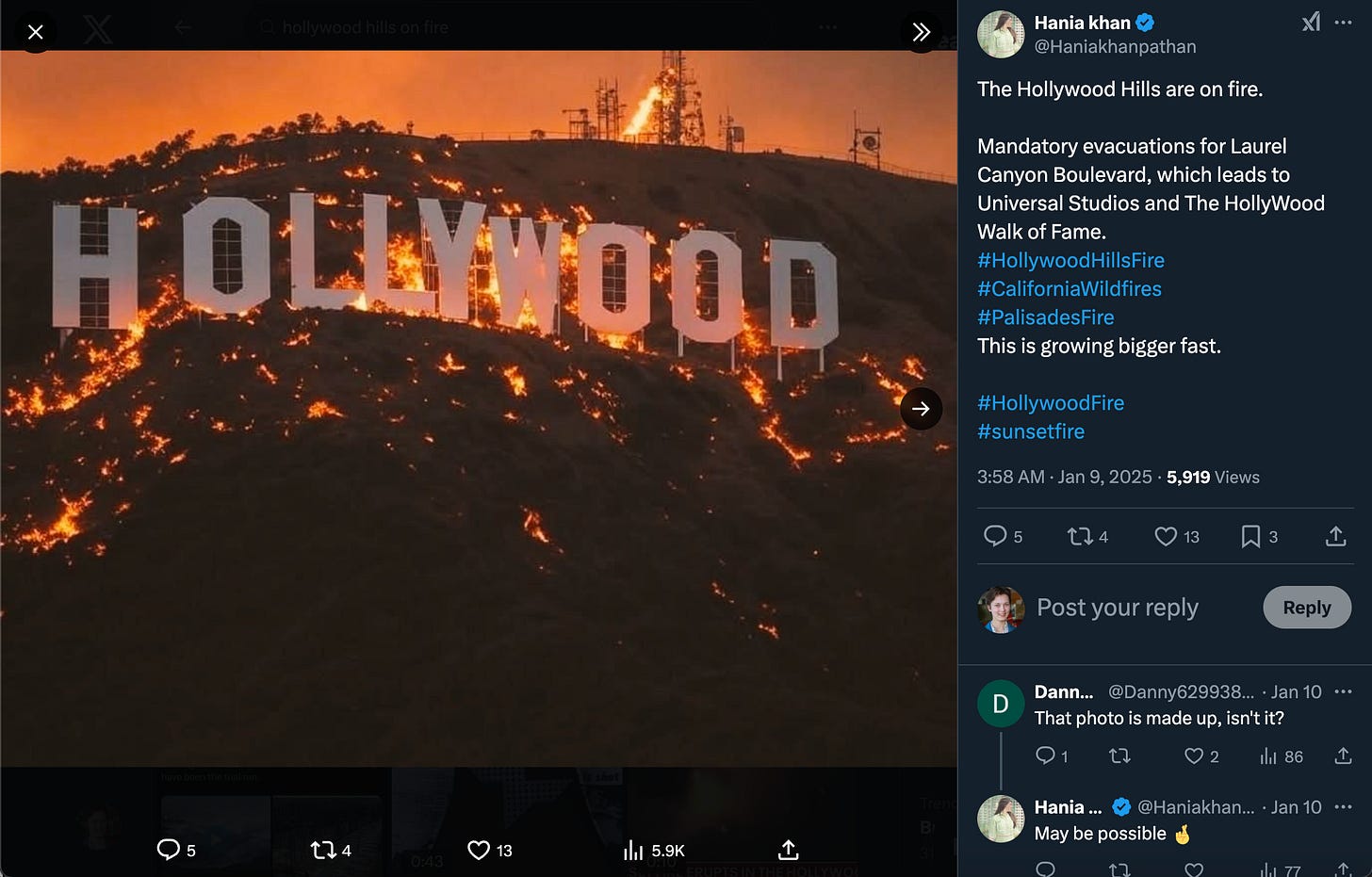

Why you should care: I feel like every WR these days involves me marveling at how much misinformation is spreading online, but this was the first big news event where everywhere I looked on social media, I saw misinformation. In real time, I saw a photo of the Hollywood Hills sign on a fiery hill posted with a note that it was AI-generated, and then the picture was immediately picked up and spread by accounts who did not make that disclosure. But beyond the real risks of misinformation—this is genuinely muddying the waters—I want to highlight two things:

This is also stoking the partisan culture war around DEI and immigration and environmental policy.

Nonprofit and community tech really came through (as did the other states and Canada that sent firefighters and planes).

UK to outlaw explicit deepfakes

Nutshell: The UK is planning to make the creation and sharing of sexually explicit deepfakes a crime.

More: The creation and spread of AI-generated non-consensual intimate images, or deepfakes, has ruined a lot of lives. Highly realistic fake nude photos and videos are spreading across the internet and through schools. They’re as easy to create as uploading a picture to an app, but their harm is visceral and indelible. Criminalizing them is an important first step to protecting all of us, but particularly women and girls, online.

"Intimate-image abuse is a national emergency that is causing significant, long-lasting harm to women and girls who face a total loss of control over their digital footprint, at the hands of online misogyny.” - Jess Davies

Why you should care: It could be you. And if you’re in a country that doesn’t criminalize this, there’s nothing you’ll be able to do. In the US, some states criminalize nonconsensual explicit deepfakes, but often just as a civil offense. This puts the onus on the victim to sue the perpetrator(s), but if they can’t be identified—or if they can be but they live out of state—the laws won’t help.

TikTok fights for its life as wolves circle

Nutshell: The US Supreme Court seemed inclined to uphold the imminent TikTok ban, but people are lining up to buy it… if ByteDance will sell.

More: The “divest or ban” requirement goes into effect on January 19th, one day before Trump’s inauguration. TikTok’s parent company, ByteDance, has been extremely reluctant to sell (perhaps because the Chinese government is extremely reluctant to let them). And while there’s no evidence that national security concerns around TikTok have been realized, the government’s argument that the ban is comparable to its existing interest in restricting foreign ownership of media outlets seemed persuasive to the Court. If the ban goes into effect, the app wouldn’t be wiped from devices, but it would probably be removed from app stores, and the experience for people who already have it downloaded would probably degrade and get buggy over time; any geolocated restrictions could be easily skirted with a VPN.

Why you should care: Chances are you or someone you know uses TikTok, potentially too much. I’m of the opinion that TikTok’s addictive quality is just as concerning as national security risks. Any sale would come without its recommendation algorithm (because it’s ByteDance’s IP, not TikTok’s) so a re-constituted US TikTok might not be as addictive (although ByteDance has published a technical paper about the recommendation system so it could potentially be reverse engineered). Still, an algorithm change would definitely impact creators, who may find that their content isn’t recommended as much or to the same audience as before. All in all, things are not looking great for TikTok—or its users.

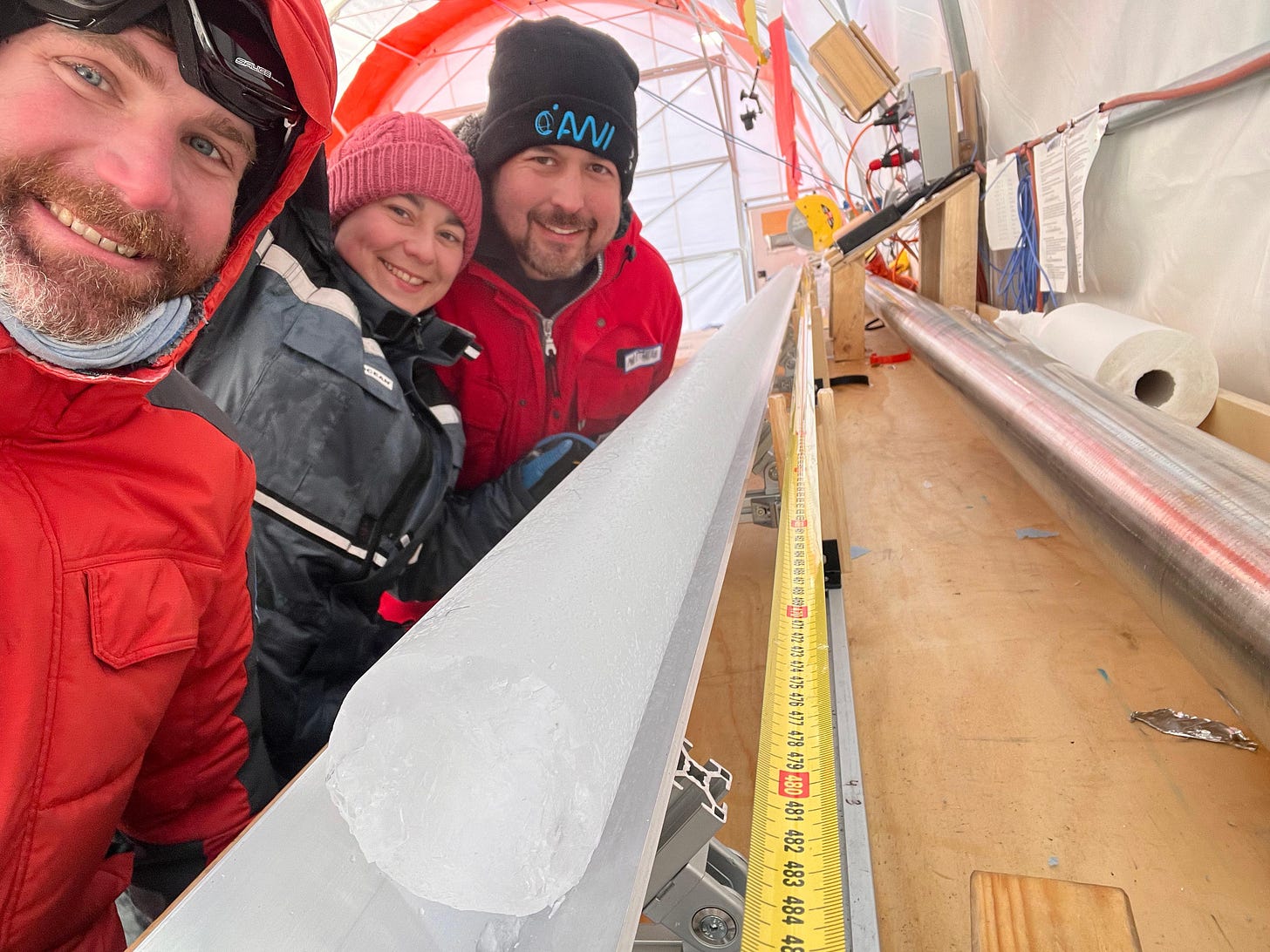

World’s oldest ice core drilled

Nutshell: An international team extracted a 1.7 mile/2.8km ice core from Antarctica, containing 1.2 million-year-old ice that will help show how the Earth’s climate has evolved.

More: The team drilled over the course of four Antarctic summers until they hit bedrock. My favorite quote from an outside scientist was from Richard Alley, a Penn State climate scientist, who said, “This is truly, truly, amazingly fantastic. They will learn wonderful things.” And by “they” he means “all of us.” Note that while I have seen this billed as “longest continuous ice core,” I have it on good authority that they cut the core into 1-meter segments as it was extracted, as there is no 1.7-mile-long freezer anywhere that I’m aware of.

“Thanks to the ice core we will understand what has changed in terms of greenhouse gases, chemicals and dusts in the atmosphere.” - Carlo Barbante

Why you should care: I love good news from other scientific disciplines, and while this one ice core won’t revolutionize climate science, it’s going to be very cool to see what comes out of this. Plus, this team deserves praise—research in Antarctica is incredibly hard work (in terms of the science, the isolation, and the lack of protection for women), and so doing this for four summers is remarkable.

Extra Reckoning

Ok, it’s time to address the elephant in the room. Yes, Meta is ending fact checking on its platforms. Yes, Meta changed its hate speech policies to allow the targeting of LGBTQ people and other groups. Yes, Meta ended its DEI programs.

In case this hasn’t been consuming as much of your headspace as it has mine, here’s a quick rundown of the new policies for Facebook, Instagram, and Threads.

No more fact checking in the US. Meta had a global network of fact-checking organizations that it started working with after allegations of Russia using Facebook to influence US voters in the 2016 elections. Joel Kaplan’s blog post stated that the program was used as a “tool to censor” (even though posts that were factually incorrect about specific topics were only flagged, not removed) and that Meta will move to a “Community Notes model” like the one X uses. Meta will continue fact checking in other countries (for now), some of which require it by law.

No more automated content moderation, except for illegal content. Before, if you posted something that Meta’s algorithms determined was likely against the rules, like a slur, the system would automatically flag or filter it. Now, they’ll rely on users to report violations.

No more prohibitions on some abusive speech. For example, you can now call gay and trans people mentally ill, call women property, and insult entire racial and national groups.

No more DEI. Meta is cutting its diversity, equity, and inclusion team; ending representation goals; and kiboshing efforts to source a diverse pool of candidates for open positions.

No more content moderation teams in California, because of “bias.” Now, they’re all going to be in Texas.

More “personalized political content” in feeds, if you want it.

Yes, this is all Bad. Really Bad. How? Let me count the ways.

1. This is bad for LGBTQ people, immigrants, ethnic minorities, and children.

I’ll start with the obvious. These changes are a complete abdication of responsibility for a company that has billions of users worldwide, many of whom can now be explicitly targeted with aggressive and dehumanizing speech. Meta’s policy literally opens with:

We believe that people use their voice and connect more freely when they don’t feel attacked on the basis of who they are. That is why we don’t allow hateful conduct on Facebook, Instagram, or Threads.

And then states “We remove dehumanizing speech.” However, under its new policies, you’re explicitly allowed to post “Mexican immigrants are trash!” and “A trans person isn’t a he or she, it’s an it.” (These are examples that Meta itself came up with.) Comparing someone to trash is literally dehumanizing, and as Casey Newton put it, “It is hard to imagine speech more dehumanizing than to tell someone that they have no gender and are an ‘it.’” At the end of his announcement video, Zuckerberg says, “Stay good out there.” That is not what these guidelines encourage and is not a workable content policy.

These policy changes are going to lead to more harassment and hateful discourse, and not just in the US. Meta platforms have hosted and promoted content that facilitated ethnic cleansing in the past, and while the guidelines claim to protect people based on ethnicity and national origin, the examples of permitted content show that there’s going to be a lot of latitude to attack those groups. On top of this, these policies apply to children too. Queer and trans and immigrant kids are going to face more bullying, and we know what happens when kids get bullied.

2. This is bad for the political discourse.

These changes have widely been seen as a capitulation to the ascendant American right, which has been attacking DEI, social media “censorship,” immigrants, and trans people for years. Last week, we talked about how Meta has promoted a long-time Republican staffer to run their global policy team, and how company after company is making overtures to the Trump administration. But this is the furthest any company has gone—they had an executive discuss the changes on Fox & Friends, which Trump is known to watch. This is all likely an attempt to position themselves favorably with the White House, avoid antitrust and targeted investigations, and give themselves the best chance to prevail in the AI race. But regardless of motive, this will negatively impact political discourse, and not just in the US.

Getting rid of fact-checking wouldn’t be such a big deal if Community Notes were effective at countering misinformation. Community Notes sound appealing—why not let the community police itself? Maybe people will believe their peers more than preachy fact checkers. Twitter’s Community Notes certainly led to some amazing Internet moments. The thing is, there’s no empirical evidence to support that Community Notes are effective in harm reduction, but there is evidence that Community Notes users are motivated by partisanship and that notes take longer to appear on viral content than traditional fact checks. Plus, “many Community Notes currently cite as evidence fact-checks created by the fact-checking organizations that Meta just canceled all funding for.” Finally, fact-checking is getting sunsetted in March, but the notes system will take longer to roll out, so Meta may have a “fact-free spring” in the US. It has to be said that no, the fact checking system wasn’t perfect, even though Meta had fact checkers from across the globe and political spectrum. But it was something, and coupled with other trust and safety efforts to promote fact checks, could have been even better. Regardless, getting rid of it will likely be worse. Right now, people don’t trust the media, and more and more people are getting their news from social media. If Community Notes cause more misinformation to enter the media ecosystem, that will probably only make that problem worse. But I would love to be proven wrong.

Then, there’s the fact that in making these changes, Meta is painting a target on the backs of vulnerable communities. The new “policy rationale” explicitly says that people sometimes “call for exclusion or use insulting language in the context of discussing political or religious topics, such as when discussing transgender rights, immigration, or homosexuality… Our policies are designed to allow room for these types of speech” (emphasis mine). Zuckerberg claimed that their old hate speech rules—which did not explicitly allow for the harassment of LGBTQ people and immigrants—were “out of touch with mainstream discourse” and that Meta is returning to its “roots” of free expression. Zuckerberg has compared Meta’s platforms to “the digital equivalent of a town square,” which seems to be the goal. Whether or not we agree with this, it’s their characterization. And yes, anyone is free to go to the town square and shout about anything they want. But just because the preacher with a few screws loose is shouting that the aliens are coming to begin the Rapture doesn’t mean that we should encourage everyone else to shout about how the aliens are coming to begin the Rapture. In creating a fact-free zone where people can be aggressively harassed based on their identities, and explicitly calling out gender identity and immigration status as things that can be targeted, Meta is taking a side in the American political discourse and blowing not just a dog whistle, but one as loud as my camping whistle to that side. They’re also imposing this particular facet of American politics onto the entire rest of the world.

Debates about gender identity and immigration are not unique to the US, but the way Meta frames it—“It’s not right that things can be said on TV or the floor of Congress, but not on our platforms”—explicitly links it to American political and First Amendment discourse, even though Meta’s platforms are global and different countries have different definitions of “free speech.” These debates are rancorous, will become more so with fewer facts and more hate speech, and now may spread to the rest of the world as well.

3. This may even be bad for Meta itself.

These announcements came as a surprise to basically all of Meta, and they’re impacting trust in the company. Zuckerberg reshaped these policies with input from “no more than a dozen close advisers and lieutenants” during a six-week sprint over the holidays. The rest of the company was just as surprised as we were when they announced it, and while some employees are ok with the changes, it’s apparently “total chaos internally” and employees, especially the LGBTQ+ community, are feeling unsupported and betrayed. Culture change can’t be dictated from the top down, and while some employees will probably leave, the reality of the tech populace is that most of them don’t and won’t support this realignment. There’s also a lot of internal confusion about why this was done in secrecy without consulting anyone from the policy team. External experts are also confused. Normally when Meta is considering big policy changes, they consult external academics, policymakers, and other stakeholders for advice and to assess the potential impacts of any changes. I’ve been part of a couple of these, and they’ve been detailed, thoughtful processes that consciously bring in a variety of voices for careful deliberation. None of that happened here, and it seems like the rushed creation of the new speech guidelines has created confusing and contradictory rules.

I also think that this will continue to be a liability for Meta as they develop new products. Last week, news broke that Meta is planning to create more AI-powered profiles to populate its platforms. By Meta’s new definition of “censorship,” these bots are sure to be “censored.” No PR-minded company would unleash official company-created profiles that even slightly approach what’s now permitted speech. Can you imagine the headache it would cause if Meta AI bots started posting “women are the property of men”? Even though Meta is permitting (and essentially promoting) this through their policies, there’s a distinction between allowing/encouraging other people to engage in this speech and doing so yourself, so they would never. But then this creates a situation where Meta seems like hypocrites for creating “censored” bots while promoting “free speech” for everyone else, and another front for people on the right and left to come at them.

The final way this would be bad for Meta is if users leave its platforms. Fewer users, fewer ad dollars, less revenue. But users leaving would be bad not only for Meta’s bottom line, but also for our social media ecosystem as a whole, and that deserves its own section next.

4. This is bad for our social media ecosystem.

It increasingly seems like we’re entering an era of bifurcated social media systems. On the one side, you have the platforms ruled by god-kings (X and, increasingly, Meta’s Facebook/Instagram/Threads). On the other, you have the new decentralized platforms, like Bluesky, Telegram, and Mastodon. TikTok falls somewhere in the middle, but it might soon be a non-factor. Then we also have a schism within platforms between the US and areas that require content moderation and fact-checking, like the EU. User experiences will now be dramatically different. And if people (specifically liberal users) react to this by leaving Meta platforms, we’re not only going to have algorithmic silos, but wholly separate social media ecosystems. Some platforms will be for liberals, some for conservatives, and never the twain shall meet. It goes back to what I wrote about in the very first Ethical Reckoner about how far-right social media sites like Parler and Gab were not just echo chambers, but echo palaces. In an echo chamber, you can hear what’s going on in the chambers around you, and you can go across the hall to see what’s going on. In an echo palace, you’re surrounded by aligned discourse, and there is nothing else to hear. If our social media platforms fragment along US political lines, we’ll have fewer opportunities for discussion, and political discourse will only deteriorate.

This has been a long Extra Reckoning. Thanks for bearing with me. I’d like to close on a happy note, but I’m feeling profoundly demoralized by this. If we’re entering a new era of fragmentation and platforms shifting with the political winds every four years, it’s honestly going to make the work that my research community and I do much harder. Next week, I’m planning to have some constructive ideas for how to move forward. But I don’t want to make this all about me. These new policies are going to cause genuine harm to many people, and that should be foregrounded. I will say, though, that what I’ve seen from my communities online has been heartening. We exist to research technology, mitigate its negative impacts, promote its positive ones, and change it for the better. We will keep doing that work. We are ready.

I Reckon…

(on a much lighter note) that AI litter boxes are the pinnacle of technological achievement.

Thumbnail image generated by ChatGPT with the prompt “Create an abstract impressionist image in shades of blue and white representing the concept of institutional change”. Thanks to my alpha readers for their excellent feedback.
