ER 26: On curling and AI governance

Or, gentleman’s agreements didn’t work for “the roaring game,” so why should we expect them to work for AI?

Welcome back to the Ethical Reckoner. If you’re new around here, you’ve joined us just in time for the latest edition of this newsletter’s longform piece, where I connect the dots between topics in tech ethics. This comes out the last Monday of every month. All the other Mondays, you’ll get an edition of the Weekly Reckoning, my rundown of the tech news you need to care about.

This edition of the Ethical Reckoner is brought to you by… Boston, which I didn’t give nearly enough credit to when I lived here.

Today, I want to talk to you about curling. Yes, curling, the sport that you remember exists every four years during the Winter Olympics when you watch it in fascination for a few minutes before flipping over to the figure skating or luge or ski jumping. More specifically, I want to talk to you about what AI governance can learn from the biggest scandal curling has ever known.

Stay with me here.

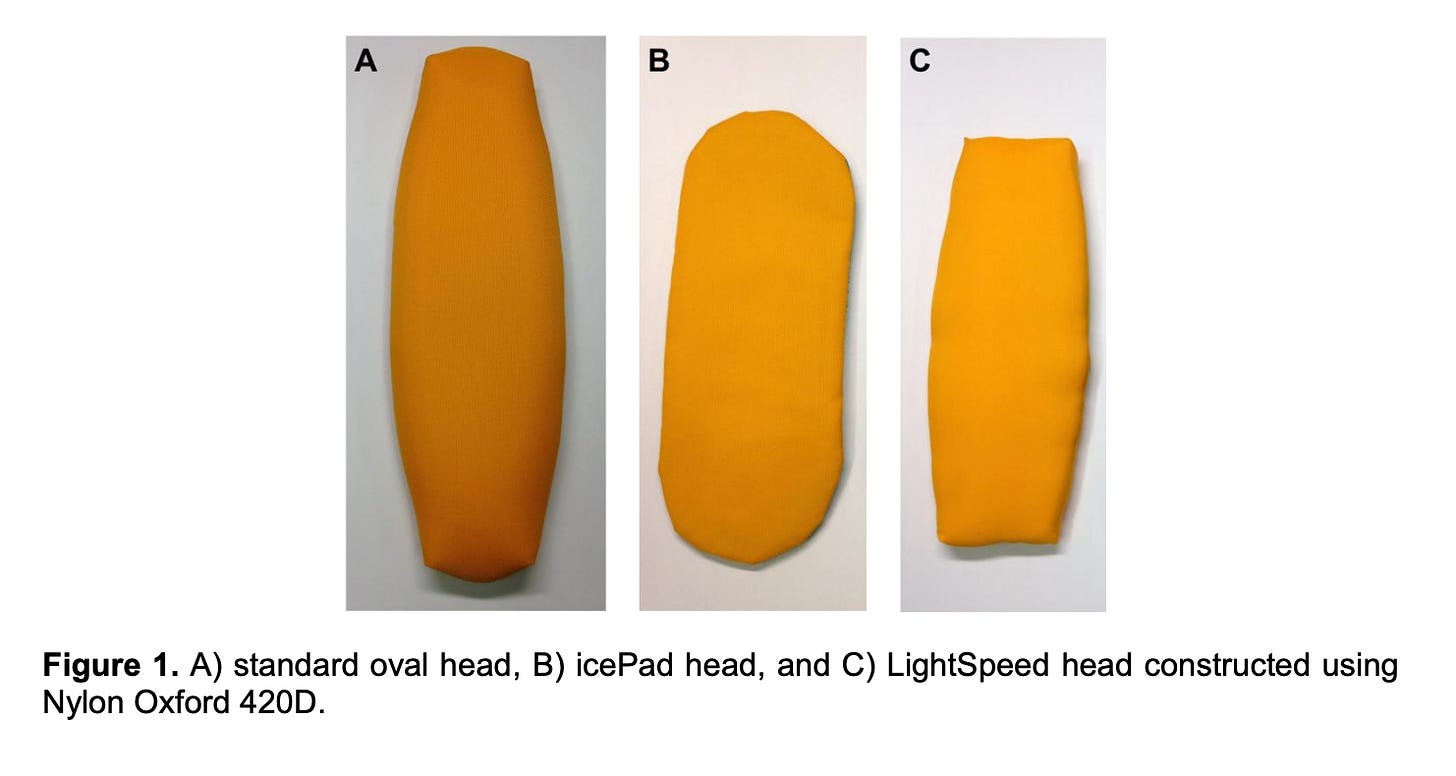

Curling is played in teams of four: one thrower who shoots the rock down the ice, two sweepers who sweep the ice with Swiffer-like brooms to guide the rock, and a “skip” who calls strategy. It’s generally a very convivial, close-knit community, especially in Canada… until 2015, when a new kind of broom came on the market. The broom used a mystery “directional fabric” on its pad that let sweepers control the rock “with ‘joystick’ precision,” and it tore the Canadian curling community apart. The new CBC podcast “Broomgate: A Curling Scandal” describes the scandal in fantastic fashion. There were two companies making the controversial “superbrooms”—the “icePad” and the “Black Magic”—and there were tensions between the teams that used the different companies’ brooms, and also with the teams that used traditional brooms. The new brooms actually scratched up the ice, and other players complained that they were too good, that they were taking the skill out of throwing, that sweeping was becoming too easy, and that the brooms were ruining the ice for the other team. One team even started using just one sweeper to demonstrate how ridiculously advanced the superbrooms were.

Everything came to a head in a “bonspiel” (curling tournament) at High Park curling club in Toronto on Thanksgiving weekend of 2015. Things got so rancorous that the official in charge, Jerry Gertz, “couldn’t take it anymore” and stopped the tournament. He dragged all the skips into a meeting room and made them hash it out face-to-face. Curling doesn’t have referees, so the only thing that could be done was a “gentleman’s agreement” that anyone with a “superbroom” wouldn’t use it.

“Some teams are accusing other teams of cheating, but there’s no cheating because there’s no rules.” - Brad Gushue, curling gold medalist

(Actually, this sport is kind of amazing.)

This broke down almost immediately. The two teams who had been at the center of the controversy, one using each superbroom, ended up facing off in the finals. The icePad team stuck to the gentleman’s agreement and used their old brooms, while the Black Magic team kept using their superbrooms—not in a dastardly attempt to steal the tournament, but because they wanted to show once and for all that the brooms were ruining the sport. Over the next three and a half hours (which exceeds my attention span for just about anything, but apparently it was gripping), the two teams did battle, with the Black Magic brooms chewing up the ice and the other team repairing the ice after each shot. In the end, the Black Magic broom caused its own downfall, because a game-winning shot turned into a game-losing shot when the stone hit a track that the superbrooms had carved into the ice and went off course. Even though the Black Magic team lost, they had shown that the superbrooms were a risk to the sport. The match ended without the traditional post-game beer (the horror!) and everyone went home feeling generally dreadful.

News of this spread around the curling world, and the World Curling Federation held a “Sweeping Summit” (with the National Research Council Canada’s[1] “Security and Disruptive Technologies” division) where they concluded that directional sweeping brooms were too powerful and that going forward, a standardized fabric would be adopted for all brooms. The communications director for Curling Canada said, “It was unanimously agreed that the situation was not acceptable. Curling was meant to be played a certain way and that’s basically what everybody agreed.” And from what I can tell, it’s pretty much returned to that, and Stephen Colbert has had to find other things to make jokes about.

At this point, you’d be excused for wondering what exactly we’re doing here. Yes, this is a tech ethics newsletter. So now, let’s talk about AI. Right now, everyone is discussing AI governance: who sets the rules for AI, what should they be, and how should we enforce them? Different countries are setting different rules. The EU just adopted an AI Act laying out rules for “high risk” AI and China is passing laws regulating AI in specific sectors (like generative AI, recommendation algorithms, and facial recognition). The US, on the other hand, is struggling to pass any concrete legislation. What we have instead is a patchwork of a few executive orders that guide how AI is used in the federal government, a couple of bills encouraging AI development, and some voluntary commitments from big technology companies that they won’t let their AI products be dangerous. The US is not known for its proactive regulatory approach, especially when it comes to technology—the common line is that “regulation stifles innovation,” even though it’s not that simple—and AI is proving no different. We’ve released lots of promising documents, including the Blueprint for an AI Bill of Rights, but nothing is enforceable.

One might even call these gentleman’s agreements. But, like in curling, they’re not working. The developments of the last couple of weeks are showing that. Google promised that their products would be “safe before introducing them to the public,” but their new AI search overviews are telling people to eat rocks and add glue to their pizza sauce to make cheese stick. OpenAI has promised to respect people’s rights, but it released a Her-like AI voice that sounds scarily like Scarlett Johansson, even after the company asked her if she wanted to be the voice actor and she said no. Now OpenAI has pulled the voice, and lawyers are sending letters. (OpenAI’s latest model, GPT-4o, also claims to recognize emotion, a practice that is explicitly illegal in places of work and education in the EU.) Corporate self-governance is difficult: In a blog post earlier this year, OpenAI said that it wanted there to be a “no-go voice list that detects and prevents the creation of voices that are too similar to prominent figures,” but apparently this hasn’t been implemented. The whole ScarJo scandal seems to be a spectacular case of corporate miscommunication where a team within the company recruited a voice actor to be the “Sky” voice, and then CEO Sam Altman separately pursued Johansson, but this shouldn’t be an excuse, and it’s problematic for a myriad of reasons. The voices don’t sound exactly the same, and OpenAI was looking for “voices that were warm, engaging, and charismatic,” which is probably what the filmmakers behind Her were going for. I’ve written a bit about why the OpenAI Sky voice is problematic, and Altman literally tweeted “her” the morning of the release, so it’s clear that the inspiration was in their heads. Regardless, at least one reason that they’re similar is that the movie and OpenAI were trying to embody the same stereotype—the flirtatious female assistant.

Anyway, there’s a myriad of reasons why we should regulate AI, and not just generative AI. People are being wrongfully arrested based on facial recognition misidentification. AI is in your credit score, your loan approval, your social media feeds. AI could generate disinformation like we’ve never seen. Our data feeds AI, and the US has no federal data privacy law. It’s too weighty an issue to expect companies to self-govern. Indeed, many of them say they want responsible and proactive regulation, but Congress unsurprisingly hasn’t been able to pass anything. Instead, the US is left with a patchwork of executive orders and voluntary commitments in the face of a head-spinning pace of development.

Some things are not built to cope with the modern era, such as our legislative process. We can’t blame it; who could have thought when we were outlining our government over 200 years ago that we’d be faced with such a rapidly iterating technology? “Technology moves too fast for governments to cope” has long been an argument from companies for prioritizing self-regulation, but other governments have found ways around it. Granted, EU lawmakers had to scramble to adapt the AI Act to generative AI when ChatGPT dropped (which also caused China to pass a whole new law on generative AI after it had just passed one on “synthetic content”). But the best legislation focuses not on technologies themselves but on their impacts, which means we can regulate AI. We just can’t let ourselves get fazed by the progress it’s making.

Curling has existed at least since 1541 with brooms of hair and twigs. No one could have envisioned that, centuries later, new broom technology would be jeopardizing the entire sport. But, when push came to shove, the sport tried to solve its problems. Sometimes, though, as charming as they are, the old ways just don’t work. Handshakes and gentleman’s agreements are inadequate when one party is sufficiently motivated to break them, whether out of concern for the future of a sport or the billions (perhaps trillions) of dollars at stake in AI.

Other sports have faced this as well. Full-body neoprene swimsuits were banned by FINA (the world governing body of swimming) in 2010; the carbon-plated sneakers Eliud Kipchoge wore when he ran the first sub-two-hour marathon were also banned by running governing body World Athletics.

I mention the governing organizations because in each case, it’s the responsibility of the group that knows the sport best and that has the legitimacy to govern it. While the world has international sports organizations, like the International Olympic Committee (IOC) and the World Anti-Doping Agency (WADA), that issue rules universal to all sports (like those banning controlled substances), they delegate sport-specific regulation to international sporting bodies. And this makes sense; those organizations know the sport best and have the knowledge, infrastructure, and legitimacy to make rules for their sports. I bring this up because the debate over AI regulation isn’t just national, but global. AI is such an enormous and cross-cutting issue that many people argue we need some form of international AI governance. Some say this should be a single centralized body, like what we have for nuclear governance. However, setting up new centralized international agencies is hard for a myriad of reasons, and it’s difficult to come to agreements when you’re trying to do everything under one roof. It’s a recipe for well-meaning platitudes and high-level agreements, not the nitty-gritty details we need to hammer out for effective global AI governance. Instead, we should leverage the vast network (or “regime complex”) of international institutions we already have, like the WHO, ISO, WTO, and other specialized organizations. They can wrap AI into their existing purviews and leverage their domain-specific expertise to assess the risks and benefits of AI within their own domains. If we increase coordination between organizations, we can avoid both overlap and capture by industry interests, which might lead to truly effective AI governance. This isn’t to say we absolutely shouldn’t have a new body, like something in the UN, but that if we do, it should be more of a coordinator for the work of the other bodies rather than a regulator in its own right.

This same metaphor applies to the dynamic between international and national AI governance. An international governance regime can, like the IOC and WADA, set the baseline for what is acceptable, and then each country can iterate on it according to its national context; for example, the US, EU, and China all have different approaches to privacy.

Unfortunately, I worry that, like Canadian curling in 2015, we might need to wait for an inciting incident, a shock point, to trigger action, and with AI, that could lead to more than just a snub at a bar—it could cause genuine human harm. Here’s to hoping that the AI governance community (which you can be a part of! Call your congressperson!) can take a leaf out of curling’s book to knock some heads together, move beyond gentleman’s agreements nationally, and delegate AI regulation to the groups best suited to handle it internationally.

Curling, see you in Milan 2026.

[1] This is the “primary national agency of the Government of Canada dedicated to science and technology research and development” and I shall make no comment on its priorities.

Thumbnail generated by DALL-E 3 via ChatGPT with variations on the prompt “make me the most abstract impressionist painting of curling you can”.

Now that was a great read indeed! Thank you!

Got me wondering about "necessary flashpoints" that might be needed to address the issues proactively.

Point being, considering our human nature and history, the "gatekeepers" of these flashpoints would probably be exploiting them to further their agendas.

Love this! Fun semi-related fact: the term “bias” originates in English from curling’s grass-based equivalent, bowls.