WR 11: I, for one, welcome our new app (& whale) overlords

Weekly Reckoning for the week of 18/12/23

Welcome back to the Ethical Reckoner for the last Weekly Reckoning of 2023! In this edition, Santa is bringing deepfake disinformation for the bad kids and (maybe) more privacy protection for the good kids. All I want for Christmas, though, is a dictionary of whale language, which we’re getting ever closer to. Speaking of presents, the best one I’ve gotten this year is an anti-jet-lag app, but in the Extra Reckoning, I have to ask: am I a technosolutionist hypocrite?

The Reckonnaisance

More privacy online for US children… maybe

Nutshell: The US Federal Trade Commission proposed revisions to its rule implementing the Children’s Online Privacy Protection Act (COPPA), one of the few federal data protection laws the US has.

More: The measures would limit the monetization of children’s data, clarify rules for education tech, and prohibit “nudging” kids to stay online. Parents would have to opt in to data sharing for behavioral advertising. The revisions would also include biometric data in the definition of “personal information,” which could have implications for data protection in VR/AR. Big Tech companies are not big fans of the proposed measures; TBD what parents think.

Why you should care: This may make kids in the US marginally safer online and could curb how apps use push notifications to draw kids back in, although it seems like it would only ban notifications that rely on data like phone numbers. Meanwhile, other children’s data protection bills are stalled, and don’t even ask about a bill to protect adult data privacy.

Bangladesh’s election is an AI disinformation battleground

Nutshell: Cheap AI tools are being used to create deepfake videos of candidates in the lead-up to Bangladesh’s January election, but these videos are largely being overlooked by platforms and researchers.

More: Most disinformation is still being created with conventional editing techniques, but there have been many deepfake videos floating around, muddying the informational ecosystem both through the content they promote and by making it easy for politicians to claim that any content they don’t like is a deepfake. Meanwhile, social media platforms aren’t taking issues in Bangladesh and other developing countries seriously.

Why you should care: This may or may not be a preview of what’s to come in US and EU elections; we can’t know for sure. Research on election disinformation is mostly Western-centric (and suggests that US voters are so polarized that disinformation essentially bounces right off), and so are the solutions proposed by platforms. For instance, political ads aren’t as big a part of political communication in Bangladesh, so labeling political ads that use AI won’t have a significant effect. Beyond that, platforms are derelict in even their normal content moderation duties: if it takes the FT reaching out to Meta to get an anti-opposition deepfake removed from Facebook, clearly Meta’s system isn’t working for the Global South (an issue that is very well documented).

TikTok’s parent company caught using ChatGPT to train its own LLMs

Nutshell: ByteDance was caught using ChatGPT to train and evaluate its foundation model, prompting OpenAI to suspend its account.

More: ByteDance claimed that it stopped using ChatGPT in April (when it gained regulatory approval in China for its own chatbot, Doubao), but The Verge reports that ByteDance is still using it and trying to cover it up. This violates OpenAI’s and Microsoft’s terms of service, but the practice seems widespread among start-ups. Still, it’s unusual for a company of ByteDance’s size to be doing this.

Why you should care: Most of you probably aren’t about to go out and use a Chinese chatbot, but there could be broader effects. Model collapse (where large language models train on AI-generated output and then get worse) is already well documented, including with xAI’s Grok. We don’t know exactly how ByteDance was using ChatGPT, but there are concerns that this practice could exacerbate model hallucination, and if a lot of companies are doing this, the problems will spread well beyond one chatbot.

We’ve made first contact… with humpback whales

Nutshell: Researchers held a 20-minute conversation with a humpback whale.

More: The researchers played “contact calls”[1] from a boat, and a humpback whale named Twain kept responding, even matching the delays between the boat’s broadcasts, which led the researchers to theorize that it was an “intentional exchange.”

Why you should care: Whales are incredibly intelligent and have their own language, so while we’re no closer to understanding it, we’re at least closer to knowing how to converse with them. (And maybe with aliens, too, as one of the researchers theorized.) But I think what’s more promising is that this will help us understand how smart animals actually are. And really, this is just… cool.

Extra Reckoning

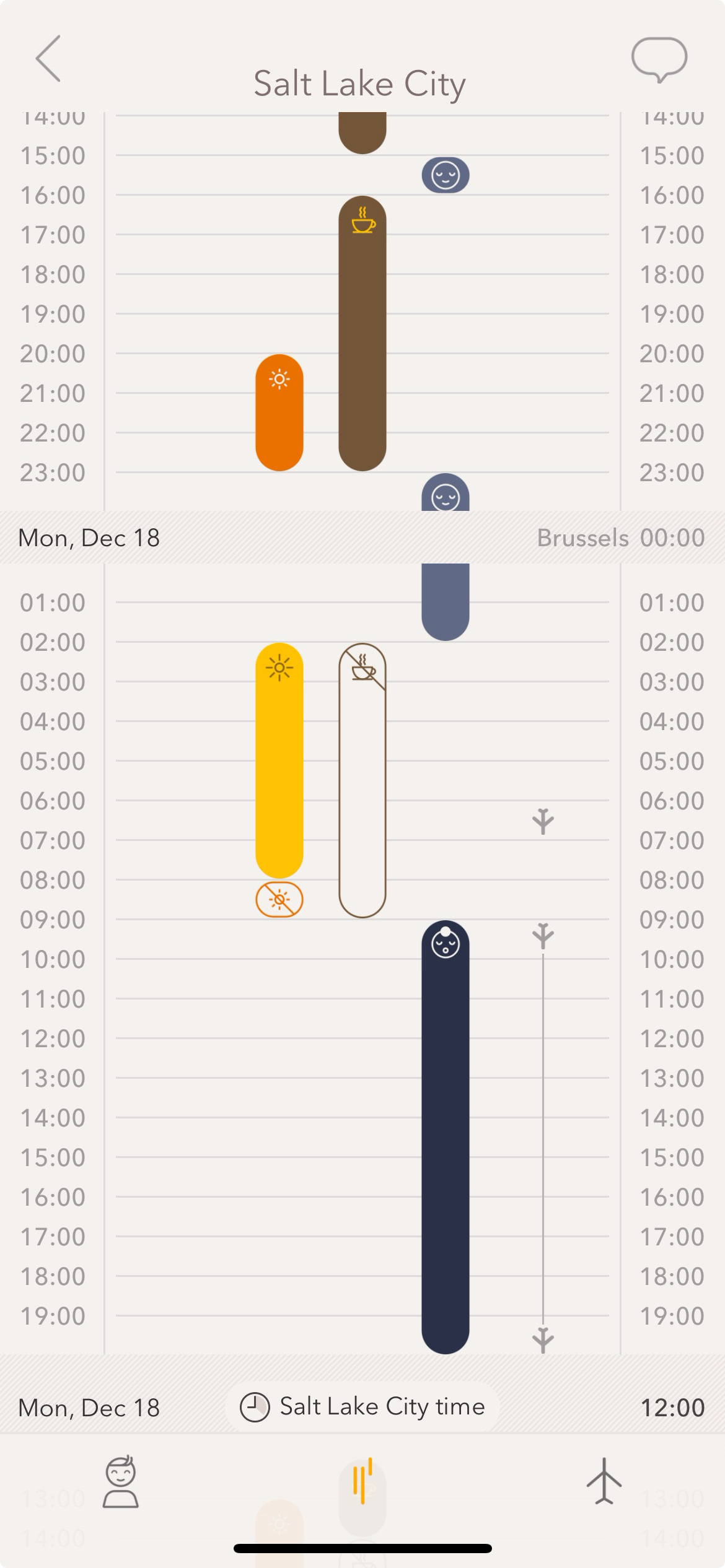

I’ve written before about how I’m generally against technosolutionism (using tech to wallpaper over our problems in ways that often create more problems). However, I ran across one tech solution that I’m a big fan of: the Timeshifter app, which uses circadian rhythm science to prevent jet lag. It recommends when to seek or avoid light, drink caffeine, and, of course, sleep to shift your circadian rhythm faster and overcome jet lag. I’m pretty used to doing a 5- or 6-hour shift (East Coast to Europe), but this holiday season I had an 8-hour shift to deal with, which made me a bit nervous. Someone recommended Timeshifter to me after it helped them on a grueling work trip to South Korea, so I figured I would give it a try. When I first saw my plan, I was… skeptical.

Still, I stuck to it and felt fine when I arrived. And despite having to wake up for a 5am meeting the day after I landed, I had energy the whole day, and you bet I rubbed it in the faces of the friends who laughed when I sent them selfies of me wearing sunglasses indoors to avoid light. “and if the jet lag app told you to walk off a cliff you’d do that too??” quipped one friend.[2] Another called it the “most techy thing I’ve ever seen,” and that made me wonder if I was falling into the technosolutionist trap.

Jet lag is a modern problem. Travel on foot, on horseback, or by ship was so slow that you adjusted during the journey. But our bodies don’t know what to do when we get in a big metal tube and, a few hours later, emerge thousands of miles away somewhere the light and schedule are completely different. Before, I would basically power through the day and/or use sleeping pills to try to force my body to adjust. I especially object to technosolutionism when it ignores or disrespects nature, and sedatives definitely aren’t respecting the body. The other solution to jet lag, and the most natural one, is slow travel (which is an actual movement), and while I’d love to take chiller (and often lower-carbon) methods of travel, I also can’t spend a month traveling home for Christmas. If we accept that jet lag is an embedded part of modern life (a view we shouldn’t extend to all problems), using light is definitely a more natural way of adjusting, just presented in a modern form factor.

But I just found out that Timeshifter also has a shift work app. “Shift work disorder” (SWD) is also a modern invention, a product of the Industrial Revolution that affects people who work outside typical business hours, causing sleep difficulties in an estimated 26.5% of shift workers. Shift work is a part of modern life. Some things will always have to run 24 hours a day, and while there are ways we can and should make shift work easier on workers (like flexible scheduling and avoiding random-pattern rotation), SWD will always exist. If workers find apps like Timeshifter helpful, they should use them. But the amount of marketing copy on the website aimed at employers, who can purchase the app for their employees, makes me uncomfortable.

SWD is disruptive and even dangerous (causing more workplace and car accidents, for example), but the primary concern shouldn’t be the company bottom line. Using the app was itself disruptive, and I wouldn’t want it to dictate my life any more than it did. It would be good if workers could access it for free through their employers on a voluntary basis, but if companies start mandating it (even unofficially) and governing how workers live outside of work, that seems wrong: an intensification of the scheduling and monitoring workers are already subject to. I’m not saying this will happen, but the question of when tech that enhances job performance (like generative AI in some cases) becomes de facto mandatory is something we’ll have to keep an eye on.

Where does this leave us with technosolutionism? I’m pretty comfortable saying that it’s ok to use tech to naturally reset the body on a voluntary basis but not ok to grease worker-cogs in a corporate machine. I guess this is what they call “human-centered” technology, but as I’ve written before (and will again), I do not like this term. But I hope that we can make our way towards a more symbiotic, rather than domineering, relationship with technology—even if we need technological aids to do so.

I Reckon…

that we’re all in for a better holiday season than Apple.

[1] It was the same call over and over, so for all we know, they were just pestering Twain with “hello? Hello?” Honestly shocked she kept responding for 20 minutes.

[2] My response: “is it a different time zone at the bottom?”

Thumbnail generated by DALL-E 3 via ChatGPT with the initial prompt “An abstract interpretation of whale conversation in dreamy shades of blue”.