Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we have to talk about the AI-generated deepfakes of Taylor Swift that sparked outrage, thinkpieces, and maybe even concrete legislation. But we also cover how generative AI might be making code worse, how right-wing media outlets might be trying to redpill generative AI, and how TikTok’s data protection plan is going (about as well as you might think).

This edition of the WR is brought to you by: cycling 75 minutes for the false promise of waffles ☹️

The Reckonnaisance

Explicit deepfakes of Taylor Swift go viral

Nutshell: Sexually explicit, AI-generated images of Taylor Swift went viral on X, remaining up for hours and garnering millions of views.

More: At least some of the abusive images were made on Microsoft’s free AI image designer and originated on 4chan and in Telegram groups dedicated to getting around platform safeguards and generating non-consensual pornography of women. X, having gutted its safety teams, attempted to limit the spread by blocking searches for “Taylor Swift,” while Swift fans tried to drown out the images with tweets of their own. Now, legislation has been introduced in the US that would allow victims of “digital forgery” to take perpetrators to civil court.

Why you should care: Taylor Swift was targeted because of her celebrity, but her celebrity also protected her, rallying fans, platforms, and legislators to her side. She should not have had to go through that. At the same time, most women[1] don’t have her resources. Small-scale image generation and targeting flies under the radar but is incredibly damaging (to mental health, self-esteem, reputation, professional opportunities; I shouldn’t have to list these), and it can be done by anyone with just a handful of pictures of the victim. “Revenge porn,”[2] the nonconsensual sharing of intimate images, was already a huge problem, but now perpetrators don’t even need an actual intimate photo. For now, the only real ways to protect yourself are to avoid posting images of yourself online, educate others about why this is violating, and advocate for better legislation.

The dark side of AI coding assistants emerges

Nutshell: A new study finds that code quality has declined since AI coding assistants became widely available.

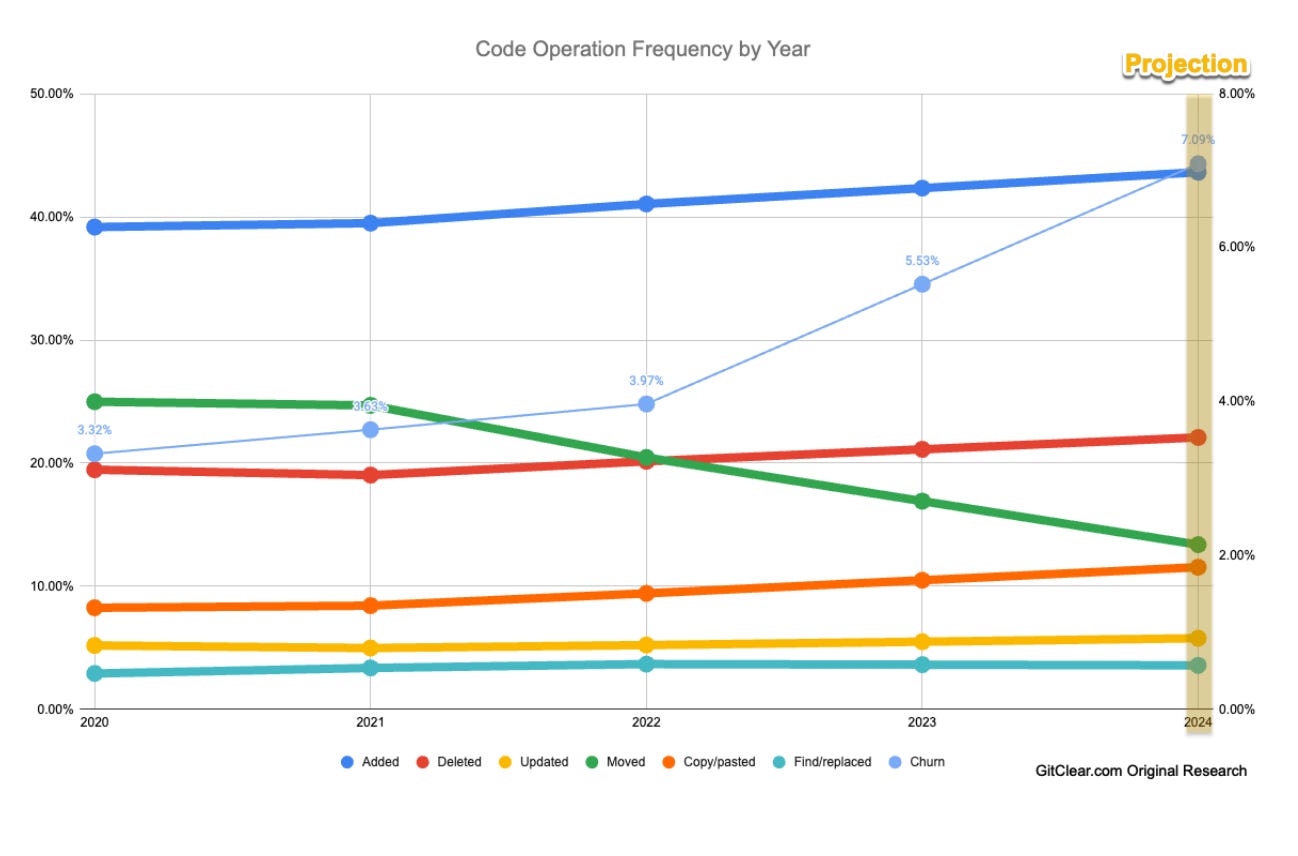

More: The study measured code quality in terms of “churn” (the share of code that gets edited or reverted within two weeks of being written) and code reuse (building on existing code rather than copying and pasting it, which duplicates functionality and bloats files). It found that churn is increasing and reuse is decreasing, implying that overall code quality is declining.
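To make the churn metric more concrete, here is a minimal sketch of how it could be computed. It assumes each line of code is logged with the date it was written and the date (if any) it was next edited or reverted; the `LineRecord` type and `churn_rate` function are hypothetical illustrations, not necessarily how the study computed it.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# "Within two weeks", per the study's definition of churn.
CHURN_WINDOW = timedelta(days=14)


@dataclass
class LineRecord:
    """Hypothetical record: when a line was written and when (if ever) it was next edited or reverted."""
    authored: date
    changed: Optional[date] = None


def churn_rate(lines: list[LineRecord]) -> float:
    """Fraction of authored lines that were edited or reverted within the churn window."""
    if not lines:
        return 0.0
    churned = sum(
        1
        for line in lines
        if line.changed is not None and line.changed - line.authored <= CHURN_WINDOW
    )
    return churned / len(lines)


# Hypothetical example: four lines, all written on January 1.
history = [
    LineRecord(date(2024, 1, 1), date(2024, 1, 5)),   # reworked after 4 days: churn
    LineRecord(date(2024, 1, 1), date(2024, 1, 20)),  # reworked after 19 days: not churn
    LineRecord(date(2024, 1, 1)),                     # never changed
    LineRecord(date(2024, 1, 1), date(2024, 1, 14)),  # reworked after 13 days: churn
]
print(churn_rate(history))  # 0.5
```

Under this definition, a rising churn rate means more freshly written code is being thrown out or reworked almost immediately, which is the signal the study reads as lower quality.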

Why you should care: So far, studies of AI coding assistants have shown that programmers complete tasks faster with AI, but they don’t account for the long-term maintainability of the code. Speaking as a former software engineer, I can say that sometimes it’s better to go slower and refactor your code so that it integrates better with the codebase, and so that your coworkers don’t come to you a week later complaining that you’ve introduced a bunch of bugs. This is more evidence that AI is good at one-off coding tasks (RIP Leetcode[3]) but not at tasks that require a lot of context (like knowing how new code is supposed to fit into an existing codebase). And while this matters for your job if you’re a developer, worse code means more breakages, which affects anyone who uses digital services.

Mainstream sites block AI crawlers; right-wing news sites don’t

Nutshell: 88% of top-ranked news outlets in the US block the bots that AI companies use to gather training data and chatbots use to browse the web, but right-wing news outlets are more permissive.

More: Two theories have been floated for why this is. Either it’s an ideological stance, meant to increase the proportion of right-wing content in training data in response to a perceived political bias, or right-wing sites tend to be smaller and less well-resourced, so blocking bots isn’t a priority. Notably, two of the sites started blocking the bots after Wired asked them about it.

Why you should care: Concerns have been raised that this will make generative AI training datasets more right-leaning overall, but between the sheer volume of training data, the use of human feedback to tweak trained models, and the incentives for AI companies to make their chatbots appear neutral, I don’t think this is a huge risk. What’s more concerning is that it may give chatbots an ideological bent when they browse. When chatbots like Bard or ChatGPT browse the web in response to user queries, they can’t visit sites that block their bots. So the next time you ask ChatGPT how the election is going, it won’t be able to pull results from CNN, but it will be able to pull results from Breitbart. Watch this space as publishers sign licensing agreements with the big AI companies.
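Publishers generally do this blocking through their site’s robots.txt file, which well-behaved crawlers check before fetching a page. As a rough sketch, Python’s standard-library `urllib.robotparser` can show how the same crawler gets different answers from two sites. The robots.txt contents and example.com URLs below are made up for illustration, and `GPTBot` (the user-agent name OpenAI publishes for its web crawler) stands in for any AI bot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks OpenAI's GPTBot, allows everyone else.
BLOCKING_SITE = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

# Hypothetical robots.txt: no AI-specific rules, so every crawler is allowed.
PERMISSIVE_SITE = """\
User-agent: *
Allow: /
"""


def bot_may_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(bot_may_fetch(BLOCKING_SITE, "GPTBot", "https://news.example.com/election"))     # False
print(bot_may_fetch(PERMISSIVE_SITE, "GPTBot", "https://other.example.com/election"))  # True
print(bot_may_fetch(BLOCKING_SITE, "Mozilla/5.0", "https://news.example.com/election"))  # True
```

Note that robots.txt is a request, not an enforcement mechanism: it only keeps out crawlers that choose to honor it.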

TikTok’s data protection plan isn’t going well

Nutshell: TikTok’s “Project Texas” promised to protect US user data from its Chinese parent company, ByteDance, but it may have been given an impossible task.

More: Project Texas employees are tasked with keeping US user data walled off and reviewing all code changes for “signs of Chinese interference,” but they’re often required to download and share user data (including personal data like email addresses) with ByteDance employees, and the code volume they have to review is untenable. There’s no evidence of the Chinese government trying to access US data, but as the article notes, “it shows TikTok’s system is porous.”

Why you should care: I’m still of the opinion that the biggest risk TikTok poses is its addictiveness, not the potential for Chinese interference. Regardless, this isn’t a good look for a company that’s staking its reputation on being able to protect user data. And if you’re worried about your data (or about whether you’re on a list of, say, users who watch LGBTQ+ content), your concern is legitimate, because that data may not stay in the US.

Extra Reckoning

I want to think for a minute about what the Taylor Swift X debacle says about the state of safety on X. (Spoiler alert: nothing good.) Twitter/X has been delegating fact-checking to the community for a while through Community Notes, which has been helpful on occasion but is cumbersome, slow, nontransparent, and subject to manipulation. We could have a whole conversation about this, but it seems clear that Community Notes are not a substitute for actual trust and safety staff.

Now, X is unofficially delegating content moderation to the community as well. Even with millions of eyes on the images of Taylor Swift, including a fan army actively reporting them, it took 17 hours for the most-viewed image to be taken down. And afterwards, the best X could do was block searches for “Taylor Swift” while it slowly chased down and removed the images and the accounts spreading them.

X is breaking the basic agreement it makes with its users: that it will protect them from active harm and keep the platform from becoming a cesspool. Now anyone could be a target. There’s a budding industry around making this AI-generated abuse imagery, and it doesn’t just target celebrities. Those of us without a millions-strong fanbase to rally to our side (and I know you, dear readers, would, right?[4]) are out of luck. Abusive images of most people won’t go as viral as Swift’s did, but the scale doesn’t really matter. Whether these images are seen by ten people, a hundred, a thousand, or millions, the people who see them are the people you’re associated with online: your friends, family, and colleagues. No matter the size of that circle, having images like this spread through it would be awful.[5] But if the only way to get them removed is to have essentially the entire world rally for you and against the platform? We’re out of luck.

Taylor Swift is so big right now that she’s gotten Ticketmaster investigated and legislation introduced to let people sue over fake explicit images. But advocates have been pushing for legislation on AI-generated nonconsensual explicit images for a long time, and she shouldn’t have had to go through this for it to be regulated; it shouldn’t take a high-profile case of abuse to get action when the evidence is all there. Women have been targeted by revenge porn and explicit deepfakes for years,[6] and all we have is a patchwork of 48 state laws that criminalize revenge porn to varying extents; only a handful of states address deepfakes. The situation is similar worldwide. And the proposed US legislation, even if it passes, only makes this a civil offense, not a criminal one. At worst, if nothing passes, we have to ask:

If this—the most famous woman in the world getting targeted with explicit, violating pictures—doesn’t get legislators to act, then what will?

And even if something does pass, it doesn’t fix the problem of inadequate platform moderation, and we still have to ask:

If the only way we can regulate is via celebrity, and the only way we can moderate is via fandom, where does that leave the rest of us?

I Reckon…

that every family should have an AI safe word.

[2] This is getting rebranded as Nonconsensual Distribution of Intimate Images (NDII) to erase any suggestion that the victim did something to deserve it and to cover cases not motivated by revenge. I fully support this, but I use the old term to minimize confusion.

[3] I’m actually dancing on its grave; I hated coding challenges.

[4] You are not millions strong, but maybe someday :P

[5] To be clear, having them spread outside of it is also awful, but for the average person, they will almost certainly be seen by people they know.

[6] There are now also issues with AI-generated child sexual abuse material, which legislators worldwide are struggling to address.

Thumbnail generated by DALL-E 3 via ChatGPT with the prompt “Generate an abstract impressionist painting with a consistent color palate of earth tones and a hazy atmosphere abstractly representing the phrase ‘Regulating by celebrity, moderating by fandom’”.