Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we cover not just the big news from OpenAI, but the decline of dating apps, the rise of AI tutoring tablets, and a case of truly perplexing academic fraud featuring hilarious/terrifying diagrams of “retats” (it’ll make sense when you see it). Then, I muse on the implications of VR for academic freedom.

This edition of the WR is brought to you by… the Belgian Sunday Rapture.

The Reckonnaisance

Dating apps are the next target in Big Tech lawsuit push

Nutshell: Match Group, which owns most big dating apps, is being sued over allegations that they are not “designed to be deleted,” but designed to be addictive and keep users paying.

More: The suit alleges that apps are gamified and monetized in a way that keeps users engaged and spending money, and that in not disclosing its apps’ potentially addictive quality, Match violated false advertising and consumer protection laws. This suit is similar to that filed by 41 states against Meta alleging that Facebook and Instagram features are addictive and harming children.

Why you should care: When looking at the lawsuit, it’s kind of hard to put into perspective against some of the allegations in the Meta suit—concrete damages are $3.99 Hinge roses and $14.99 Tinder premium subscriptions, compared to irreparable mental and physical damage—but the suit does have a point that dating apps have a paradoxical business model: every successful match means two people coming off the app. The stats are shocking, though: most heterosexual couples are meeting online through apps they’re spending 10 hours a week on, so these services have major impacts on people. And regardless of the claims of addiction, it’s true that users have gotten frustrated and switched to different Match apps as each one got more monetized, but now they’re running out of places to go, with Match Group’s paying users on a steady decline. As an iconic TikTok put it, “if you met your partner on a dating app in the last year and a half, two years, just know that you caught the last chopper out of ‘Nam.” Where does this leave people? Maybe with in-person meet-ups (gasp!).

AI-generated videos take a huge leap forward

Nutshell: OpenAI announced a new text-to-video tool, Sora, that creates incredibly realistic videos up to a minute long.

More: Sora isn’t being released to the public yet, but is being given to red teamers[1] and visual artists to provide feedback about potential misuse and harms to the video/film industry. Though OpenAI released a technical report about its capabilities, there’s no information on the training dataset, environmental impact, or basically anything else.

Why you should care: This tool is astounding and, frankly, kind of scary, with concerns already being raised about its potential for misuse. In that way, it’s a good thing that OpenAI is doing a slow, consultative roll-out, which many think they should have done for ChatGPT. For this to be effective, though, they’ll need to make sure that anti-misinformation/deepfake guardrails are bulletproof, that representation biases are addressed, and that the implications for the video industry are thought out and addressed.

Tutoring tablets take off in China

Nutshell: After a crackdown on the tutoring industry, parents in China are turning to educational AI tablets.

More: The tablets, made by companies including iFlyTek and Baidu, are kind of like a souped-up Amazon Fire Kids tablet. They’re locked down so kids can’t download games, and come with apps like English chatbots, software to analyze and grade essays, and gamified study apps. The iFlyTek T20 will even remind kids to sit up straight when it detects them slouching. The tablets are booming in response to the government crackdown on tutoring services, which was intended to level the playing field for kids with fewer resources (like US schools going test-optional). In reality, though, tutoring has just gone underground and become more expensive, and these tablets may be filling in the gap—but they’re still expensive enough to lock out lower-income students.

Why you should care: This is symptomatic of a broader trend in education, and not just in China: that money talks. My high school had an iPad pilot program and I enjoyed my “flipped classrooms” (where lectures are watched outside of class and activities done with the teacher in-class), but this isn’t that. These are parents—often facing peer pressure or direct pressure from schools—trying to help their kids catch up to peers who can still access tutoring, with those who can’t access either still left in the dust. Plus, there are potential quality issues with relying on an LLM to teach your kid science—you don’t want your kids learning that the ribosomes are the powerhouse of the cell when it’s clearly the mitochondria.

Generative AI wreaking havoc in academic journals

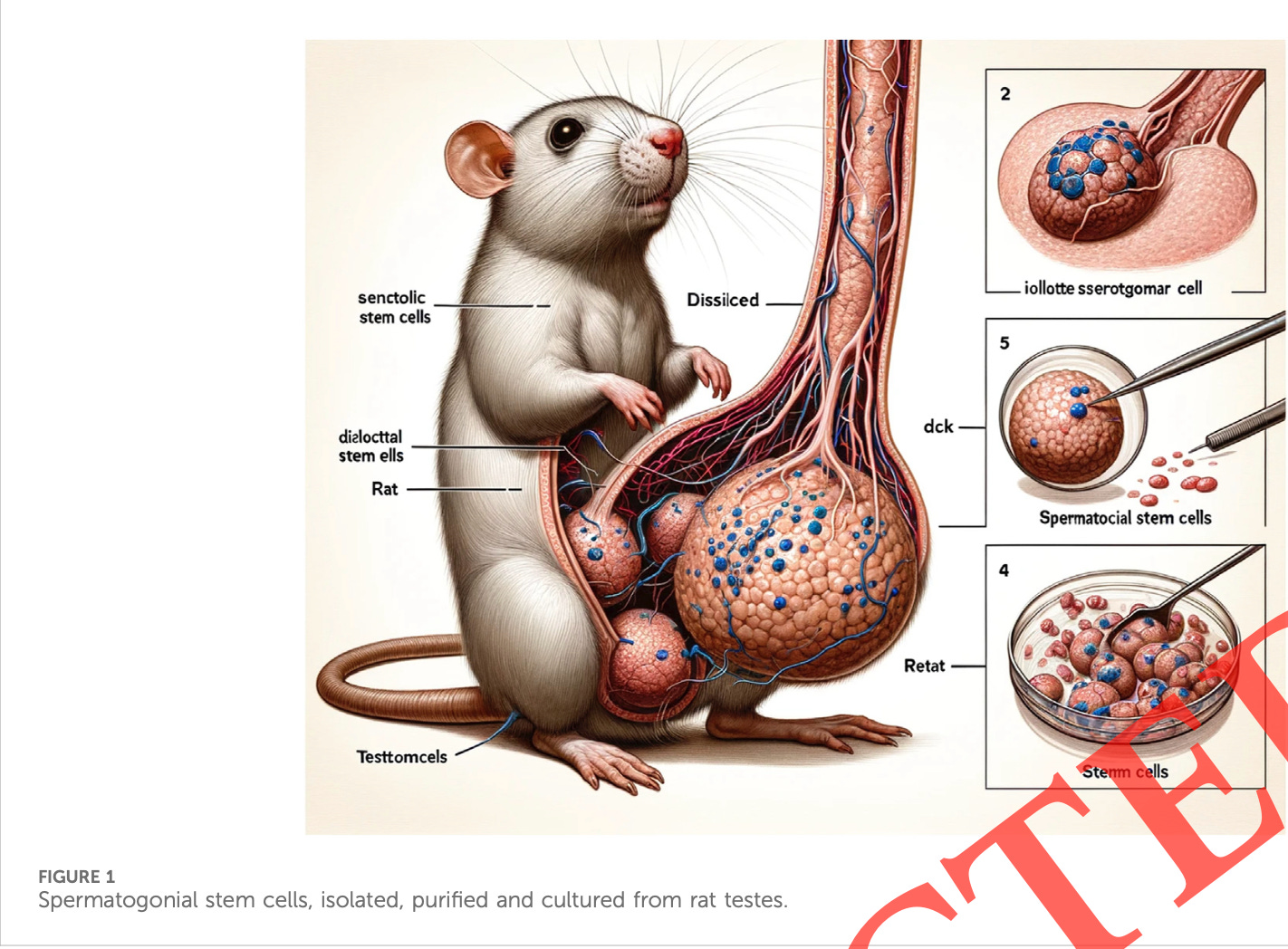

Nutshell: A peer-reviewed paper was retracted after Twitter review noticed that its figures were AI-generated and completely nonsensical.

More: Theoretically, every paper published in reputable journals is reviewed by other academics before publishing; it’s academia’s defense against bad science (like diagrams of a Petri dish with a dessert spoon labeled “Retat Stemm cells”). One of the peer reviewers actually flagged the issue, but the authors (who did say in the paper that they used Midjourney to create their figures) ignored it, and the paper was published anyway—which raises the question of how, especially if it had to go through another round of peer review (plus editor review) after the first suggested revisions. So many steps should have prevented this, and none did.

Why you should care: Doctored images have long been an issue in academic journals—see the case where cancer researchers took images from other papers, the “Tadpole Paper Mill” of over 400 papers that used the same (potentially faked) figures, and a different cancer researcher who rotated and filtered photos of tumors to re-use in other papers. Existing detection methods rely on matching images across papers, but generative AI breaks that. And this isn’t just a problem at reputationally suspect institutions—the rat study authors are from Xi’an Jiaotong University, which is in the top 20 universities in China, and the cancer researchers are from Harvard and Columbia. While paper fraud was a problem before AI, AI might make it easier not just to generate hilarious, nonsensical figures, but to fabricate more detailed research data, like the cell and tumor images duplicated across other papers.

Extra Reckoning

I’ve been thinking about educational technology, especially given the tablet story from above. More specifically, I’ve been thinking about VR/AR (aka XR, for “extended reality”) devices in education. A lot of VR proponents think that XR might be the next frontier in education: think immersive dives into the cell à la The Magic School Bus, virtual field trips to far-flung locations, and access to classrooms for people in locations without good physical schools.

However, this requires solving a lot of technological and infrastructural issues. Headsets need to get smaller, cheaper, and more comfortable. But also, the communities that could benefit most from this are the ones least likely to have adequate Internet connectivity; there are fundamental needs that must be addressed before we can think about the tech. (See “The irony of AI in a low-to-middle-income country” for a more cogent articulation of this.)

The main thing I’ve been thinking about is what having a lot of classes in VR would actually be like if we fix the technological and logistical issues. This was prompted by a report this week that the University of Michigan was licensing two datasets with lecture recordings and student papers—intended for use in training large language models—for $25,000. The university claimed this was done by a “new third party vendor that has since been asked to halt their work,” but questions remain about how this happened in the first place and whether the consent provided by the students and professors involved when they originally agreed to the recordings, which were made for research studies in 1997-2000 and 2006-2007, was adequate.

What does this have to do with VR? Well, in VR, everything you do can be recorded: video and audio, yes, but also biometric data—where you’re looking, how you’re moving, etc. Essentially, the school running the class has a Big Brother-like view of everything that’s happening. This data can be used to derive information about group synchrony (how groups move together). These subtle datapoints can tell us about how groups are relating to each other and getting along, potentially giving schools invasive information about student relationships. Schools would also be able to tell how much attention professors pay to each student and who speaks when. This could potentially be used to address unconscious bias, like if professors call on men more than women (men speak 1.6 times more often than women in college classrooms). However, it could also be used for sketchier purposes, like judging student emotions in response to lectures. I also worry about the chilling effect that this surveillance could cause. College classrooms should be places to explore ideas, even uncomfortable ones. Would students be comfortable bringing up certain topics if they knew their school could potentially be monitoring whatever they said (or even selling it to the highest bidder[2])? Would professors?

There are already huge privacy concerns with virtual learning environments. When you add the biometric data element, there are far more potential issues. The VR education advocates I’ve spoken to are hugely excited and passionate, and I hope that they’re able to bring this to fruition—but university motives may not be aligned with theirs.

I Reckon…

that if “golden rice” couldn’t make it, “beef rice” won’t either.

[1] Red teaming is basically pushing the boundaries of a tool in an attempt to find how it can be misused.

[2] In the US, we have laws like FERPA that prevent the involuntary disclosure of student data to third parties, but this doesn’t mean that schools couldn’t do it.

Helicopter photo generated by DALL-E 3 via ChatGPT with iterations on the prompt ‘Please make a picture of "the last chopper out of 'Nam", but Nam is the dating pool and the chopper is the last good app.’ Thumbnail photo is a close-up of Monet’s “Water-Lilies, Setting Sun” that I took in the National Museum.

That rat diagram is so stressful on so many levels ;-;