WR 29: A Golden Gate lobotomy; what cli-fi tells us about the future

Weekly Reckoning for the week of 2/6/24

Welcome back to the Ethical Reckoner! If you’re new around here, this is what the newsletter looks like the first three or four Mondays of the month: a rundown of the tech news you need to care about (or that will make you sound smart if you bring it up around the water cooler/bridge table/Discord chat), plus a reflection about something I’ve been thinking about.

In this Weekly Reckoning, we cover how AI researchers got into the mind of an LLM (and made it obsessed with the Golden Gate Bridge in the process), the rise of hikikomori outside Japan and the loss of an Internet subsidy program in the US, and then my thoughts on how The Future and The Ministry for the Future present the end of the world—and how to save it.

This edition of the WR is brought to you by… the joys of fresh strawberries and Yorkshire puddings.

The Reckonnaisance

Researchers get inside the “mind” of a large language model

Nutshell: Anthropic, which makes the LLM/chatbot Claude, mapped millions of concepts within Claude 3 Sonnet, one of its deployed models, cracking open the “black box.”

More: Lots of AI isn’t explainable or interpretable, meaning we don’t really know why it works (hence the idea of it as a black box). But being able to identify where specific concepts live in the model means that we can more easily change it. This includes relatively silly things—Anthropic briefly made available “Golden Gate Claude,” which was fixated on the Golden Gate Bridge because they amplified a feature that was associated with the bridge—but also things that impact model safety, like sycophancy and the ability to write scam emails. And, while scaling this mapping approach to larger models is currently infeasible, advances might make it more efficient.

Why you should care: First off, the responses from Golden Gate Claude are hilarious. But second, this is really interesting research that could help us solve one of the fundamental problems of much of AI, which is that we don’t really understand why it works. Also, this sort of “model steering” could actually be useful for creating LLMs that help you understand different topics, whether the Golden Gate Bridge or something else.

Internet subsidy program ends in the US

Nutshell: The Affordable Connectivity Program, which helped 1 in 5 US households get Internet access, expired on Saturday.

More: The ACP had been going for 2.5 years and helped subsidize Internet access for over 60 million people across the country, including on tribal lands, which are expected to be hit the hardest by the program’s collapse. Efforts to extend the legislation failed in the face of Republican inaction, but the White House got several ISPs to commit to providing subsidized Internet for 10 million of the 23 million impacted households.

Why you should care: None of this digital ethics stuff matters if you can’t access the Internet. Every so often I remind myself of this to keep things in perspective, because while I’m over here worrying about what AI overviews will do to Google Search, there are billions of people who can’t access Google to begin with.

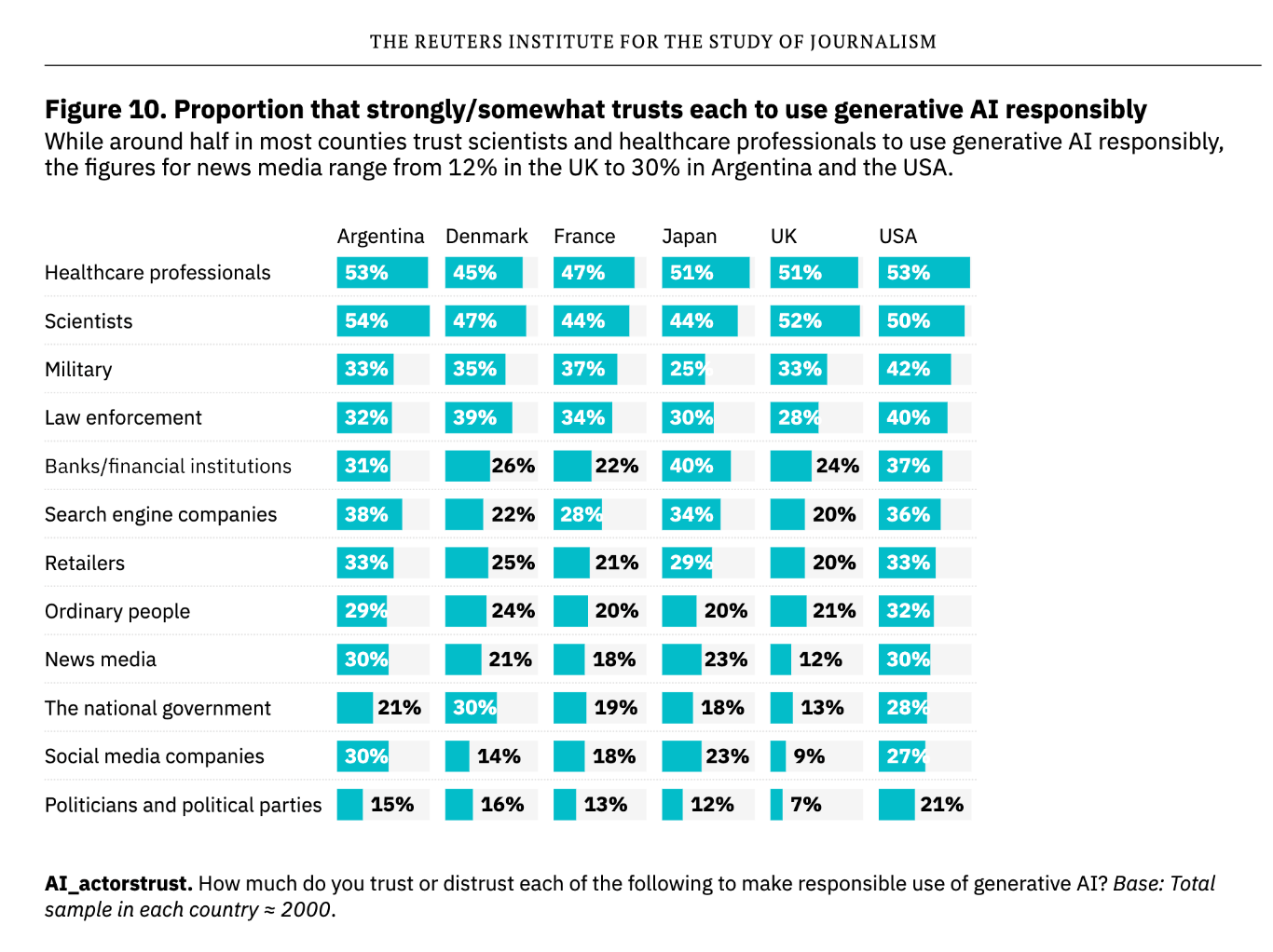

Public skeptical of generative AI in news

Nutshell: A huge survey of people in six countries showed that people are unsure of how generative AI will affect the news and life more broadly.

More: The survey aimed to analyze the public’s awareness and attitude towards generative AI (genAI, like text generator ChatGPT and image generator DALL-E), especially regarding news media. 72% of people think it will have a large impact on search and social media, and 2/3 think it will have a large impact on the news media, but only 48% of people think it will have a big impact on ordinary people. This is despite the fact that most people do not trust anyone to use genAI responsibly, including journalists. But 30-40% of people think that journalists already always or often use genAI for various tasks, plus 17% for “creating an artificial presenter or author,” which reflects a “degree of cynicism about journalism.” This is on top of the fact that most people are not comfortable with news generated entirely by AI, but less than half endorse various labelling or disclosure techniques.

Why you should care: This survey is somewhat perplexing, but I’m glad it was done. There are no glaring red flags—except for the 5% of people who are using AI to “get the latest news,” which it is not designed for—but overall it reflects a lot of polarization and a lot of uncertainty. It’s surprising to see that nearly half of people haven’t heard of genAI, one of the things I’ve spent a lot of time reading and writing about these last two years (oh, the techie/academic bubble), but it’s always good to keep things in perspective and to see that people are really uncertain about how this will impact their lives despite the relentless drumbeat of optimism from tech companies.


Hikikomori gain notice outside Japan

Nutshell: Usually associated with Japan, the social recluses called hikikomori are being identified elsewhere.

More: Hikikomori (translated as “shut-ins”) are people, typically young men, who isolate themselves for months at a time. Some leave occasionally for groceries, while others never leave their bedroom. COVID made things worse, but it’s been a known phenomenon since the 1980s. A (visually and factually) stunning report from CNN illustrates the stories of hikikomori in Japan, South Korea, and Hong Kong. There are organizations dedicated to helping them reintegrate into society, but their reach seems inadequate to the scale of the issue, and the social issues that contribute to it (including the shift away from physical communication and increased economic instability) are global.

Why you should care: The hikikomori phenomenon is often “thought to be bound to Japanese [c]ulture,” but there are cases popping up across the world. If contributing factors are truly global, then we may be seeing more of this. But their relationship to digital technology is also interesting; for many, it’s the only way they interact with the outside world, but it also gives them something to do rather than leave isolation, and it may also contribute to the social changes that encourage becoming hikikomori.

Extra Reckoning

[Spoilers in the next two paragraphs for The Future by Naomi Alderman and The Ministry for the Future by Kim Stanley Robinson]

I recently finished The Future by Naomi Alderman. Basically, it’s about the supposed end of the world in a lightly fictionalized future version of our society. The CEOs of the three biggest tech companies are told by an advanced AI system that there are early signs that a world-ending pandemic is developing and they need to get to their bunkers ASAP, but their plane crashes on a deserted island, and they have to survive there as (they think) the world ends around them. It turns out that it was all a plot by a few other characters to get them out of the way and take over their companies so that they can use them to save the world. The plotters change the companies, embrace antitrust breakups and wildlife preservation, and nudge people into different opinions. As a result, “voters, slowly but surely around the world” start to vote for “more radical policies on environmental protection.” One of the companies works with governments to transform city infrastructure to prioritize walking, biking, and autonomous electric car sharing; another buys huge swathes of land for preservation. The world is saved by leveraging the existing system, albeit with more advanced technology.

This technocratic vision of how to save the world was an interesting contrast with Kim Stanley Robinson’s The Ministry for the Future, which opens with a blisteringly wrought heat wave that kills millions across India. The resulting outcry shocks the world into action. A UN agency, the Ministry for the Future, is formed, and the rest of the novel follows its efforts to pull the world into compliance through politics, science, and (allegedly) ecoterrorism. It goes into far more detail than The Future, which sums up the world-saving bit in a chapter at the end, but ultimately, the world is saved by burning the existing system to the ground. A “carbon coin” is issued, central banks are convinced, private banks and energy companies are nationalized, and geoengineering technology is advanced. The only time tech companies are really mentioned is when social media gets replaced in the span of two paragraphs by YourLock, an open-source, cooperatively owned, blockchain-based system that facilitates data micropayments and green investing. Even though tech is part of the solution, tech companies are not, and overall the book focuses much more on how to shift economic incentives to make change.

[spoilers over]

The two books take very different perspectives[1] on saving the world. Like I said, one leverages the system as it exists, while the other makes fundamental changes, but they also say different things about who catalyzes change. The Future starts with a few key pivot people making decisions and then zooms out to the masses helping forward those changes through democracy (to the extent they can within a technopoly), while The Ministry for the Future is built on the bodies of millions of everyday people and then focuses on a few key players working to make the Ministry effective. That’s not to say that democratic participation doesn’t play a role, but it’s much more about the government machinations and nitty-gritty work that makes change within institutions.

I really like this brand of speculative fiction/climate fiction/manifestos-turned-novels because of what they say about our present and possible future. These two books show that there are many possible ways forward, which is somewhat heartening, but they agree that for us to be able to get ourselves out of the climate crisis, something has to initiate action. Alderman and Robinson are able to decide what that is; they don’t have to wait as dozens of fuses (Delhi being above 104°F for days on end? The Great Barrier Reef collapsing? The most destructive hurricane season on record?) fizzle out. Which raises the question: which one will catch?

I Reckon…

that EU data regulators not allowing paid, targeted-ad-free social media just means subsidizing more privacy for Europeans with American data collection.

[1] One commonality between the books is the creation of massive wilderness preserves, which shows that part of the solution is to just leave it alone.

Thumbnail generated by DALL-E 3 via ChatGPT with variations on the prompt “Make an abstract brush Impressionist painting of the concept of bridging two opposing concepts”.