WR 3: AI news, the Whole Earth Catalog, and a crypto heist

Weekly Reckoning for the week of 16/10/23

Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we’ve got quite a bit of AI news (from AI lawyers to AI summits to AI company values), but also an investigation into a crypto heist and a time portal back into 1970s counterculture. We’ll also take a deep dive into OpenAI’s new “core values” and see what they portend for the company’s future.

The Reckonnaisance

Hip-hop legend convicted of being an unregistered foreign agent seeks new trial, alleging his lawyer relied on an AI tool he was shilling

Nutshell: He’s alleging that his lawyer over-relied on AI to the extent that it deprived him of his right to effective counsel.

More: Pras Michel of the Fugees was convicted in April on campaign finance, witness tampering, and foreign agent charges. It turns out his attorney may have an undisclosed financial stake in an AI “litigation assistance technology” company. After the trial, the attorney boasted that “AI wrote our closing [argument],” which, according to a court filing, did not go well for the defense. The company also proudly crowed that they “made history… becoming the first use of generative AI in a federal trial,” without mentioning that they lost. The claim isn’t true, either: a lawyer used ChatGPT in filings in a federal court in NYC earlier this year and got roundly torn apart for it.

Why you should care: You definitely should if you’re about to be on trial with a sleazy lawyer, but even if you aren’t, you should be wary of claims that AI can help “10x” productivity (a phrase that software engineers know all too well). Such claims create a techno-solutionist narrative that AI is a panacea and that you’ll be worse at your job if you don’t use it. In some cases, you’ll be worse at your job if you do use it. There are times and places when AI can be helpful, but right now, a courtroom with your client’s liberty on the line is not one of them. People have also been heralding AI for being able to “democratize” access to services, but there’s a risk of it turning into a two-tier system where those who can pay get human services, and those who can’t get a worse AI.

The UK’s AI Safety Summit is trying to position Britain as a regulatory leader

Nutshell: PM Rishi Sunak is using next month’s “AI Safety Summit” to position the UK as an AI safety hub, but there are concerns that it’s focusing too much on low-probability existential risks.

More: The summit focuses on “frontier AI,” which seems to basically be advanced AI. Its first day will focus on risks to global safety from misuse, risks from unpredictable advances in capability, risks from loss of control, and risks from the integration of frontier AI into society (which includes concerns like bias and election disruption). This is heavily weighted towards existential risk concerns, and Sunak will reportedly use Day 2 to pitch an “AI Safety Institute” to allies. However, it’s unclear if the summit will be productive. Allies seem skeptical, and while China was invited, the attendee list hasn’t been announced.

Why you should care: As I’ve argued, AI probably isn’t going to kill us all, so if existential risk becomes the focus of too many governments, it could be detrimental to AI governance as a whole. On the other hand, there is a place for AI safety research, so if the UK is staking its claim here, it could encourage other countries and agencies to focus more on present risks—unless it drags the rest of the world in.

The entirety of the Whole Earth Catalog and its sibling publications is now online (and free)

Nutshell: The Whole Earth Catalog was a counterculture magazine and catalog published by a man at the center of 1960s/70s counterculture (and cyberculture), Stewart Brand. Think Sears, but for hippies and Deadheads.

More: The Internet Archive and the Long Now Foundation have collaborated to digitally archive the entire back catalog of the publication that Steve Jobs described as “one of the bibles of my generation,” a kind of Google before Google existed. It encouraged self-sufficiency and holism, an ethos carried forward in its descendants, Coevolution Quarterly, the Whole Earth Review, and Whole Earth Magazine.

Why you should care: These are incredible relics of counterculture from 1970 through 2002. I’ve been using them to research the history of VR, but even if you aren’t academically interested in them, they’re still a great way to spend some time scrolling online (instead of doomscrolling). I opened a random issue of Coevolution Quarterly and found poetry, political theorizing on plutonium coupled with a “Plutonium Chant,” a critique of pork-barrel politics, and several illustrations of different peregrine falcons. What more could you want?

Someone—maybe Russia—stole a bunch of crypto from FTX and is cashing out as SBF’s trial continues

Nutshell: In the confusion of FTX’s collapse, someone stole more than $400 million from the exchange. It seemed like an inside job, but researchers now suggest the thieves may have links to Russian cybercrime. Whoever they are, they’re cashing out.

More: After the heist, the thief/thieves started moving the stolen crypto around to make it harder to trace. There are services like decentralized exchanges, cross-chain bridges, and crypto mixers that make it easy to swap coins, move cryptocurrency across blockchains, and mix it with crypto owned by other people, muddying the blockchain trail. Some of the services the thieves used have since been seized or sanctioned. Researchers found that some of the crypto has been mixed with funds from Russia-linked criminal groups, indicating the thieves might be affiliated with Russia. Now, the thieves are cashing out during Sam Bankman-Fried’s trial, which is either incredibly stupid or incredibly brave.

Why you should care: Crypto is becoming a common target for cybercriminals, including those linked to Russia and North Korea. North Korea-affiliated hackers stole $1.7 billion in crypto in 2022, making stolen crypto a significant chunk of the country’s economy and likely of the funding for its nuclear program. Also, blockchain detective is becoming a legitimate profession, and a new genre of fascination for the rest of us.

Extra Reckoning

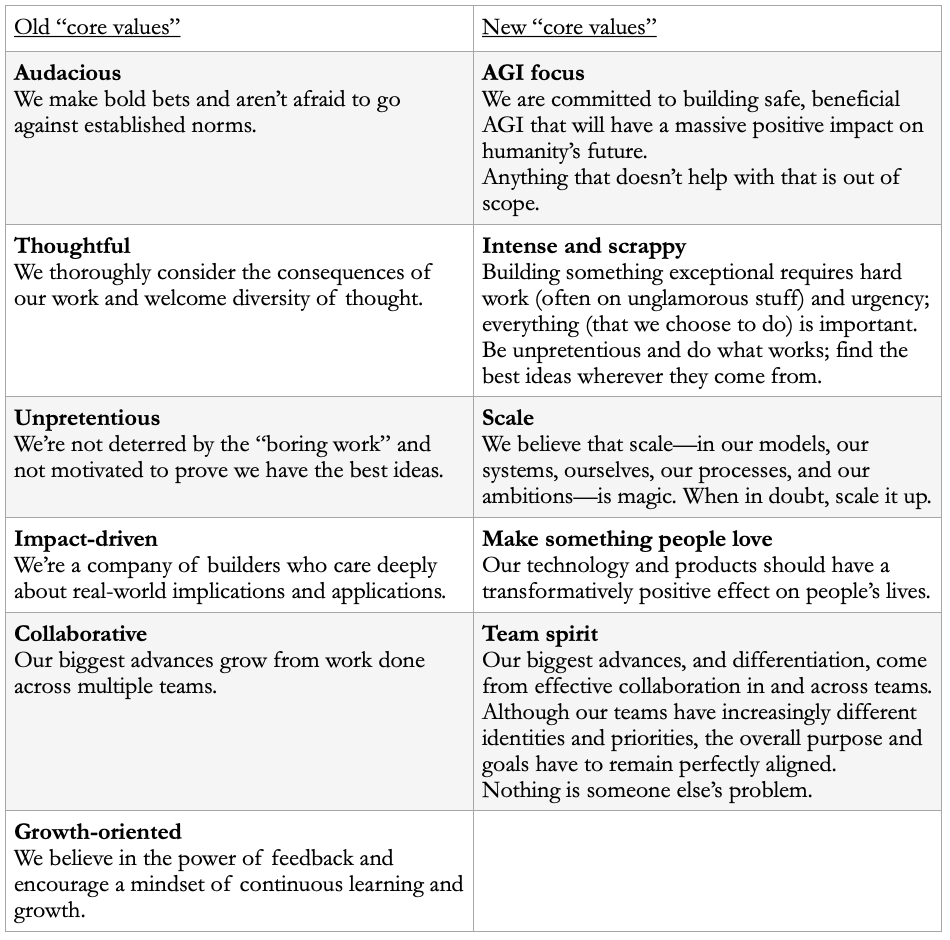

OpenAI, the company behind ChatGPT, quietly changed their company principles recently. Let’s compare the old and new and see what we can find.

The headline change here is obvious: the full-throated focus on artificial general intelligence (AGI).1 OpenAI has always considered themselves an AGI company working for the “best interests of humanity,” but their changes to their non-profit structure and refusal to open-source their models (or disclose much information about them), among other issues, have made some question their intentions. The declaration that anything they think doesn’t benefit that mission is “out of scope” will probably only fan those flames, as will the focus on being “intense and scrappy” (echoes of Musk’s “extremely hardcore”?) and the “magic” of scale. “When in doubt, scale it up” might be the new “move fast and break things,” which Facebook eventually distanced themselves from. There’s a question as to whether making bigger AI systems is the right strategy (for instance, smaller language models with better data can outperform larger ones), but OpenAI has the resources and is committing to this path.

I also want to point out that making “something people love” isn’t the same as having a “transformatively positive effect on people’s lives”; we can love things that are bad for us (e.g., cigarettes or Twitter). I’m curious about how OpenAI will measure their impact and account for externalities that we may actually like.

Overall, the first set seems to be focusing much more on how OpenAI works and defining their company culture, while the second focuses much more on what they want to do. “Collaboration” and “unpretentious” are still mentioned, but the primary goal is AGI. OpenAI may be moving into a new phase of hustle where company culture is secondary to achieving company goals. If AGI isn’t right around the corner, though, and if these new values cause a significant shift in how OpenAI operates, we’ll have to wait and see how long they’re able to maintain this sprint.

I reckon…

that Twitter is irreparably broken.

1. OpenAI defines AGI as “highly autonomous systems that outperform humans at most economically valuable work.”

Thumbnail generated by DALL-E with the prompt “An abstract oil painting embodying law, politics, and Silicon Valley”.