Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we of course touch on Apple’s new AI features and what they mean for Apple and generative AI, but also two tech safety issues in Chinese self-driving cars and American healthcare, plus the death of an important Stanford research group. Then, I give a quick recap of a great conference I went to in Spain.

This edition of the WR is brought to you by… accidental Alicante bushwhacking

The Reckonnaisance

Apple gets with the AI program

Nutshell: Apple announced a bunch of new AI features, coming soon to a device near you.

More: The specific “Apple Intelligence” features—including a better Siri, generated emojis, and a ChatGPT integration—have been getting a lot of attention, particularly for their focus on privacy (including processing most data on device instead of sending it to a different service). But they’re just as notable for what they lack: Apple isn’t touching news with AI (for good reason), and apparently no money is changing hands in the Apple-OpenAI deal, meaning that both are betting that this partnership will be bilaterally profitable, whether through more publicity, customers, eventual licensing deals, or something else.

Why you should care: “Apple rains on the AI hype cycle” is what Politico headlined their coverage, and their point is that Apple, which has always been the deliberative, mature tech company in the room, is showing how consumers will actually use AI. Given that most people haven’t used and many haven't heard of generative AI, a lot more people are about to be exposed to it, and Apple will likely define people’s expectations for how AI should integrate into our lives going forward. They’ve also “Sherlocked” a bunch of apps that will now be redundant, so if you were planning a generative AI startup, maybe hold off. Notably, though, Apple Intelligence will only be available on the latest iPhone 15s, making their tagline “AI for the rest of us” a little disingenuous.

China’s self-driving cars leap forward with little oversight

Nutshell: China has more autonomous cars on the road than anywhere else, but the government is accused of covering up accidents.

More: At least 16 cities are allowing self-driving cars from 19 companies on public roads, but news of accidents has been censored on Chinese social media. Companies like Waymo and Cruise have been operating in the US for a few years, but under heavy scrutiny (both of them are under investigation for safety issues). Although China has a permitting regime and some regulations, no government agency is directly responsible for safety, and detailed rulemaking has been deferred until 2026. China’s innovation strategy usually involves balancing innovation and disruption (and sometimes selective enforcement of rules), and apparently sweeping a few accidents under the road is considered an acceptable price to pay to keep the industry going full speed ahead.

Why you should care: “Move fast and break things” is not a great idea when the things moving fast are two-ton hunks of metal and the things getting broken are people.

Stanford Internet Observatory disbanded

Nutshell: The US’s preeminent disinformation and child safety research group is shutting down after a conservative onslaught.

More: Stanford spent millions of dollars defending the group in court after three lawsuits by conservative groups alleging that the SIO colluded with the federal government to suppress speech. They’ve apparently decided it’s no longer worth the trouble, with its researchers recently informed that their contracts are not being renewed. The SIO did groundbreaking work identifying how election and COVID misinformation spreads, which made them a favorite of the news media—they had thousands of media citations in 2022, their third year of operation—but also a target of conspiracy theorists and Republican legislators, who started suing it. The SIO’s child safety work and some of its trust and safety work will continue separately, but this is a loss for Internet research.

Why you should care: The SIO was doing really great work, and it will be sorely missed. But this was just one front in a broader war on academic freedom, especially in the field of disinformation and political influence campaigns. The University of Washington and New York University are also being targeted, and if academics become afraid to do this kind of research because they might be personally named in lawsuits or subpoenas (as Stanford’s staff, undergrads, and grad students have been), or universities are afraid to support it, then we’ll all be worse off.

Amazon’s One Medical triaging sick seniors with undertrained call center workers

Nutshell: At least a dozen elderly patients with urgent symptoms (including of blood clots and heart attacks) were not escalated by call center workers, putting their lives at risk.

More: Those patients should have been told to go to emergency rooms or urgent care, but were often instead given doctor’s appointments for days later. Call center employees, many of whom are contractors, are not required to have medical experience. Employees say that the two-week training period is extremely crammed and worry that “call center staff were making medical decisions they weren’t qualified to make”… which seems true since they’re literally triaging elderly patients. Unfortunately, remote triage is basically unregulated.[1]

Why you should care: Telehealth and remote healthcare are becoming more common, but when they’re not being run by medical professionals, patient wellbeing might actually be at risk. Unfortunately, this is a logical consequence of a for-profit healthcare system that’s run by bigger and bigger health/pharma/tech companies.

Extra Reckoning

Last week, I was fortunate to attend the International Congress on the Responsible Development of the Metaverse in Alicante, Spain.[2] We had two packed days of presentations, and I was on the Metaverse Governance panel (I was the first presenter after the keynote speakers… no pressure, right?). Anyway, my presentation was on a paper I wrote with the wonderful Josh Cowls (of plus/minus) and my equally wonderful supervisor Luciano Floridi. We lay out how extended reality (XR) technologies (virtual reality (VR), augmented reality (AR), and the like) create unique benefits and risks for freedoms of assembly and expression.

A few highlights:

- The ability to overcome time, distance, and state constraints to assemble in VR.
- Concerns for protest in the physical world if police have AR facial recognition capabilities.
- The potential devaluation of virtual protest as a new form of clicktivism.

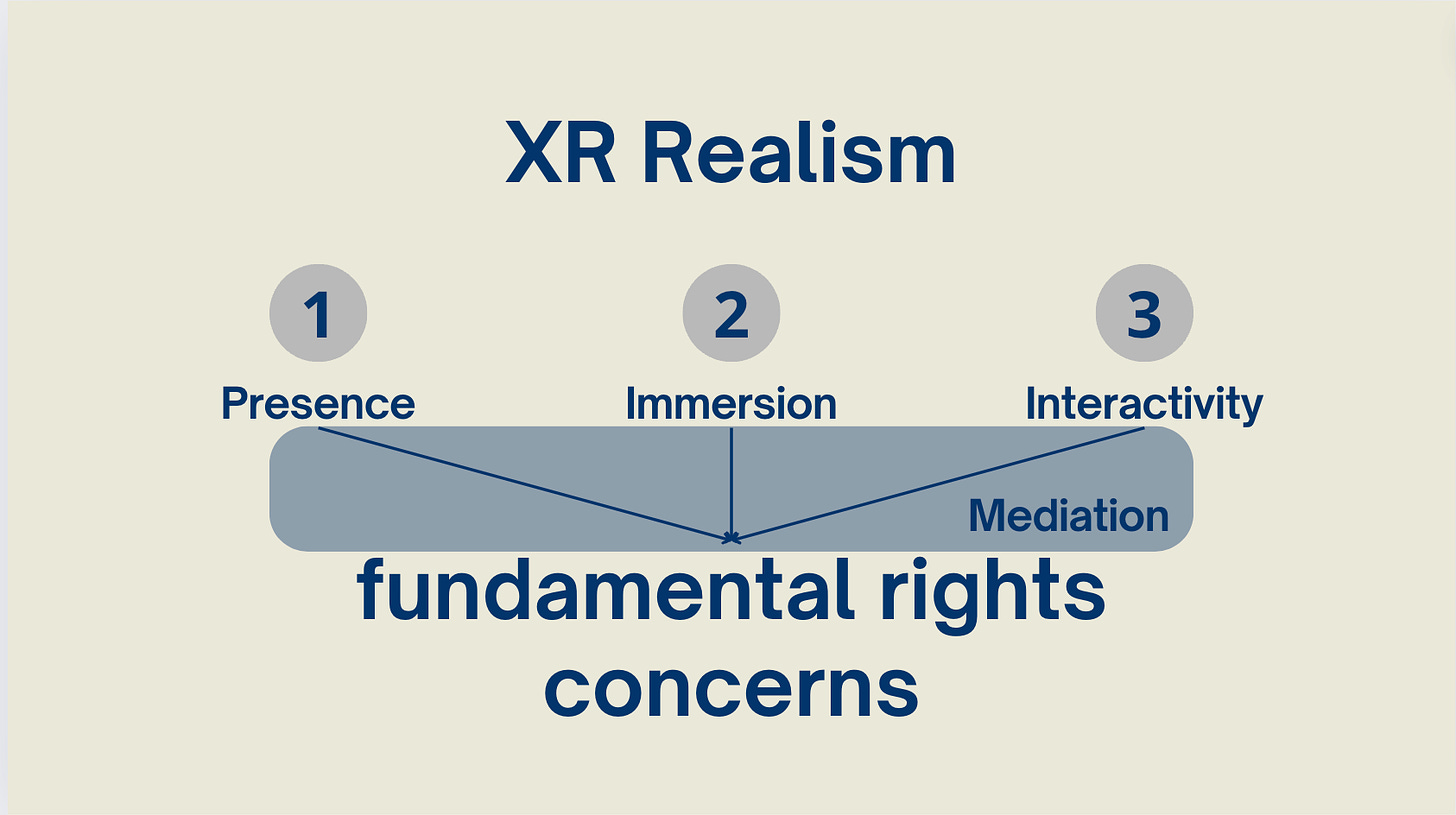

Many other digital technologies also implicate freedoms of assembly and expression, including social media, but the embodiment and realism that make XR experiences unique and delightful also mean that restrictions on and violations of rights become a bigger deal. We argue that because of this, we need a new way to think about platform responsibility to uphold fundamental rights, one that takes into account the level of embodiment of a platform, how much of a state function the platform is taking on, and some amount of due process when taking action against users. Stay tuned for news about when and where the paper will come out, but feel free to reach out if you’d like to read the working piece!

A few observations about the rest of the conference: for an event about “the metaverse,” there was little agreement on what the metaverse actually is, and there was an interesting division of opinions: a lot of pessimism about virtual social worlds (like VRChat/Horizon Worlds), but a lot of optimism about the broader bundle of XR technologies. This was accompanied by healthy debate over how we should go about addressing their potential impacts. Several papers addressed specific areas of EU legislation, and the consensus was that XR/immersive tech brings up enough novel issues that we’ll probably need new policies and legislation to address them. But this itself is not a new problem: tech is always advancing, and that often means that our laws have to be reinterpreted, revised, or rewritten to adapt. The fact that these conversations are happening, and that academics, industry, and policymakers are all in the same room, gives me hope that the EU, at least, will be able to stick the landing. US, your move.[3]

I Reckon…

that generative AI is also bringing back “move fast and break things.”

[1] Thanks to my brilliant colleague and Health Data Nerd Jess Morley for pointing this out.

[2] Alicante review: great city! Wish I’d had more time to explore, but the sights, weather, and food were all excellent. Would highly recommend walking up to the castle and grabbing a pastry at the central market.

[3] It will be many, many moves considering how far behind we are on tech regulation in general.

Thumbnail generated by DALL-E 3 via ChatGPT with the prompt “Please generate an abstract, brushy Impressionist painting in shades of grey and green representing the concept of the metaverse.”

![SIO home page. A program of the Cyber Policy Center, a joint initiative of the Freeman Spogli Institute for International Studies and Stanford Law School. Search Search Internet Observatory Home Research Trust and Safety Teaching About in·ter·net ob·serv·a·to·ry [ˈin(t)ərˌnet əbˈzərvəˌtôrē] n. a lab housing infrastructure and human expertise for the study of the internet](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fd0195a57-2d16-4e58-97dd-8fb6d65aaea0_2382x848.png)
