Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we cover yet another legislative win for my home state of Illinois, OpenAI’s new AI progress scale, the EU taking on X over the blue checkpocalypse, and how four people spent over a year pretending to be on Mars (for science). Then, I muse about why I do research, but really about why I still Tweet.

This edition of the WR is brought to you by… coastal New England explorations

The Reckonnaisance

Illinois requires parents to compensate child influencers

Nutshell: Parents in Illinois who use their children as social media influencers will have to pay a share of their revenue into a trust for the kids.

More: The modifications to the Child Labor Law require adults to put a portion of their earnings into a trust for children under the age of 16 if the children appear in at least 30% of the adults’ social media content, and allow children to sue their parents if they don’t. It’s the first law of its kind, but other states are considering similar ones.

Why you should care: Child actors are protected by law, but child influencers are not, which is wild considering how many child influencers there are, how much money they can make (in some cases, millions), and how vulnerable they are. Essentially, kid influencers are in the same situation as Britney Spears was under her conservatorship, which gave her father control over her finances and basically her entire life. They also have no control over their public exposure, which can cause embarrassment and trauma later in life and exposes them to creepy characters online, such as the network of pedophiles stalking Instagram child dance influencers.

OpenAI creates AI advancement scale

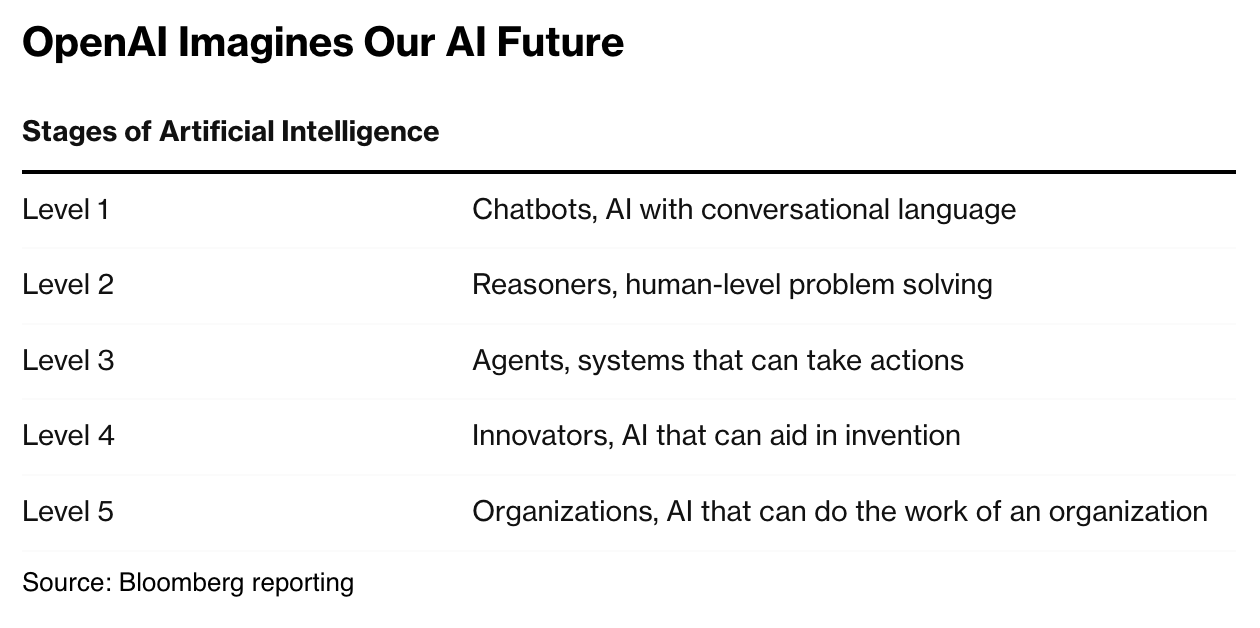

Nutshell: OpenAI developed a 5-level scale for stages of AI capabilities, from “chatbots” to “organizations.”

More: The scale, unreleased but reported by Bloomberg, is similar to a safety scale that Anthropic created, but focuses on capabilities rather than safety risks. OpenAI thinks we’re still at level 1 (chatbots) but on the cusp of level 2 (reasoners). It’s unclear what metrics will place AI systems in each category, and the scale will likely be OpenAI-specific. If there aren’t concrete standards, as Ars Technica noted, the scale could end up as a “communications tool to entice investors that shows the company's aspirational goals rather than a scientific or even technical measurement of progress.”

Why you should care: OpenAI is facing a lot of regulatory scrutiny right now (Microsoft and Apple have abandoned their plans to take board observer seats to avoid antitrust concerns), and this may be a way to gloss some of their safety efforts while also giving them a new marketing tool, especially as they’re reportedly nearing a breakthrough in reasoning models. It’s notable that there’s no mention or definition of artificial general intelligence (AGI), a term that has been extensively bandied about without any agreement on what it actually is, and I wonder if the industry is moving away from it as a benchmark/buzzword.

X’s blue checks may be illegal

Nutshell: The EU says that X/Twitter is violating the Digital Services Act (DSA) with its “deceptive” blue check system.

More: The finding is based on how X turned its verification system into a pay-to-play system where anyone can purchase a blue verification checkmark regardless of account authenticity. The EU also says that X isn’t complying with ad transparency and research data access provisions. This is one of the first tests for the EU’s relatively new DSA, which focuses on consumer protection in digital services. And they picked a doozy. Unsurprisingly, Elon Musk is not taking it well, accusing the European Commission of censorship.

Why you should care: The EU is becoming a global tech regulator, and its rulings against Big Tech companies might very well affect their operations in the rest of the world. The “checkpocalypse” caused mass confusion, and in my opinion Twitter Blue or X Premium or whatever it’s called now has made the platform a worse place to be because blue-check bots get promoted to the top. But there’s also a lot of academic research that happens on X, and not allowing researchers access to the data they need has been a really severe hindrance to that.

NASA mock Mars mission wraps up

Nutshell: Four people lived in a mock Martian habitat for over a year to explore the effects of a Mars mission on crew health, performance, and needs.

More: The “Crew Health and Performance Exploration Analog” (CHAPEA) mission saw four people locked in what’s essentially a Martian soundstage for 371 days. They did simulated “Marswalks,” had to deal with the 22-minute communication delay, grew crops, and faced simulated mission threats. NASA did all this to study “how the crew performance and health changes based on realistic Mars restrictions and lifestyle of the crew members,” and they have two more planned. The crew seemed in good spirits on their exit, but some experts raised concerns that it was a largely pointless experiment because we know what isolation does to people (nothing good) and should be able to figure out how to allocate resources without these missions.

Why you should care: I’m endlessly fascinated by space, so I’m really excited about the idea of a mission to Mars, but even I wouldn’t have signed up for this mission. I really hope it gathered actually useful and important data, and it’ll be interesting to see what papers come out of it.

Extra Reckoning

Today: Why I Still Tweet

When I meet new people, sometimes I struggle to explain what it is that I do. “I’m a PhD student in Law, Science, and Technology” or “I’m a researcher at the Yale Digital Ethics Center” usually provokes either blank looks or, if I’m lucky, an “oh cool… what does that mean?” If I don’t feel like talking more, I say “I’m trying to keep the killer robots from coming for us all.” If I want to say more, I usually pull something together about tech ethics and governance and policy and AI/XR. It’s the latter that I have the most trouble explaining, because it’s the thing that the fewest people have heard of/are interested in. But “I sit and think and read and then write papers” honestly still sounds kind of boring. Sometimes I have something cool I’ve done recently that I can talk about, like a conference, but other times I’m trying to explain a presentation I gave on something like digital identity and virtual social worlds, and it all sounds very esoteric and, I worry (in some situations more than others), boring.

In academia, the proof I have that I work on interesting things is in my published papers (every academic respectfully acknowledges that anything another academic works on is cool, even if it’s the most boring thing you’ve ever heard of). But I have to acknowledge that basically no one normal reads them.1 I like to think that I write well, but academic papers are long and jargon-y, and unless you’re really, really interested in the topic, you probably don’t have the patience to read 20-30 pages on it. And so I have to put my work out in ways that people can actually engage with.

I do not like Elon Musk. I do not like X. I sometimes have mixed feelings about this thing we call the Internet (just like I do about most of the tech that I research… which is why I research it). And yet, people are still on X (and also Mastodon and Bluesky and Threads, where I also am). And so it’s where I can publicize my work in ways that are more accessible than plopping 30 pages of text into someone’s inbox and expecting them to read it all. It’s a similar reason to why I write this newsletter: so I can have some sort of communication with people who aren’t in my academic bubble because, like any other bubble, it’s easy to start thinking that’s all there is. But then you remember that a huge number of people have no idea what generative AI or the metaverse is, and the phrase “technological mediation” probably makes most people’s eyes glaze over. So anyway, I Tweet so that people can get an idea of what it is that I actually do, and hopefully a taste of why it actually matters.

To save you a click, here is my thread on my recently published paper, Safety and Privacy in Immersive Extended Reality: An Analysis and Policy Recommendations:

1/ 🧵 Hot off the (digital) printing press—Our new paper, Safety and Privacy in Immersive Extended Reality https://doi.org/10.1007/s44206-024-00114-1.

With Isadora Neroni Rezende, Huw Roberts, David Wong, Mariarosaria Taddeo, and Luciano Floridi

2/ 📈 The premise: immersive extended reality (IXR) includes immersive VR & AR environments and is rapidly growing. However, it poses safety and privacy risks both novel and not. We analyze some of the risks and make EU policy recommendations to address them.

3/ 🚨 Safety Threats: IXR can threaten physical, mental, and social stability. Users can face physical harm (cybersickness, strobing, etc.), mental health effects (e.g., from harassment/abuse), and social risks (e.g., political polarization).

4/ 🛡️ Privacy Threats: Biometric data surveillance and its impacts on informational privacy and chilling effects are concerning, but we need to think about more than just data privacy (e.g., how nudging affects decisional privacy & device sensors infringe on local privacy).

5/ ⚖️ Current Legislation: Key EU laws like the GDPR, Product Safety Legislation, ePrivacy Directive, DMA and DSA, and AI Act address some risks, but there's room for tweaks to better address IXR.

6/ 📜 Some Policy Recommendations:

Physical Safety: Implement tools for users to mute, blur, or escape from others. Clarify laws on virtual assaults.

Mental Safety: Label AI avatars, establish age gates, prevent manipulative research, and ensure transparency in virtual surroundings.

Social Stability: Create & enforce accessibility standards, improve content moderation, and improve XR literacy.

7/ 🌐 Global Implications: While focused on the EU, these recommendations can apply to other national/global IXR governance, encouraging harmonized safety and privacy approaches.

8/ 💡 IXR has immense potential but must be managed responsibly to protect fundamental rights. We hope this inspires a conversation about regulation, trade-offs, and how to create a rights-respecting Extended Reality.

9/9 📖 Check out the full paper open access here: https://doi.org/10.1007/s44206-024-00114-1

I Reckon…

that AI Act Day should be a bank holiday.

Mike, you are perhaps the exception that proves the rule. And yes, it doesn’t matter how many people read something as long as the *right* people read it, but still.

Thumbnail generated by DALL-E 3 via ChatGPT with a variation on the prompt “Generate an abstract brushy impressionist painting representing a martian surface”
