Welcome back to the Ethical Reckoner. In this Weekly Reckoning, it’s an AI-palooza, with some good news for Assyriologists, mixed news (a foiled disinformation plot, debate over an AI safety bill), and bad news (X’s AI is wreaking havoc). Plus, reflections from a panel on election disinformation.

This edition of the WR is brought to you by… the sunsets of Rio

The Reckonnaisance

OpenAI disrupts covert Iranian political influence operation

Nutshell: OpenAI detected a group of Iranian ChatGPT accounts generating articles and social media posts about US politics, but said that the operation got little engagement.

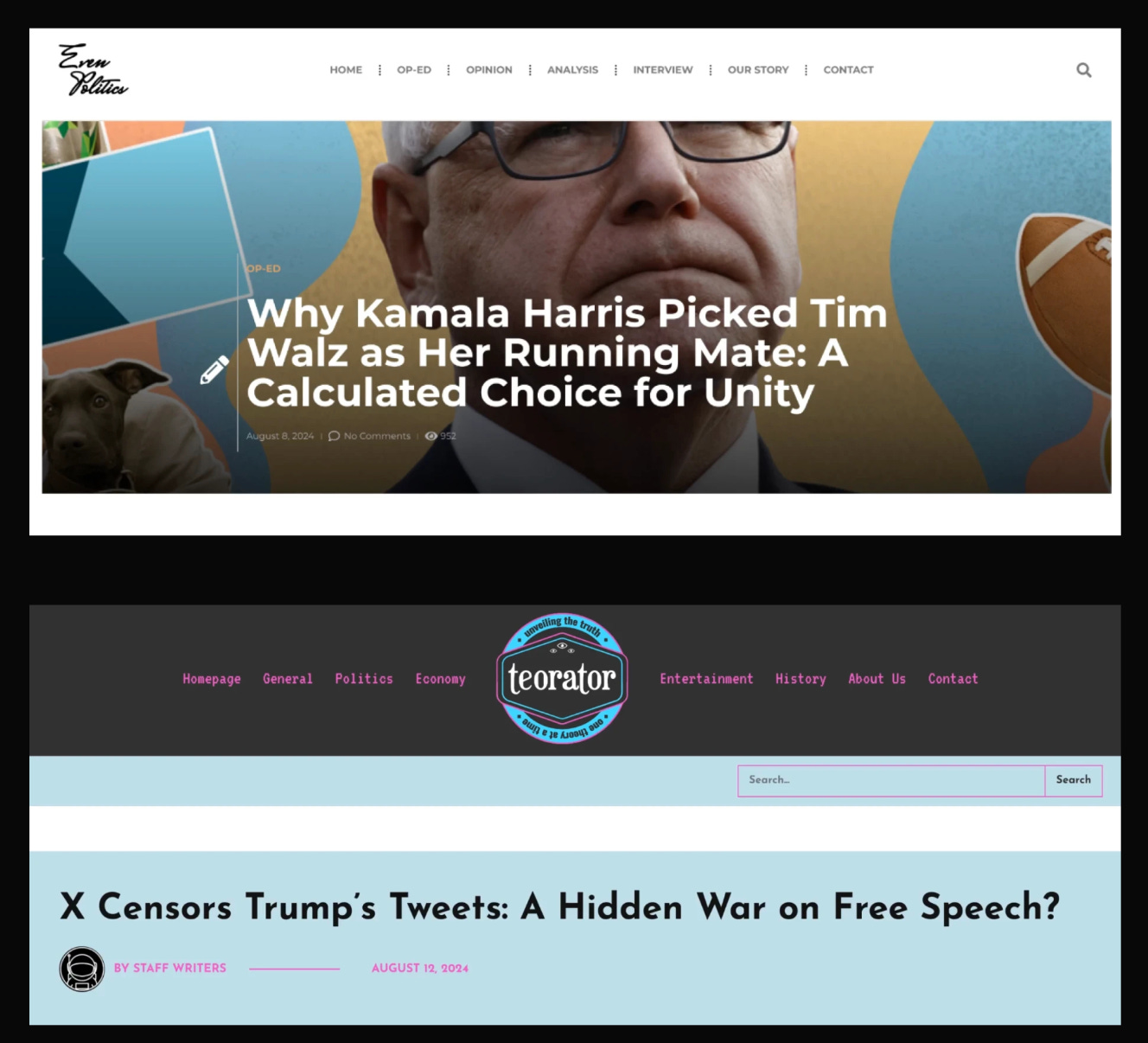

More: The operation used ChatGPT to generate longform articles with titles like “X Censors Trump’s Tweets: A Hidden War on Free Speech?” and “Why Kamala Harris Picked Tim Walz as Her Running Mate: A Calculated Choice for Unity”. As you can tell from the headlines, it generated articles from both sides of the political spectrum, and the same was true of the X accounts involved. OpenAI reported that the topics involved “mainly, the conflict in Gaza, Israel’s presence at the Olympic Games, and the U.S. presidential election—and to a lesser extent politics in Venezuela, the rights of Latinx communities in the U.S. (both in Spanish and English), and Scottish independence,” and that the accounts interspersed political posts with posts about fashion and beauty. While the posts got little to no engagement, the operation seems to be part of a broader influence operation reported by Microsoft.

Why you should care: As OpenAI notes, regardless of how much “audience engagement” these posts got, “foreign influence operations” are serious. But I have to wonder what Iran was hoping to accomplish with this. It generated content across the political spectrum, not focused on any specific candidate. Was it trying to exacerbate polarization? Test the waters for what content gets engagement? The operation also reflects a growing trend in disinformation: the creation of fake news sites (fake in the sense that they are posing as news sites and also that they are publishing fake news). Often these sites pretend to be local news sites (like “Savannah Time”), and they now outnumber real local news websites (1,265 to 1,213). 45% of them are targeted at swing states (though the most frequently targeted state is, oddly, Illinois), and Russia and dark-money donors are behind most of them.

California tries to prevent AI disaster, to dismay of some in Silicon Valley

Nutshell: California’s SB 1047 bill would require safety tests for large systems and hold companies liable if their AI systems cause “critical harm” to humans or property.

More: The bill has been flying through the California legislature despite major pushback and lobbying from some in Silicon Valley. Legislators made a few amendments suggested by AI companies, including removing a provision that allowed the attorney general to sue AI companies for “negligent safety practices” before a disaster occurred (they replaced it with an “injunctive relief” provision). The bill only applies to the largest AI models, using both compute and cost as metrics (let the budget crunching begin…). Most AI companies have issued some form of opposing statement, and prominent AI researchers are split. Some Silicon Valley legislators, including Nancy Pelosi, are also opposed to it, in part because of its regulations on open-source development.

Why you should care: As unlikely as AI disaster may be, it’s probably a good thing to have something on the books saying “hey, AI companies, we’ll sue you if you cause mass casualties.” At the same time, the law isn’t perfect, with some cautioning that legislating hypothetical risks too quickly could stifle innovation. I think the best thing it does is require companies to implement safety and security protocols, which could have a host of knock-on benefits by encouraging companies to think about safety. However, we also need to encourage developers to think about bias, deepfakes, and other ethical issues, and potentially address them with additional legislation. For an example, see…

X’s AI image generator launches, immediately causes chaos

Nutshell: The Grok 2 AI on X launched image generation with few guardrails, flooding the platform with fake images of Trump, Harris, and Elon Musk himself.

More: While most AI tools have safeguards to keep users from generating images of real people or copyrighted characters, Grok initially lacked these. Musk calls himself a “free speech absolutist” and this is absolutely in line with his “anything goes” attitude to AI—notwithstanding the irony of him suing advertisers to compel their speech on his platform—but there’s no intellectual excuse for reportedly allowing your AI to generate child sexual abuse material. X implemented some restrictions after criticism, but later tests found that it was very easy to get around those restrictions. TikTok faced a similar situation with their video generating tool, which would generate videos of people saying basically anything, but they actually pulled the tool rather than try a slapdash fix.

Why you should care: I was literally just on a panel about election disinformation (more below), and while AI hasn’t swung an election—yet—releasing a tool with zero safeguards is irresponsible at best and continues to undermine our media environment at worst. X ostensibly has a policy on synthetic and manipulated media that would prevent much of this, but when the boss himself is spreading deepfakes and releasing a tool encouraging users to break the rules, what does it matter? (The EU might beg to differ, as they’re already investigating X for various violations.)

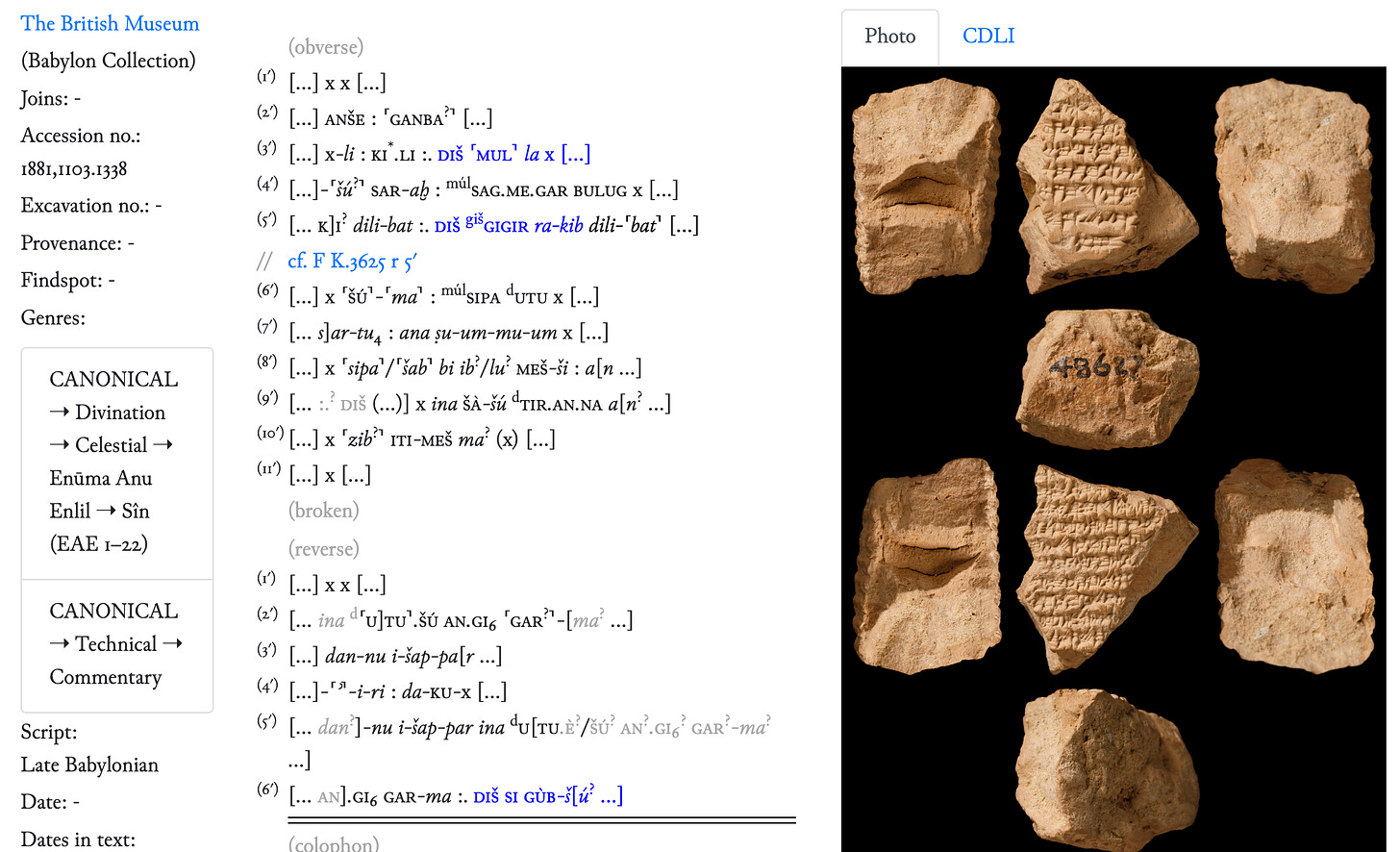

AI helps reveal epic of Gilgamesh

Nutshell: Machine learning is helping identify missing pieces of Gilgamesh and expand our understanding of Assyriology.

More: The “Fragmentarium” at the University of Munich helps match the 500,000 cuneiform tablets sitting in museum storage to existing and new works. This includes the approximately 30% of Gilgamesh that remains undiscovered. Assyriologists are reportedly thrilled, both by the new epics and by more mundane writing.

Why you should care: I harp on AI’s shortcomings a lot in this newsletter, but I love stories like this that remind me of why I do what I do—because I love technology and what it enables. I also wanted to be an archeologist in middle school, so this appeals to that past self. It also makes me wonder if our digital artifacts will be preserved in museums of the future, or if I should start printing this newsletter out and filing it away just in case.

Extra Reckoning

Last week, I was on a panel called “Legal and Policy Perspectives on How to Combat Disinformation in 2024 Election Cycles,” organized by the Wikimedia Foundation’s Summer Legal Fellow class. Alongside Rick Hasen, Michael Karanicolas, David Nemer, and our wonderful moderator Leighanna Mixter, we talked about the dangers of disinformation on social media in the biggest election year in the history of the world.

Our discussion covered a lot of ground, and I learned a ton from my co-panelists. David brought a lot of insights from his research on Brazil. Michael talked about how anti-disinformation laws are being used to attack journalists in emerging democracies (though other countries are trying such laws too, including Canada, where a law criminalizing the dissemination of unknown falsehoods was struck down as unconstitutional). Rick discussed how the universal acceptance of the importance of “loser’s consent” (when the loser of an election concedes) has been undermined by rhetoric around stolen elections in the US. And while most disinformation is still the “traditional” sort, because I focus on digital ethics, I spoke about AI-generated disinformation (especially the threat it poses to down-ballot and international races) and also about the Digital Ethics Center’s project with Wikimedia analyzing disinformation on Wikipedia in the run-up to the 2024 US presidential election.

The Wikimedia team had a lot of great questions for us, but one of my favorites was the last one, asked by an audience member. They referenced a Hannah Arendt quote and asked how disinformation plays into the dynamics she describes:

"The moment we no longer have a free press, anything can happen. What makes it possible for a totalitarian or any other dictatorship to rule is that people are not informed; how can you have an opinion if you are not informed? If everybody always lies to you, the consequence is not that you believe the lies, but rather that nobody believes anything any longer. This is because lies, by their very nature, have to be changed, and a lying government has constantly to rewrite its own history… And a people that no longer can believe anything cannot make up its mind. It is deprived not only of its capacity to act but also of its capacity to think and to judge. And with such a people you can then do what you please.”

I really liked this question, and unfortunately, it’s highly relevant to our current situation. Rather than an unfree press, though, at least in the US, we’re worried about a media landscape that is so noxious and inscrutable that it’s impossible to know what to believe, with the result that you don’t believe anything. We’ve been seeing this since the 2020 election, but it’s ramping up. Just this week, Trump accused Harris of using AI to manufacture pictures of crowds greeting her, and Elon Musk has thrown out the X rulebook by tweeting deepfakes. Disinformation is typically sought out and consumed by a small knot of people (think of the conspiratorial corners of the Internet that attract the Flat Earthers and vaccine skeptics), but it’s increasingly being put directly in front of us on social media. As we discussed last week, the firestorm around Olympic boxer Imane Khelif was stoked by Russian disinformation networks and amplified by algorithms.

While this is an evolution in the medium and transmission of disinformation, it’s not a sea change. History doesn’t repeat itself, but it does rhyme, and in the US, we’ve had several previous stanzas. As I said in my response, the latest season of Rachel Maddow’s podcast Ultra made this point incredibly well, telling the true story of the ultraright on the rise in WWII-era US and how our democracy was very much at risk.

Whether it’s Father Coughlin on the radio, Joseph McCarthy on his bully pulpit, or Trump on X, the US has long had political figures pushing disinformation for their own ends. Regardless of medium, regardless of their specific goal, they all aim to make sure that the “people are not informed,” leaving us unsure and unable to make up our minds. And in a time when prominent people are trying to discredit our media environment, organizations like Wikimedia that rebuild trust, that keep track of our history and give us information we can believe, are more crucial than ever.

I Reckon…

that being stuck on the ISS for eight months is still probably better than riding back to Earth on a Boeing.

Thumbnail generated by DALL-E 3 via ChatGPT with the prompt “Make me an abstract Impressionist brushy painting in shades of orange of a Rio sunset”.

Regarding your Extra Reckoning section:

The disinformation you mention appears to be one-sided. Is there no disinformation from the opposing side, or were you unable to find examples?

You state that the ramping up of disinformation is recent, but this phenomenon actually began much earlier, such as during the 2000 election.

I disagree with your claim that disinformation is only sought out and consumed by a "small knot of people." I believe its reach and impact are more widespread.

Could you address these points and provide a more balanced perspective on the issue of disinformation?