Welcome back to the Ethical Reckoner. In this (slightly belated) Weekly Reckoning, we cover Microsoft’s new nuclear reactor, Instagram’s new teen safety features, and California’s new deepfake laws, plus how talking to a chatbot can reduce conspiratorial beliefs and my take on the UN’s new report on governing AI for humanity.

This edition of the WR is brought to you by… apple picking to ring in the equinox.

The Reckonnaisance

Three Mile Island comes back online… for Microsoft

More: Microsoft is probably going to use the energy for its data centers and AI research. And honestly, as slightly dystopian as “let’s bring a nuclear reactor back online for this private company” sounds, it’s a pretty good solution for power-hungry data centers, since governments apparently don’t want the energy. Of course, we could/should be building even more reactors for non-commercial uses, and maybe this will help us get there.

Why you should care: AI is triggering a nuclear revival, which may sound scary but is probably a good thing because nuclear power is actually really safe. It’s fallen out of favor, but in the absence of a fusion breakthrough/significant measures to advance other clean energy, if AI is what it takes to get us to build more fission plants, I can’t really complain.

Instagram adds new teen safety features

Nutshell: In advance of possible legislation, Instagram is making changes to teen accounts.

More: Instagram is making teen accounts private by default, implementing messaging and content restrictions and muting notifications overnight. For teens under 16, parents will have to approve changes to the default settings. However, if a parent isn’t engaged with this or doesn’t want to create an Instagram account to supervise their teen, it seems like there’s basically nothing stopping a teen from inviting a friend or burner account to be their “parent” and change these settings at will. Meta says they’ll be looking to make sure this doesn’t happen, and some kids will certainly slip through the cracks, but the proof will be in how many. Skeptics say that this is probably an attempt to pre-empt stricter legislation and doesn’t go far enough.

Why you should care: Hopefully at this point I don’t have to keep harping on about why keeping kids safe online is important. At the same time, it’s a thorny topic. In general, I think we’re over-protective of kids in the physical world[1] and under-protective in the digital world. We shouldn’t let the pendulum swing too far in the other direction, for instance by giving parents direct access to their kids’ DMs (these changes don’t; they just let parents see who their kid is messaging). But if parents don’t take action, the measures won’t be effective. These measures, combined with efforts to drill into parents that these are easy steps they can take to keep their kids safe, will hopefully help, although there’s more to be done, like allowing kids to directly report when they receive an inappropriate message.

California passes anti-deepfake bills

Nutshell: Governor Newsom signed three bills that attempt to safeguard elections from deepfakes.

More: The first, which goes into effect immediately, prohibits individuals from knowingly distributing deceptive election-related AI-generated or altered content in the window from 120 days before an election to 60 days after it. The second and third, which would go into effect next year, would require campaigns to disclose when an election ad is AI-generated and require platforms to label such content. The laws are already being challenged in court over free speech concerns.

Why you should care: Even though AI hasn’t been the election disruptor that many feared, it seems eminently reasonable to require campaigns and platforms to label when they use AI in election-related material, especially since we’re already seeing deepfakes wielded in elections in the US and abroad. Critics say that the laws are too broad, but they explicitly don’t ban parody or satire, and when the federal government isn’t acting, we can’t blame California for doing so.

Talking to a chatbot can reduce conspiracy beliefs

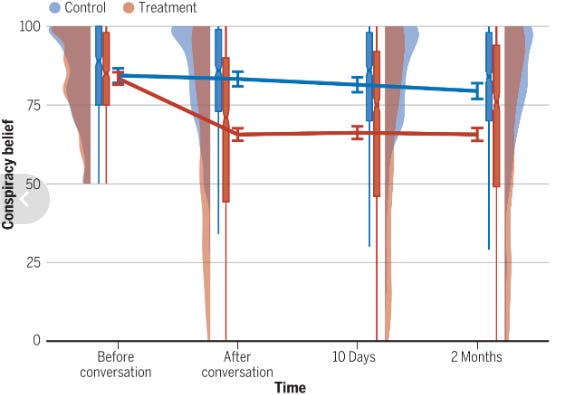

Nutshell: A new study shows that conversing about conspiracy theories with an AI chatbot reduced conspiratorial beliefs for months.

More: The effect was large (about a 20% reduction in conspiracy beliefs) and persisted for at least two months (the limit of the study’s follow-up), even though participants only went through three rounds of conversation. The chatbot was instructed to counter conspiracy beliefs with evidence, and the authors found that the information it provided was accurate.

Why you should care: Persuading people to stop believing in conspiracies is really hard. Even though we caution people against believing what a chatbot tells them, people still tend to trust chatbots, and this may be one instance where that trust is a positive influence. If this study replicates and its methods are expanded, it could be a way to decrease conspiratorial beliefs more broadly. Got a conspiracy-driven relative? Take a look at the methods section and send them to ChatGPT.

Extra Reckoning

How should AI be governed? Who should make the rules, and what should they be? These are questions I spend a lot of time thinking about, and the UN has just issued a big report opining on them called Governing AI for Humanity. It’s 101 pages long, but I read it, and I have some thoughts. I could write a whole paper on this (don’t tempt me), but here I’ll just talk about the seven recommendations it makes. (I discussed some of these points in a recent thread.)

1. Establish an international scientific panel on AI to create scientific consensus on AI “capabilities, opportunities, risks and uncertainties” and issue reports on how AI could be used for the public interest.

Because AI governance is such a values-laden topic (whose definition of fairness do we use? Whose rights do we respect and prioritize?), I think that having dialogues that are too high-level is counterproductive. Scientific consensus is vital because it forms the building blocks of larger forms of consensus, and I think that lower-level, less politicized discussions are essential to making sense of governance issues.

2. Hold twice-yearly “policy dialogues” on AI governance.

These would glom on to existing UN events, but I fear they’ll fall prey to the issues I mentioned above. They’re supposed to be for sharing “best practices on AI governance that foster development while furthering respect, protection and fulfilment of all human rights,” but while I support a human rights-based AI governance framework, not all countries do, or at least not all countries can agree on how to interpret human rights. The report also calls on countries to voluntarily share “significant AI incidents that stretched or exceeded the capacity of State agencies to respond,” but states are going to be extremely reluctant to do this and admit when they’ve failed.

3. Create an AI standards exchange to figure out what standards we have and what standards we need.

Standards are essentially technical agreements on how things should be designed or work—standards are why you can trust that your car is safe, that your card payments will work and be secure, and why you know you won’t be electrocuted when you plug in your phone. But there are multiple standards organizations, some non-AI standards will apply to AI, and countries compete to influence different standards, which makes it a very thorny landscape that needs to be mapped.

4. Set up a “capacity development network” with regional nodes across the world to catalyze development and foster collaboration.

This would be great. AI development capabilities are not evenly distributed and are often siloed, so formalizing a collaborative network would support a “bottom-up, cross-domain, open and collaborative” development paradigm in the public sector that would contrast with the closed, private-sector-driven model we have now and promote AI for the public interest.

5. Establish a “global fund for AI to put a floor under the AI divide” by providing resources to developers from countries with fewer computing and data resources.

Like #4, this would be so helpful to promote AI development in under-resourced countries. The trick would be funding it, and then keeping it funded—while countries and companies may initially put some resources into it to look good, wealthy countries’ failure to follow through on COVAX funding for COVID vaccines may be indicative of how interest might fall off.

6. Create a “global AI data framework” and a data commons to promote interoperability and data sharing.

Solidifying “global principles and practical arrangements for AI training data governance and use” would be helpful; right now, everyone is just kind of figuring it out as they go. However, I think there’s essentially zero chance of anyone agreeing to a data commons. Countries are hanging onto their data with a death grip, partially for national security reasons, and it’s extremely unlikely that, say, the US would contribute anything to a data commons that China could also access.

7. Stand up an AI office within the UN.

I like their vision of this as “light and agile” and relying on existing institutions when possible (again, this reflects the importance of a decentralized AI governance framework). These other efforts need coordination, but with minimal faff and overhead; spending ages spinning up a new institution would hurt the chances of actually achieving its goals. There’s an ambitious list of first-year deliverables, and it would be impressive if the office could achieve all of them, but first it needs support and buy-in.

Overall, I was pleasantly surprised by this report. But in proposing what “could” be done, it skirts around the question of what can be done. How will these proposals lead to agreement between the US and China? What will countries be willing to cede to global governance? These problems are deep-rooted, and I didn’t expect the report to answer them; there’s no easy answer. I’m sure these proposals will face their share of difficulties and roadblocks. But they seem like a good start.

I Reckon…

that Cards Against Humanity suing Elon Musk is the best news one could hope for.

[1] American schools being a notable exception.

Thumbnail generated by DALL-E 3 via ChatGPT with the prompt “Please create a brushy abstract impressionist painting in shades of greens on the theme of newness”.

![a chart showing ballooning standards from the IEEE, ISO/IEC, and ITU since 2018](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fb4ddbb64-e607-4207-acf1-27f4f775dd51_1574x984.png)

![216 When we look back in five years, the technology landscape could appear drastically different from today. However, if we stay the course and overcome hesitation and doubt, we can look back in five years at an AI governance landscape that is inclusive and empowering for individuals, communities and States everywhere. It is not technological change itself, but how humanity responds to it, that ultimately matters.](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F35352a0a-d4d6-4ce6-87fc-e8857167c0b5_952x450.png)