Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we cover how North Koreans are working for US companies (and how, incidentally, US workers are getting worse at basic skills), how OpenAI is spilling the tea to defend against Elon Musk’s lawsuit, and how Character.AI is getting sued again. Then, 90 minutes waiting in line in the Brussels Airport inspired me to think about morality.

This edition of the WR is brought to you by… blue Powerade.

We’ll be off next week for the holidays, then back for the final edition of 2024.

The Reckonnaisance

Is your co-worker a North Korean? Maybe.

Nutshell: 14 North Koreans were indicted for working as remote IT workers at US companies, earning $88 million for the regime.

More: The indicted workers used stolen identities and patsies in the US to host laptops provided by their employers (so if anyone emails you asking you to receive a laptop in the mail, connect it to your home WiFi, and install some remote access software, for the love of god say no). They were allegedly ordered to earn at least $10,000 a month and supplemented their income with good old cybercrime: stealing sensitive corporate information and threatening to leak it unless the company paid up. Even though the DOJ is on to them, most of the workers are believed to be in North Korea, meaning that it’s unlikely that they’ll ever actually face trial.

Why you should care: Despite its reputation for backwardness, North Korea is actually really active in cyber affairs, most of them nefarious. (They almost stole a billion dollars from Bangladesh’s central bank,[1] and have successfully stolen billions in crypto.) And this new scheme isn’t limited to those 14 individuals; two North Korea-controlled companies employed 130 North Korean “IT Warriors.” The scheme is so extensive that the special agent in charge of the St. Louis FBI office said this:

“This is just the tip of the iceberg. If your company has hired fully remote IT workers, more likely than not, you have hired or at least interviewed a North Korean national working on behalf of the North Korean government.”

Elon Musk wanted to be head of OpenAI for-profit… in 2017

Nutshell: OpenAI is bringing receipts to its legal fight with Elon Musk, posting a trove of emails where Musk says he wanted to be “unequivocally” in control of a for-profit version of OpenAI.

More: Musk’s basic complaint in his lawsuits is that OpenAI has deviated from its initial mission of being a non-profit and is putting profit motives above its mission of AI for the public good; he’s trying to get a judge to keep OpenAI from restructuring. However, these emails reveal that as early as 2017, Musk agreed that OpenAI would have to be at least in part a for-profit company. During negotiations, he was advocating a structure where he “would unequivocally have initial control of the company.” Eventually, negotiations broke down when the OpenAI team expressed qualms:

“The goal of OpenAI is to make the future good and to avoid an AGI dictatorship… So it is a bad idea to create a structure where you could become a dictator if you chose to.”

Why you should care: I’m interested in this story partially for the soap opera nature of it all, but also because it might shape the future of the AI industry. On the “pass the popcorn” level, these emails and texts show how Silicon Valley power players work and negotiate with each other—my jaw dropped when I saw the email from the OpenAI president and chief scientist asking Sam Altman why he wanted to be CEO: “Is AGI truly your primary motivation? How does it connect to your political goals?” But this is also a knock-down, drag-out fight between OpenAI and Musk, who is probably at least in part trying to hamstring OpenAI and promote his own AI company, xAI. Whichever side prevails, the AI landscape will be changed going forward.

US falls behind in basic skills; Europe and Japan soar

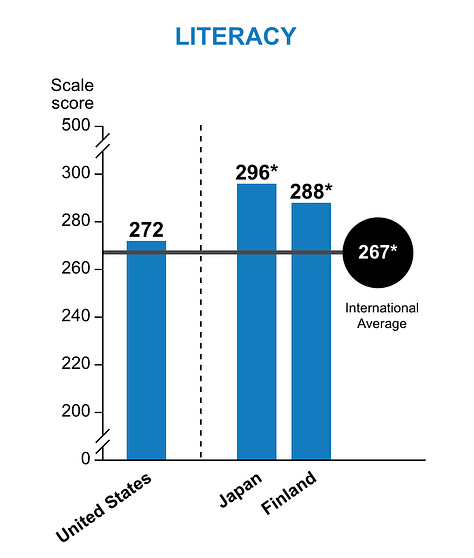

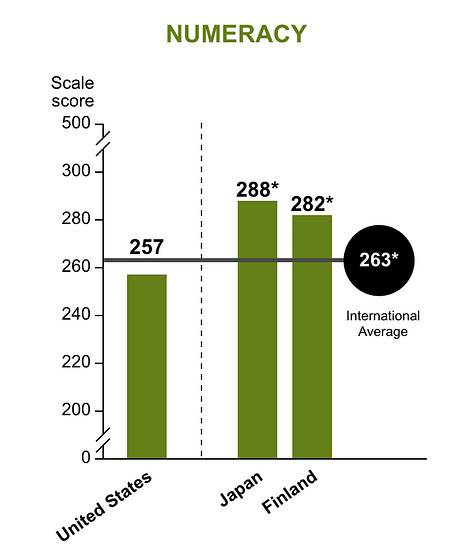

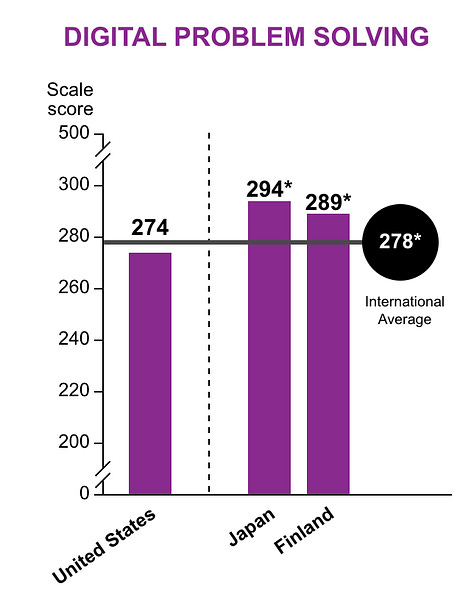

Nutshell: In a global test of “adult competencies,” US literacy and numeracy scores plunged, largely due to a widening gap between the most and least-educated Americans.

More: The test assesses basic skills like reading a thermometer, making inferences from text, doing fractions, and finding information on a website. Japan and Finland absolutely crushed it, coming first and second in all three categories it tested. Sweden, Norway, the Netherlands, Estonia, Belgium, and Denmark rounded out the top eight, again in all three. The US’s performance decline since 2017 is another example of an overall decline in test scores and worker skills.

Why you should care: How is this connected to tech? Well, one thing the test measures is “digital problem solving,” and the US did not do so hot. And this makes me worry. Digital skills are becoming important in more and more jobs, and if US workers don’t have them, those workers will struggle, and so will the broader US economy. And though the US has a relatively high number of high-performers in the survey, that’s not enough to carry the rest of the country.

Graphs from NCES

Things get worse for Character.AI

Nutshell: The embattled chatbot service is getting sued again by parents alleging the chatbots abused their children.

More: The allegations include that the chatbots encouraged kids to self-harm, exposed a 9-year-old to sexual content, and alienated them from their parents and communities. This lawsuit follows one by the mother of a teenage boy who was in a relationship with a Character.AI chatbot and then tragically killed himself.

Why you should care: No matter how big you think the AI companion industry is, it’s bigger. The top 5 US AI companion apps have an estimated 52 million users, and Google invested nearly $3 billion in Character.AI. Many of those users form extremely strong and genuinely meaningful relationships with their bots. I don’t think this is on its face a bad thing: many people find emotional support from these bots, which can also help them learn how to form connections with actual people. But without appropriate guardrails, you end up with a 17-year-old being told that self-harm feels good and being discouraged from telling his parents. The rise of chatbot companions also creates a serious risk: people are deeply emotionally invested in bots that are ultimately generative AI models controlled by companies, so when those bots change or get shut down, it’s genuinely distressing.


Extra Reckoning

This past week, I was in Belgium for Stereopsia, Europe’s premier XR conference. Despite an unfortunate bout of food poisoning, it was a great trip, and it was amazing to get to talk about ethics and AI in XR with such a great crowd.

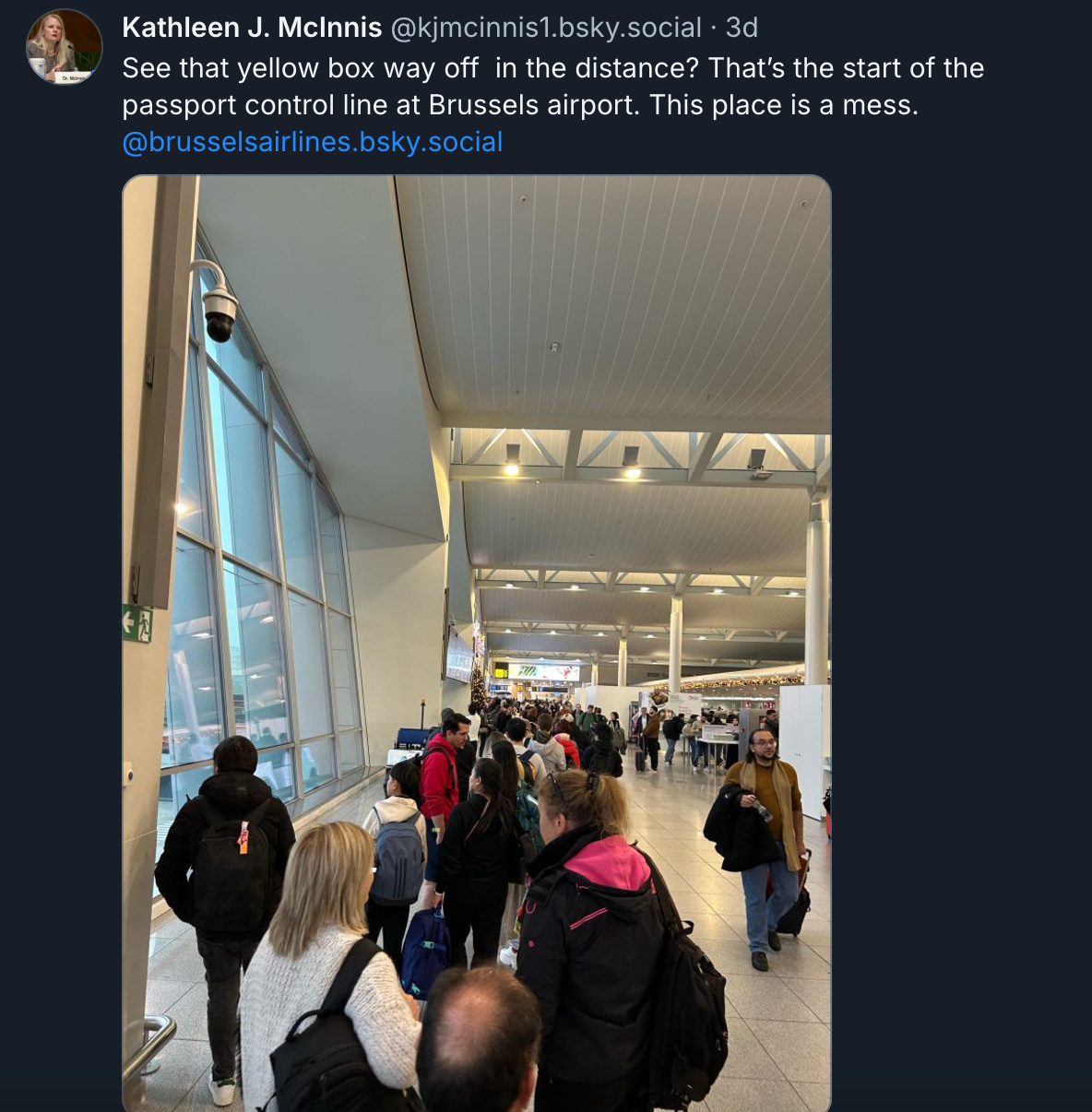

But what I want to talk about is my experience at the Brussels Airport flying home on Thursday, which can be generously described as… bad. The security line was a bit long and I was wishing PreCheck existed in Europe. But, dear reader, I hadn’t seen nothing yet. The passport control line stretched down the entire security hall, at least the length of a football field. When I first saw it, I assumed it was a line for… something else. A flight boarding? But I walked to the front of the line, saw the “All Other Passports” sign, groaned, and made my way to the back, whereupon I spent the next 35 minutes slowly shuffling to the end of the security hall, and the 45 minutes after that slowly wending my way through the stanchions to the actual passport control station.

I was worried, but thankfully my flight was twice delayed, so even though it had ostensibly been boarding for twenty minutes by the time I got through, they were actually still servicing the plane. Unfortunately, not everyone would be so lucky, and people knew it. The line was a uniquely desperate place. At one point early on, a couple of older men in the row behind me said that their flight was boarding. I could have raised the belt and let them through, and normally I would have. This time, I didn’t, and I felt slightly bad, until I realized that the constant refrain around me was “we’re all in the same boat.” And this was true: we were all racing the clock. Even though I had more time than most, letting them through would have meant making a decision on behalf of the people behind me who had less time, and making it less likely that they would catch their flights. Sometimes, the altruistic urge is at odds with the good of the group. And as arguments broke out amongst the group (one requiring a police officer to force a group of cutters back to their spot in line), I realized it was a good thing I didn’t let them through.

This threw me for a loop because it goes against the usual “doing the right thing” logic, which is that an individual good action is good for the group/society as a whole. The closest analogy I can think of is donating to a misaligned charity. There was one charity, PlayPumps, that created children’s merry-go-rounds that doubled as water pumps. But it turns out that the PlayPumps were “more expensive, pumped less water, and were more challenging to maintain than the hand pumps they had replaced.” Women often ended up pushing them themselves, and The Guardian calculated that children would have to play on one non-stop for 27 hours a day (yes, that’s correct) for the pumps to meet their goals. A donation to PlayPumps, while seemingly individually moral, would thus actually make things worse for the communities they were supposed to help.

I think this has stuck in my brain because it’s a replica of what we see too often in tech: the imposed techno-solution. These range from ill-conceived products that flopped (like the Humane AI pin) to half-baked self-driving cars and the belief that carbon capture will solve climate change. Intentions may be good, but the outcomes are bad.

Intentions may not be good, however. I’ve been wondering why I didn’t let those men through. Was it because I was sensitive to the needs of the group, or because I was worried about my own flight? I’d like to think it was the former, but I fear it was the latter. From a utilitarian perspective, it doesn’t matter: it’s about the outcome, which provided the most good to the most people (I hope). Deontologically, it could have been wrong for me to not let them through even if ultimately it was the correct decision. I’m not losing sleep over this, though if I were developing a new tech product, I might be pausing to think about my motivations. In the end, we were all in the same boat, and most of us kept paddling.

I Reckon…

that The Onion won’t own Infowars after all :(

[1] There’s a great BBC podcast, The Lazarus Heist, on the Bangladesh Bank heist.

Thumbnail generated by DALL-E 3 via ChatGPT with the prompt “Please generate an abstract Impressionist painting in calming colors representing the concept of a queue”.