Welcome back to the Ethical Reckoner! In this Weekly Reckoning, we have an update on the Vesuvius Challenge (not a TikTok trend, don’t worry), a scarier use of AI to generate fake IDs, and Meta’s AI push and “incoherent” manipulated media policy. This was on my mind because of the event I spoke at on Friday on AI and elections, which I recap in the Extra Reckoning (spoiler: AI isn’t the problem).

This edition of the WR is brought to you by: the lazy expat Super Bowl experience.

The Reckonnaisance

The Herculaneum Scrolls have been read!

Nutshell: A scroll carbonized by the eruption of Vesuvius has been deciphered by a team using AI techniques.

More: The 2000-year-old scrolls are essentially lumps of coal, but AI is helping decipher them. I wrote about the first milestone in the Vesuvius Challenge, when the first word (“purple”) was discovered. Now, over 2000 characters from one of the scrolls have been read and the Grand Prize of $700,000 awarded. The scroll, thought to be by the Epicurean philosopher Philodemus, discusses pleasure and following the Epicurean. Choice quote: “as too in the case of food, we do not right away believe things that are scarce to be absolutely more pleasant than those which are abundant.” (Cases in point: gelato (lots) > popsicles (few) in Italy, rice (abundant) > sourdough (scarce) in China, deep dish < any other pizza in Chicago.)

Why you should care: These are the kinds of stories that make me really excited about AI and technology. Before computer vision/machine learning (and CT scanning), these scrolls would have been lost forever. But now we can read them, prying open a window into what our predecessors thought 2000 years ago. If it had been, like, a grocery list, I may not have been so enthusiastic (actually, that would have been fascinating too). But the fact that they may be “2000-year-old blog posts”? As a blogger, I have to celebrate that. Also see: recovering old films using AI.

A new era of fake IDs is coming, powered by AI

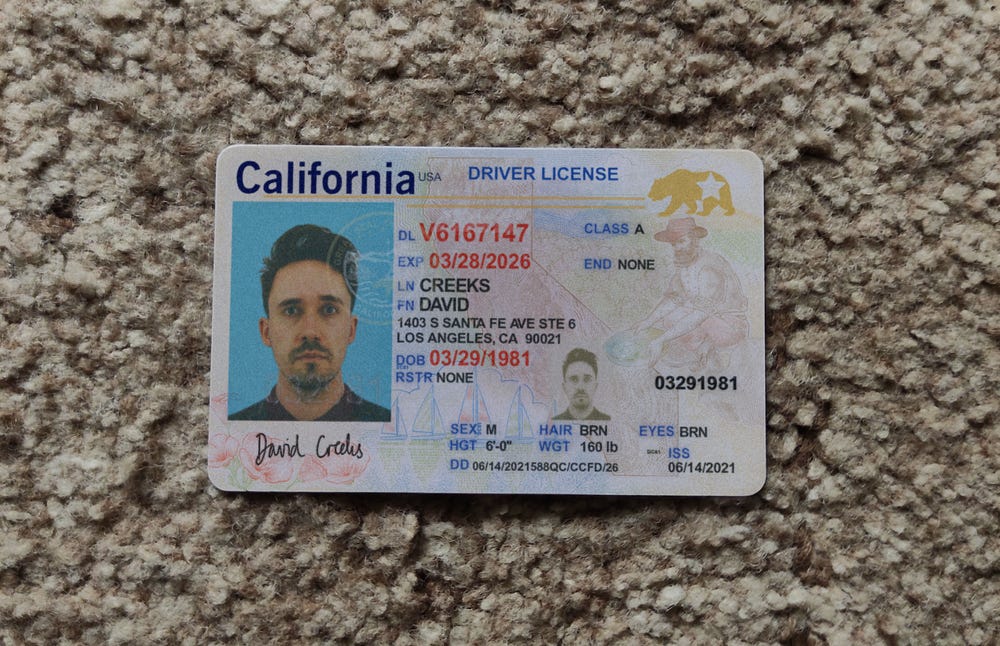

Nutshell: A site used neural networks to create fake IDs for $15 each that can fool online identity checks.

More: I say “used” because the site, OnlyFake, shut down after 404 Media investigated it, but as a proof of concept, it’s scary. 404 Media was able to generate a highly realistic California driver’s license and a British passport good enough to fool a cryptocurrency exchange’s ID check and sign up for an account.

Why you should care: The service didn’t print IDs, just provided photos of the ID against real backgrounds, but they’re realistic enough to bypass Know Your Customer checks at crypto exchanges, and potentially other places like banks and remote work situations. And, if we ever decide to do ID verification or age checks for, say, social media, this technology could render that moot, as anyone with a few bucks will be able to generate an ID realistic enough to bypass them.

Meta’s pivot to AI, brought to you by… your Facebook comments

Nutshell: Zuckerberg is pledging that Meta will build “general intelligence” to fuel its next generation of services, throwing the gauntlet down to OpenAI and Microsoft.

More: This new AI initiative may or may not end up being open sourced, but it definitely will be based on Meta user data—including pictures, videos, and comments. Zuckerberg estimates that this is bigger than the Common Crawl dataset, versions of which have been used to train LLMs like ChatGPT. That dataset contains 250 billion web pages, so saying you’ve exceeded that is a big deal. Between its massive chip orders and its data resources, Meta is in a really strong position to compete in AI.

Why you should care: Meta may have a large dataset to work with, but what they do with it is important. The unfiltered Common Crawl dataset contains hate speech, harassment, etc., and is mostly in English and thus not representative of human diversity, so using it creates issues and trade-offs in the final model. Facebook comments are not known for their civility, so what a model trained on them looks like in the end is anyone’s guess.

Facebook Oversight Board demands rewrite of “incoherent” manipulated media rules

Nutshell: Meta’s “Supreme Court” ruled that an altered video of Joe Biden should stay up, but criticized the policies that allow it to do so.

More: Meta’s Manipulated Media Policy covers only AI-manipulated videos that make people appear to say “words that they did not say,” not videos that make them appear to do things that they did not do. Since the video in question (altered to make it appear that Biden was inappropriately touching his granddaughter) didn’t involve speech or AI, it was ruled not to violate the policy. Ironically, even though the video policy is about preventing manipulated audio, Meta doesn’t have a policy forbidding standalone manipulated audio. The Oversight Board called on Facebook to overhaul its “incoherent” policies to cover a broader swathe of manipulated video and audio, and to label rather than take down offending posts, but Meta doesn’t have to follow its suggestions.

Why you should care: In its decision, the Board is especially concerned with this policy considering the number of elections coming up this year. There have already been AI-generated deepfake images and audio in the US election and in other elections worldwide. While disinformation scholars are split on how much influence AI-generated disinformation will have on the US election, Facebook should have a coherent policy in place for how to address it. Which brings me to…

Extra Reckoning

On Friday, I was fortunate to be a panelist for a Democrats Abroad UK event on AI and elections, and I wanted to take this space to reflect on it a bit. My wonderful co-panelists (Andrew Strait from the Ada Lovelace Institute and Sarah Eagan from the Center for Countering Digital Hate) provided incredible insights on the landscape of mis/disinformation and how we need to inoculate ourselves against it. In fact, the overall takeaway was that AI itself is not the problem, although generative AI does make generating fake images, videos, and (especially) audio far easier. Rather, the panic about the “AIpocalypse” is actually a symptom of broader issues in our information ecosystem, which is dominated by massive platforms that algorithmically promote engaging content regardless of whether it’s good or bad for the health of the infosphere.[1] And we don’t have good ways to know if content being promoted is AI-generated: AI companies and platforms are trying to watermark synthetic content (both visibly and invisibly), but these can easily be foiled. AI doesn’t fundamentally change the infrastructure for targeting and distributing disinformation, but it may exacerbate problems that already exist by facilitating both more viral and more targeted content. In other words, we’ve made our bed, and now we’re lying in it.

The real solution is to re-design our platformized[2] infosphere from the ground up; the Center for Countering Digital Hate advocates the STAR design approach (Safety by design, Transparency, Accountability, and Respect). This requires us to prioritize legislation and regulation, because platforms aren’t going to do this unless adequately incentivized. Meta and OpenAI are making lots of noise about their election integrity plans, but even if all of their policies were perfect (which they aren’t), we shouldn’t have to depend on the goodwill of Big Tech companies to safeguard our elections. Companies are not supposed to be the arbiters of democracy. The government is. And yet in this historically unproductive Congress, I’m not holding my breath.[3]

The other danger of relying on platforms is that they won’t address all elections equally. 2024 is the biggest election year in global history (half the planet is going to the polls this year), and different countries have different media ecosystems, different policies, and overall different electoral contexts. And, crucially, different levels of prioritization from platforms. Bangladesh’s January election was full of deepfakes (covered in WR 11), but campaigns stopped reporting them because platforms didn’t take action, so there was no point. This is a continuation of how content moderation is generally worse in non-English, non-Western contexts. Research also doesn’t tend to focus on developing countries, so we don’t know how much influence these deepfakes had.

Top-ticket elections in big countries (i.e., the US presidential election) will get attention from platforms because of the backlash they’ll get if something goes wrong. But less-scrutinized elections in other countries, and even more local elections? I wouldn’t be surprised if a well-timed deepfake or generated audio clip swung a school board, House, or international election, facilitated by the lack of measures to combat them and, ultimately, our cession of political power to the platforms that run our infosphere.

I Reckon…

that Bluesky opening its doors to all might make it a little less lonely (seriously, come on over).

[1] Basically the realm of information, data, and knowledge, populated by information-generating organisms, that crosses the physical and digital worlds.

[2] The increasing domination of the Internet by large online platforms.

[3] Independent of Congress, the FCC said that AI-voiced robocalls are illegal. Snaps for the FCC.

Thumbnail image is not AI-generated, but a close-up I took of interesting textures in Cezanne’s “Hillside in Provence” at the British Museum.