WR 22: The volunteers keeping science & software afloat; AI beer

Weekly Reckoning for the week of 1/4/24

Welcome back to the Ethical Reckoner. In this Weekly Reckoning, we cover an exciting/scary new OpenAI product, a scary/relieving cybersecurity near-crisis, a controversial social media bill, and some good news for beer drinkers. Then, some reflections on the critical but under-recognized areas of peer review and open-source software maintenance.

This edition of the WR is brought to you by… the Easter bagpipers of Leuven.

The Reckonnaisance

OpenAI holds back voice cloning tool… kind of

Nutshell: OpenAI announced it has developed a voice-imitating tool, but isn’t rolling it out (widely) (yet).

More: The tool, Voice Engine, can generate natural-sounding speech of a specific person in multiple languages with just a 15-second audio sample. OpenAI is allowing a few “trusted partners” to use this tool, but not rolling it out more broadly because of the fraud and disinformation risks. Some of the use cases OpenAI is promoting are translating videos/podcasts, providing reading assistance, giving verbal feedback in specific languages, and supporting non-verbal people and people with speech conditions. However, there’s already evidence that it’s being misused at HeyGen, one of the partners, which offers realistic digital avatars. Although OpenAI’s usage policy prohibits the nonconsensual imitation of other people’s voices, HeyGen is being used to “clone” women and promote Chinese-Russian relations on the Chinese Internet.

Why you should care: Many people have been advocating for slower rollouts of risky AI tools for years, and it looks like OpenAI is partially listening. They themselves acknowledge that this threatens voice-based authentication and elections—to say nothing of the scam calls that are on the rise—so they aren’t releasing it to the public yet. (I should note that there are other voice imitators out there, like the tool that was used to generate the deepfake Biden robocall.) However, it seems like they could have vetted their partners more closely, especially ones that provide services that threaten individual reputations and our infosphere as a whole, and the question of whether the potential benefits outweigh the risks hasn’t been answered.

Backdoor that could have “hacked the planet” discovered in the nick of time

Nutshell: A software engineer discovered a compromise in a widely used open-source software package; if distributed, it would have created backdoors into some of the most widely used systems in the world.

More: I’ll try to keep this as un-software-engineer-y as possible. Basically, a shocking amount of foundational software is open-source (i.e., the code is public and it is often maintained by a volunteer community). This software package, “xz Utils,” which compresses files (think of a zip file), is used in a lot of flavors of the Linux operating system, which is installed on many computers, servers, and other systems. Over the last two years, one of the maintainers of xz Utils had been gaining trust while adding malicious code. Recently, they had been pushing for its inclusion in production versions, which would be distributed across the world. Thankfully, an engineer who was suspicious that his code was running 500ms slower than usual dug into the package and discovered the exploit. Had it shipped, it could have allowed hackers to gain access to entire systems (of, say, banks, governments, schools, etc.), potentially facilitating data breaches, ransomware attacks, and all sorts of other bad things: “hacking the planet,” in other words. (If this was still too software-y, try this.)

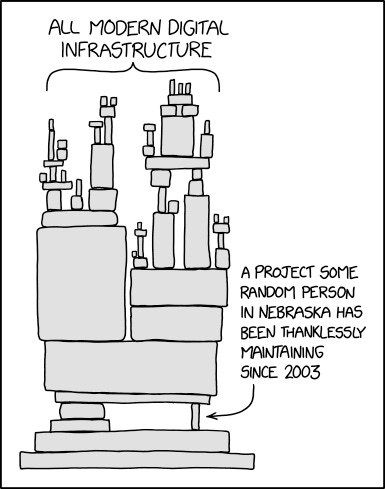

Why you should care: As this xkcd comic perfectly illustrates, a ton of our modern digital infrastructure is dependent on really important yet largely unmonitored open-source projects that volunteers thanklessly maintain. The original maintainer seemed burnt out, which allowed the mysterious “Jia Tan” (whom people suspect may be a state actor) to gain trust and get the malicious code into the software. There are mechanisms for a “peer review” of sorts (which we’ll talk about later), but those failed here. Truly, we just got lucky, because this could have been very, very bad.

Florida passes social media age verification bill

Nutshell: Starting next year, Florida will prohibit anyone under 14 from having social media accounts and ban minors from adult websites.

More: The law would require parental consent for 14- and 15-year-olds to have social media accounts. It also requires “anonymous age verification” confirming that users are over 18 on websites where more than one-third of the content is “material harmful to minors,” including sexual content. Penalties are $10,000-$50,000 per violation, and social media companies are likely to sue.

Why you should care: The bill has two goals: one, to keep kids and young teens off of social media, and two, to keep minors off of porn sites. By signing this bill, Governor DeSantis is staking positions both in the debate over whether kids should be on social media (especially hot this week with the release of Jonathan Haidt’s book “The Anxious Generation”) and the debate over kids’ safety online. States like Kansas are joining this, but with an even more ideological bent that includes “homosexuality” under the definition of “sexual conduct” deemed “harmful to minors.”

My feelings about the kids-on-social-media debate ping-pong. On the one hand, social media being bad for kids feels intuitive and “truthy,” especially when so many kids are suffering. But the data doesn’t support all social media being bad for all kids, and in moderation it can help children connect with their friends. I do think it’s probably better for kids to spend less time on social media (though this may be me nostalgizing), and it can definitely be bad for vulnerable kids. My current thinking is that a better solution than an outright ban (although platforms should be made to enforce the no-kids-under-13 rule) is to change algorithms so that platforms stop targeting kids with harmful content and to take measures that reduce the time kids spend on apps overall, but this is easier said than done.

AI-brewed beer, coming to bars near you

Nutshell: Perhaps the most important research to come out of my current university: AI could make better beer.

More: A research team (including members of the Leuven Institute for Beer Research, which I am delighted exists) trained machine learning models to predict what beer would taste like based on its chemical properties. The best model outperformed statistical tools at predicting beer flavor and consumer response, and it can also help brewers figure out what to add to make beer taste better.

Why you should care: Better beer? Yes please.1

Extra Reckoning

Peer review is broken.

You’ve probably heard the phrase “peer-reviewed research” or “a peer-reviewed journal” in some context where someone is trying to impress on you the importance and legitimacy of whatever scientific conclusion they’ve presented to you, and you might have a vague idea of what it is. But what exactly is peer review, and how does it work? And, because you know we’re going to get into it, how does it not work?

This is the lifecycle of a journal article in most academic disciplines:2

1. The paper is researched and written. You’d think this would be the hard part, but alas.

2. The article is submitted to a journal, likely through a web portal that looks like it was created in the 1990s.

3. The editor assigned to the piece finds at least two other academics (ranging from master’s students to professors) in the field to serve as peer reviewers. This process can drag on, because requested peer reviewers typically have a week to accept or reject the request, and many of them don’t seem to check their email.

4. The reviewers read the paper and assess its merits. There’s no set way to do this, but typically they should look at the methodological validity, whether the conclusions are supported by the evidence, and if the argument makes sense. They usually have around a month to do this.

5. The reviewers make a recommendation to the editor. This can be accept, reject, or major or minor revisions.

6. The editor makes a final decision. If that decision is revisions, the editor sends the feedback to the author(s), who revise the paper, and steps 3-6 repeat until the paper is accepted or rejected, usually with the same reviewers.

This process can take… a while. Academics are busy. And reviewers aren’t paid for reviews; it’s seen as part of the collective responsibility of academia. But recently, peer review has been facing a “crisis” of reviewer burnout; a journal editor quit over it. The number of reviewers editors have to ask is increasing (with early-career editors having to ask more3), and the whole process can take months.4

One thing that the image of the selfless academic heroically devoting hours to peer review overlooks is that a lot of times, peer reviewers… don’t do a good job. Published papers with content (and images) clearly generated by ChatGPT (including the phrases “as of my last knowledge update” and “as a large language model”) are popping up left and right. An older experiment sent a paper with eight deliberate errors to 300 reviewers, and the median number caught was two. Every year, papers are retracted over errors that reviewers should have identified (including the infamous vaccines-cause-autism paper).

Guess where else the community is supposed to catch errors and issues? Open-source software. And unless you skipped the Reckonnaisance, you know how that’s going (hint: burnout leading to severe security threats). I’m still fresh and I enjoy peer review because it gives me a preview of where research in my field is going. Not every peer reviewer (or software maintainer) can say the same.

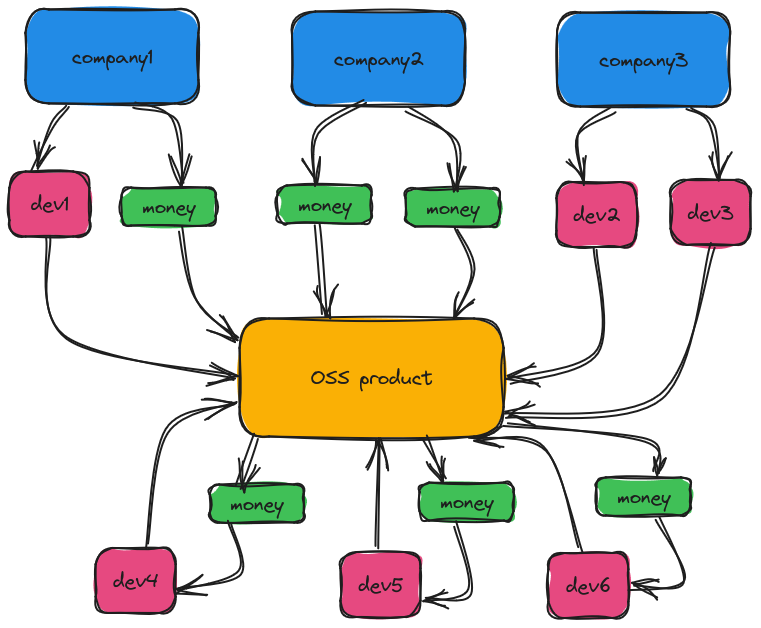

There are a lot of ideas for how to fix peer review, but I want to home in on two of them (one to fix the number-of-reviewers crisis and one to fix the quality crisis) that might also help fix open-source software. The first is compensating volunteers. People warn that paying reviewers will increase publishing fees (already thousands of dollars an article), but that doesn’t have to be the case when journal publisher profit margins are approaching 40%. Some people have floated $450 per review, and that might actually be unsustainable, but there is surely a number high enough to attract more than just hungry PhD students yet low enough that it doesn’t push journals to raise fees. An alternative is non-monetary compensation: some journals provide access to articles or other services. Elsevier, for example, gives 30 days of access to its Scopus database for each review, which is also nice because not all institutions provide access (looking at you, Bologna). The issue with compensating people for work on open-source software is that, even though for-profit companies are making money off of this software, there’s no direct link between the beneficiaries and the volunteers. We could build one, though.5

The second solution is training volunteers. No one teaches PhD students how to review papers. I learned by Googling and asking my friends. Graduate programs should include workshops on writing peer reviews. CS programs should teach students how to help with open-source software, including how to do code reviews, but even when curricula include pair programming and code review, they rarely talk about open-source software and repository maintenance. But it seems logical: if volunteers are taught how to do the work they’re volunteering for, and compensated in some way for it, they’ll be more willing to do it, and do it better.

I know that several journal editors read this newsletter, so I will be clear: I will keep reviewing papers because I enjoy it and because it’s good for academia, which may undermine my argument. But not everyone feels this way, and I worry that the peer review system is limping towards collapse, taking all academics down with it. This is a whole-of-academia problem, and it starts with universities, which need to teach their PhD students (at a minimum) how to write good peer reviews. Journals should explore ways to compensate reviewers, even non-monetarily; it feels good to be recognized for the effort reviewing takes. As for open-source software, it’s harder because there’s no built-in monetary pool. But more advocacy around compensation and teaching CS students about open-source software might inspire more of them to take part, and hopefully defend our digital infrastructure while they’re at it.

I Reckon…

that China is walking a perilous AI tightrope (new piece published in CEPA!).

1. And for the non-drinkers among us: better non-alcoholic beer? Very necessary.

2. Law is the exception; law review editors, who edit and publish articles, are all students.

3. As the guest editor for a special collection, I can relate.

4. *brief pause to check my articles’ statuses in the editorial manager*

5. The incentive structures for open-source software and peer review are slightly different, since OS maintainers are pure volunteers while peer reviewers are solicited, so it’s worth thinking about how this difference might affect OS dynamics.

Thumbnail generated by ChatGPT with variations on the prompt “Please generate an abstract watercolor with soft brushstrokes representing the concept of "volunteering"”.