Welcome back to the Ethical Reckoner. This Weekly Reckoning can be summed up as “tech regulation matters,” because we’re going to talk about how a principal was framed with generative AI and how Grindr (allegedly) shared user health data with advertisers. We also have an update on the first self-driving car racing league, and then I talk about my pseudo-intellectual reasons for loving Netflix’s The Circle.

This edition of the WR is brought to you by… the joys of having a top sheet again (it just makes sense, Europe).

The Reckonnaisance

Baltimore principal framed with AI

Nutshell: A disgruntled athletic director used AI to create an audio clip of his school’s principal making racist and antisemitic comments.

More: The principal was temporarily removed after the clip spread across social media, with many outraged commentators calling for his removal. After forensic analysis revealed traces of AI and human editing and a subpoena showed that the email address it originated from belonged to the athletic director, the principal was exonerated and the athletic director was charged with disrupting school activities (and also theft, stalking, and witness retaliation, because apparently he was also stealing money from the school district).

Why you should care: The last big news about deepfake audio was the robocall impersonating President Biden, but this shows the side of deepfakes that I’m more concerned about: targeting normal people who aren’t going to be fact-checked by national organizations. It’s what’s happening with deepfake pornography (98% of deepfake videos are pornographic, 99% of those depict women, and many are used to harass and shame), and it could be applied to local and non-headlining elections across the globe. A few other thoughts:

This shows the unique risks of generated audio; while generated videos still sometimes have telltale signs or quirks, generated audio is harder to detect.

The public did exactly what they should be expected to do—get outraged—but now that we have generated content, we may need to take a second to check our initial reactions.

The use of the “disrupting school activities” charge is interesting and shows that there may be less obvious aspects of our legal systems that might help combat misuse of generative AI. But we should couple that with measures to prevent misuse in the first place, because even though the principal was exonerated, it’s not “no harm, no foul”: he and the school faced threats and emotional distress, and probably still face reputational damage.

Grindr sued for sharing users’ HIV statuses

Nutshell: LGBTQ+ dating app Grindr is being sued in the UK for allegedly sharing private data, including HIV status, with advertisers without consent.

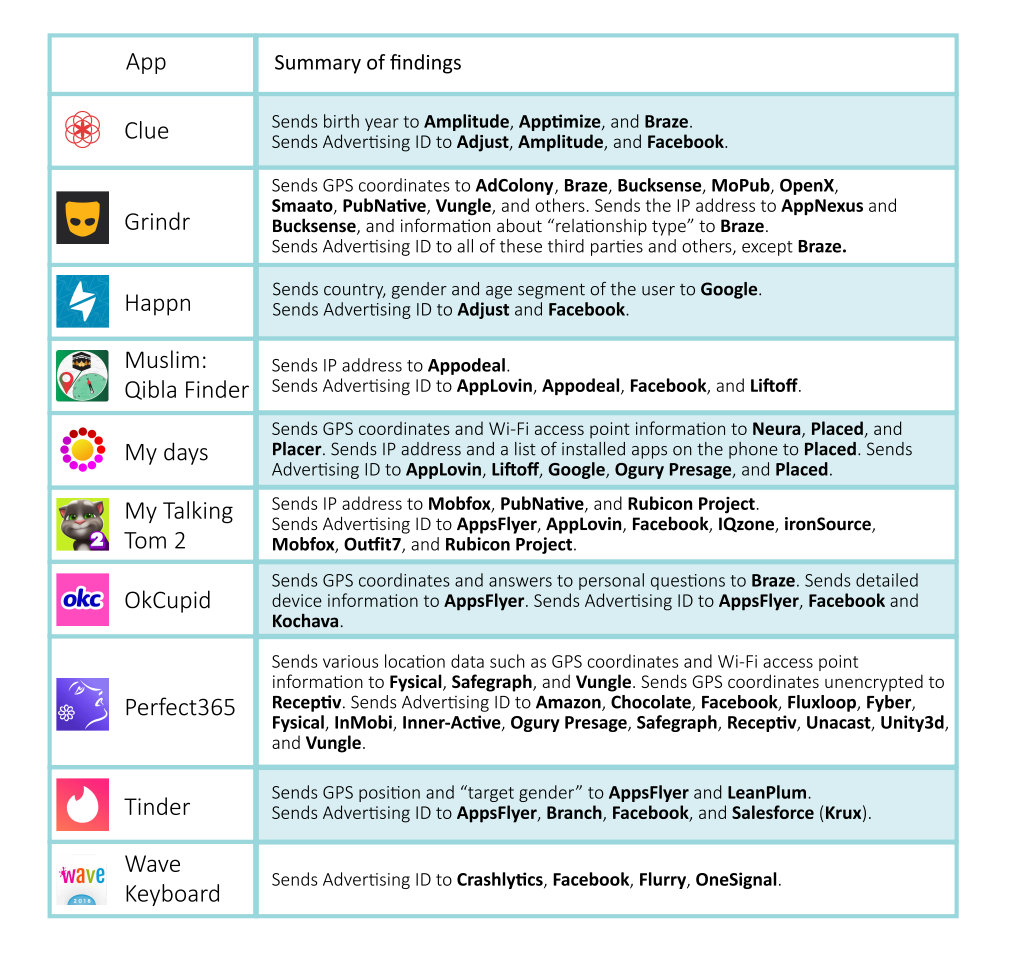

More: The class action suit is being organized on behalf of people who used the app between 2018 and 2020. Grindr claims that they have never monetized user health information and that the claim is “based on a mischaracterization of practices from more than four years ago.” Those practices appear to be sharing users’ GPS coordinates, sexuality, HIV status, and other data with two app-optimizing companies. Grindr doesn’t have a stellar privacy track record; in 2021, they were fined €6.5 million for violating the GDPR by sharing private user data (not including HIV status) with advertisers.

Why you should care: This is why privacy laws matter. Even if Grindr’s denials are correct, with the way data is shared and sold between services, it’s entirely possible that HIV status data ended up in the hands of advertisers. Grindr data has been purchased and used to out people, and adding HIV status into the mix makes the situation even worse. But even if you’re not on Grindr, your data can be harvested and exploited like this… unless we have data protection legislation preventing it. Which, in the US, we don’t.

China faces Big Tech worker exodus

Nutshell: Many Big Tech employees in China are burning out and quitting to rest or found their own companies.

More: China’s “FAANG”[1] equivalent is “BAT” (Baidu, Alibaba, and Tencent). At the end of last year, they had 364,477 employees, a drop of nearly 25,000 (or nearly 6.5%) from a year before. While some of this is because of layoffs, many people are resigning because of BAT’s notoriously grueling work culture. While the authorities have been trying to crack down on “996” culture (working 9am-9pm, 6 days a week), enforcement has been weak.

Why you should care: China is facing economic headwinds and high youth unemployment, but many of the people quitting in this wave are going on to found other ventures, which could help revitalize China’s tech sector by encouraging more startups. You may not care about that because you probably don’t work in the Chinese tech sector, but in an age of increased geopolitical tensions and tech competition with China, this could change the dynamics.

First Autonomous Racing League gets off to a slow start

Nutshell: The inaugural race of the Abu Dhabi Autonomous Racing League took an hour to complete all seven laps,[2] and only half of the cars finished.

More: The four cars tried mightily, but during trials struggled with spins, crashing, and just going “off the track to take a little break” (#relatable). And after one car spun out during the race, triggering a yellow caution flag, the others interpreted the rules a bit too literally and stopped on the track across from the beached car because you aren’t supposed to overtake (running) cars under a yellow flag. The commentators were admirable sports about it all, describing how one car just “lost it on the braking” and praising the historic nature of the race.

Why you should care: While highly amusing, there is possibly some practical utility to this. There’s a long history of trickle-down from automotive racing to consumer vehicles. You have Formula 1 to thank for paddle shifters, active suspension, and advancements in synthetic fuel. Clearly there’s a long way to go, but if data or technology from this league helps develop autonomous driving algorithms, we can all forgive the blue car its little nap.

Extra Reckoning

If you’re not watching The Circle on Netflix, you should be. Billed as “a social media competition,” the show isolates about a dozen people in individual camera-filled apartments. They set up profiles and can communicate only through a chat interface, never seeing each other face-to-face. Every few days, all the players rank each other, and the top two players become “Influencers” and can eliminate another player. At the end of the game, the top-ranked player in the final rankings wins $100,000. Throughout, there are twists and turns and lots of drama, but it’s also a genuinely interesting commentary on our relationship to social media, online relationships, and authenticity (or so I tell myself to justify my viewing).

Players don’t have to play as themselves; they can “catfish” as someone else, and many people do so in order to appear more “likable” to the group. This in itself is a commentary on what kinds of people we think (or that we think others think) are appealing online, but it also creates an interesting game dynamic where sometimes people become fixated on ferreting out the catfish rather than the true point of the game, which is building alliances to make yourself more popular (but not too popular, lest you paint a target on your back).

Spoilers ahead for Season 6, episodes 1-5

This season had an extra twist: one of the early players was actually an AI chatbot. Basically, the Netflix team fine-tuned a large language model (LLM) on conversations from past seasons of the show and then had it generate a profile and let it loose.[3] The AI generated a profile of a 26-year-old white guy named Max from Wisconsin. He claimed to be working as a veterinary intern and used a man with a dog as his profile picture (not AI-generated but donated by stand-up comedian Griffin James). In an “interview,” the LLM said that it chose Max “to have attributes that would be both relatable and approachable to a diverse set of players, thereby facilitating more meaningful interactions,” including its name (“common and easy-to-remember”), occupation (“suggests a compassion and a love for animals, which are traits that are universally admirable”), and Wisconsinness (chosen to “arouse minimal suspicion while maximizing relatability among a wide range of players”). And indeed, players liked Max, even forming alliances with it. And, when the show informed contestants that there was an AI player in their midst, the resultant witch hunt burned Steffi, whose encyclopedic knowledge of astrology was deemed suspicious, at the stake instead of Max. Even the AI engineer in the game didn’t detect it. At that point, the producers pulled the plug on Max, but I would have loved to see it go all the way.

Having Max in the game confirms what I suspected about the show all along: that many of the players’ interactions are formulaic, even algorithmic, designed to build alliances and popularity. Vulnerability is strategically deployed to build “authenticity,” but even this is something Max was able to emulate. This is also a strategy deployed on The Bachelor/Bachelorette, which is even more terrifying because at the end, you’re not just hoping to be liked, you’re hoping to get married. (Indeed, these shows are so formulaic that they’ve resulted in an excellent blog post and book called “How to Win The Bachelor” laying out the dominant strategies.) But, even though chatbots don’t have actual experiences, they can still be perceived as “authentic,” which is one reason why people are falling in love with AI chatbots.

There’s a lot of philosophical debate over whether AI can have internal experiences or mental states. Ultimately, we fall back on how we perceive the AI and its communications. It’s the same reason that, even though we can’t know for sure that other people are actually conscious (see solipsism and the “philosophical zombie” thought experiment), we still treat them as if they are: if we didn’t, we would risk mistreating thinking, feeling beings. But LLMs aren’t thinking, feeling beings, and so humanizing or over-anthropomorphizing them is dangerous. Even one of the creators of Max alternates between referring to it as “it” and “he,” sometimes in the same sentence, showing how easy it is to perceive these as humanlike. And while I don’t think there’s anything inherently wrong with AI companionship, if we end up perceiving them as more human than humans and replacing human interactions with AI interactions, we’re entering dangerous territory. But that’s (hopefully) far down the line. In the meantime, I propose a new Turing Test: try to get AI to win The Circle.

I Reckon…

that if Neopets is back, we can revive Webkinz.

[1] Acronym for the Big Tech combo of Facebook, Apple, Amazon, Netflix, Google; now the less-catchy “MAMAA” (Meta, Amazon, Microsoft, Apple, Alphabet).

[2] For context, F1 cars can complete a lap of this circuit in under 90 seconds.

[3] It sounds like there was some curation/clever prompt engineering involved, but the producers claim the messages and strategy were all generated by the LLM.

Thumbnail is a close-up of a Monet painting from his studio in Giverny.